The Basic Formal Ontology (BFO) offers a simple, elegant process model. It adds alethic and teleological semantics to the more procedural models, among which I would include NIST’s Process Specification Language (PSL) along with BPMN.

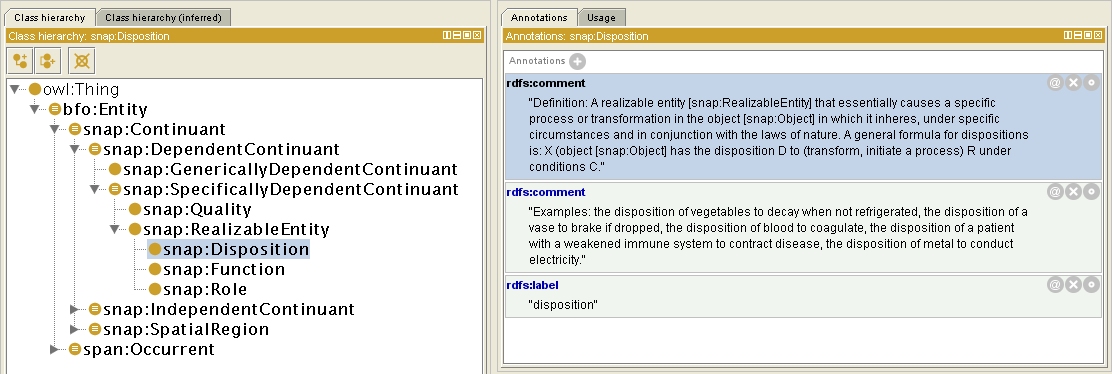

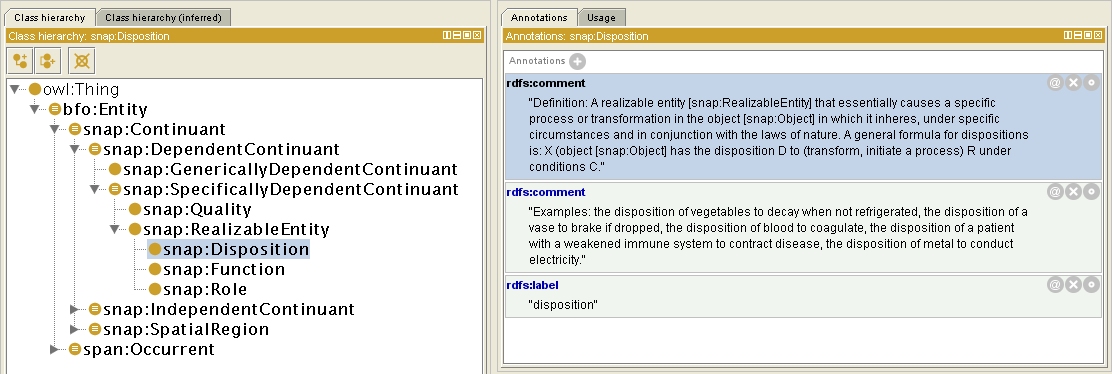

Although ‘alethic’ typically refers to the necessary vs. the possible, it clearly subsumes the probable or expected (albeit excluding deontics [0]). Consider the notion of ‘disposition’ (shown below as rendered in Protégé):

Cells, for example, are disposed to undergo the cell cycle, which consists of interphase, mitosis, and cytokinesis. Iron is disposed to rust. Certain customers might be disposed to comment, complain, or inquire.

Disposition is useful because it captures things that have an unexpectedly high probability of occurring [1] but that need not be a necessary part of a process. It seems, however, to be missing from most business process models, even though it is prevalent in the soft and hard sciences and important in medicine.

Disposition is distinct from what should occur or be attempted next in a process: just because something is disposed to happen does not mean that it should or will. Although disposition is clearly related to business events and processes, it is surprisingly absent from business models and from CEP/BPM tooling. [2]
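
To make the distinction concrete, here is a minimal sketch in Python. It is not part of BFO or of any BPM product. The class names, the `lift` measure, the customer segment, and all of the numbers are hypothetical, chosen only to illustrate a disposition as an elevated likelihood that sits beside, rather than inside, the prescribed steps of a process.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Disposition:
    """A tendency of some kind of bearer to take part in some process."""
    bearer: str         # kind of entity bearing the disposition
    realization: str    # process in which the disposition may be realized
    probability: float  # assumed likelihood for this kind of bearer
    baseline: float     # assumed likelihood in the wider population

    @property
    def lift(self) -> float:
        # How much more likely the realization is for the bearer
        # than for the wider population.
        return self.probability / self.baseline


@dataclass(frozen=True)
class ProcessModel:
    """A procedural model: only what should be attempted, in order."""
    name: str
    required_steps: tuple[str, ...]


def anticipated(dispositions: list[Disposition], bearer: str,
                min_lift: float = 1.5) -> list[str]:
    """Realizations worth anticipating for a bearer; none are prescribed."""
    return [d.realization for d in dispositions
            if d.bearer == bearer and d.lift >= min_lift]


# Hypothetical data: a made-up customer segment and made-up probabilities.
dispositions = [
    Disposition("new customer", "complain", probability=0.30, baseline=0.10),
    Disposition("new customer", "inquire", probability=0.12, baseline=0.10),
]
handle_order = ProcessModel("handle order", ("receive", "fulfill", "invoice"))

likely_events = anticipated(dispositions, "new customer")
```

In this sketch, complaining is anticipated for the segment yet appears nowhere in `handle_order.required_steps`, which is the gap the paragraph above describes.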

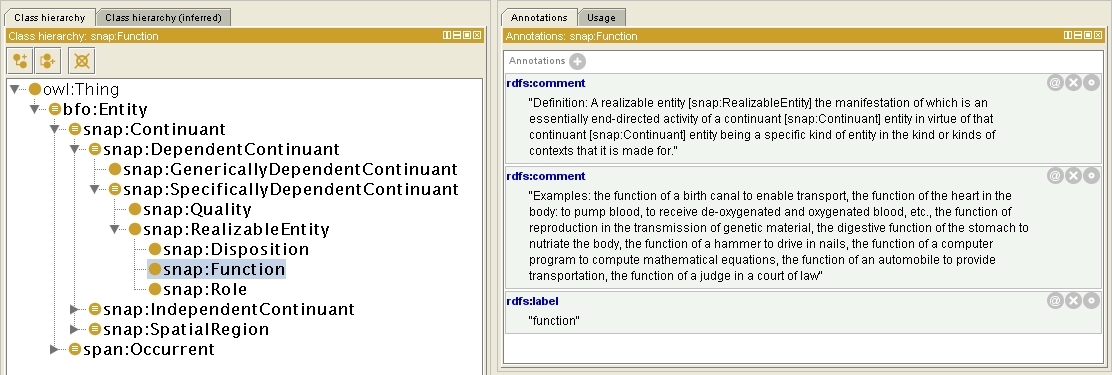

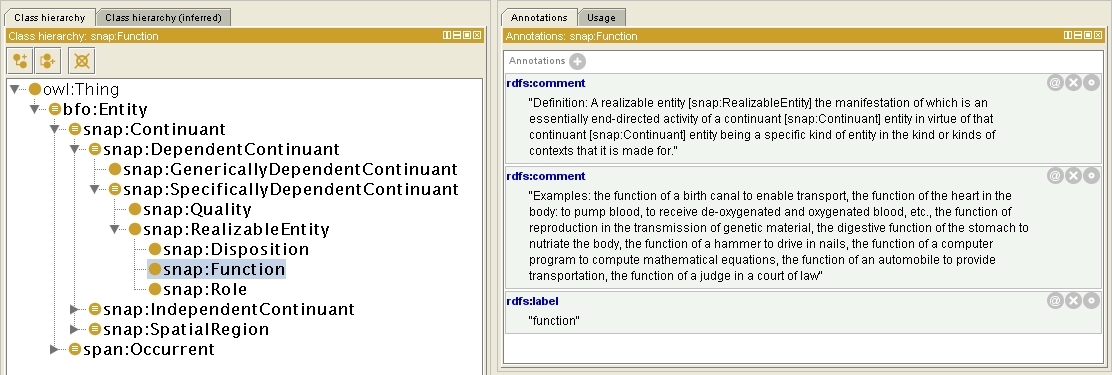

A teleological aspect of BFO is the notion of purpose or intended ‘function’, as shown below:

Function is about what something is expected to do or what it is for. For example, what is the function of an actuary? Representing such functionality of individuals or departments within enterprises may be atypical today, but is clearly relevant to skills-based routing, human resource optimization and business modeling in general.
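
As a rough illustration of the skills-based routing point, the sketch below records functions against their bearers and routes a task to whoever has the matching function. The names (`Bearer`, `route`) and the example functions are hypothetical and are not drawn from BFO itself.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Bearer:
    """An individual or department together with what it is for."""
    name: str
    functions: frozenset[str]


def route(required_function: str, bearers: list[Bearer]) -> list[str]:
    """Names of the bearers whose function matches what the task needs."""
    return [b.name for b in bearers if required_function in b.functions]


# Hypothetical organization; the function labels are illustrative only.
staff = [
    Bearer("actuary", frozenset({"assess risk", "price policy"})),
    Bearer("claims adjuster", frozenset({"settle claim"})),
]

risk_assessors = route("assess risk", staff)
```

Keeping function as explicit data rather than burying it in routing rules is what makes it available for measurement and reporting as well.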


Understanding disposition and function is clearly relevant to business modeling (including organizational structure), planning and performance optimization. Without an understanding of disposition, anticipation and foresight will be lacking. Without an understanding of function, measurement, reporting, and performance improvement will be lacking.

[0] SBVR does a nice job with alethic and deontic augmentation of first order logic (i.e., positive and negative necessity, possibility, permission, and preference).

[1] Thanks to BG for “politicians are disposed to corruption”, which indicates a population that is more likely than a larger population to be involved in certain situations.

[2] Cyc’s notion of ‘disposition’ or ‘tendency’ is focused on properties rather than probabilities, as in the following citation from OpenCyc. Such a notion is similarly lacking from most business models, probably because its utility requires more significant reasoning and business intelligence than is common within enterprises.

The collection of all the different quantities of dispositional properties; e.g. a particular degree of thermal conductivity. The various specializations of this collection are the collections of all the degrees of a particular dispositional property. For example, ThermalConductivity is a specialization of this collection and its instances are usually denoted with the generic value functions as in (HighAmountFn ThermalConductivity).

Ian Ayres, the author of Super Crunchers, gave a keynote at Fair Isaac’s Interact conference in San Francisco this morning. He made a number of interesting points related to his thesis that intuitive decision making is doomed. I found his points on random trials much more interesting, however.
