At the SemTech conference last week, a few companies asked me how to respond to IBM’s Watson, given my involvement with rapid knowledge acquisition for deep question answering at Vulcan. My answer depends on whether there is a particular subject-matter focus, but it essentially involves extending their approach with deeper knowledge and more emphasis on logical entailment in addition to textual entailment.

Today, in a discussion in the LinkedIn NLP group, there was some interest in finding more technical details about Watson. A year ago, IBM published its most detailed technical description of Watson to date in the IBM Journal of Research and Development. Most of those journal articles are available for free on the web. For convenience, here are my bookmarks to them.

- Question analysis: How Watson reads a clue

- Deep parsing in Watson

Good technical details on the two parsing approaches taken. However, deep parsing (such as ours, e.g., using the ERG in Project Sherlock) combined with disambiguation is a viable approach for acquiring the background knowledge that IBM dismisses too quickly (see below). Note that to train NLP systems in new domains, you have to go through the same process for thousands of sentences, so disambiguation technology like that in the Linguist is appropriate even if proofs rely more on textual entailment than on logical deduction.

- Textual resource acquisition and engineering

- Automatic knowledge extraction from documents

- Finding needles in the haystack: Search and candidate generation

- Typing candidate answers using type coercion

- Textual evidence gathering and analysis

- Relation extraction and scoring in DeepQA

- Structured data and inference in DeepQA

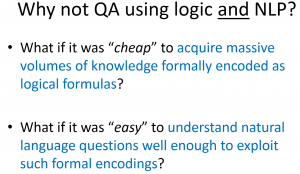

IBM takes a hard line against deep knowledge here. They have the upper hand in the argument due to their impressive results, but more precise knowledge would only improve their performance. You can find more on this debate in the Deep QA FAQ and this presentation which includes the the followings slide:

Continue reading “Deep question answering: Watson vs. Aristotle”