A decade or so ago, we were debating how to educate Paul Allen’s artificial intelligence in a meeting at Vulcan headquarters in Seattle with researchers from IBM, Cycorp, SRI, and other places.

We were talking about how to “engineer knowledge” from textbooks into formal systems like Cyc or Vulcan’s SILK inference engine (which we were developing at the time). Although some progress had been made in prior years, the onus of acquiring knowledge using SRI’s Aura remained too high and the reasoning capabilities that resulted from Aura, which targeted University of Texas’ Knowledge Machine, were too limited to achieve Paul’s objective of a Digital Aristotle. Unfortunately, this failure ultimately led to the end of Project Halo and the beginning of the Aristo project under Oren Etzioni’s leadership at the Allen Institute for Artificial Intelligence.

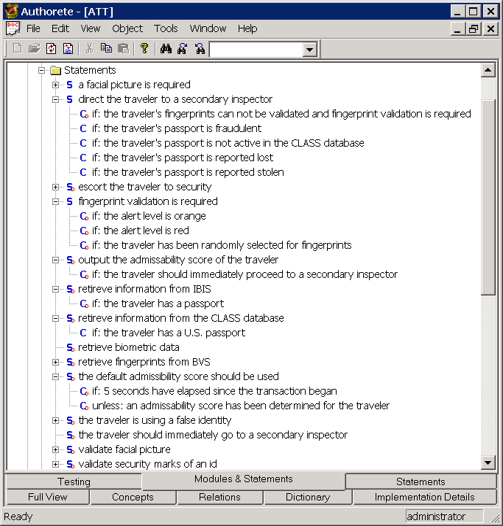

At that meeting, I brought up the idea of simply translating English into logic, as my former product called “Authorete” did. (We renamed it before Haley Systems was acquired by Oracle, prior to the meeting.)