In preparing for my workshop at the Business Rules Forum in Las Vegas on November 5th, I have focused on the following needs in reasoning about processes, events, and time:

- Reasoning at a point within a [business] process

- Reasoning about events that occur over time

- Reasoning about a [business] process (as in deciding what comes next)

- Reasoning about and across different states (as in planning)

Enterprise decision management (EDM) addresses the first. Complex event processing (CEP) is concerned with the second. In theory, EDM could address the third but it does not in practice. This third item includes the issue of governing and defining workflow or event-driven business processes rather than point decisions within such business processes.
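To make the contrast between the first two items concrete, here is a minimal Python sketch. It is only an illustration under my own assumptions, not how any EDM or CEP product works: `Order`, `approve_order`, `FailedLoginDetector`, the credit limits, and the 60-second window are all hypothetical. The point decision looks only at the facts in hand at one step, while the event pattern has to remember what happened earlier.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Order:
    # Hypothetical facts available at one step of a process.
    amount: float
    customer_tier: str


def approve_order(order: Order) -> bool:
    """Point decision: depends only on the facts presented at this step."""
    limit = 10_000 if order.customer_tier == "gold" else 1_000  # assumed limits
    return order.amount <= limit


class FailedLoginDetector:
    """Event pattern: fires when `threshold` failures fall within `window` seconds.

    Assumes timestamps arrive in non-decreasing order.
    """

    def __init__(self, threshold: int = 3, window: float = 60.0):
        self.threshold = threshold
        self.window = window
        self.times = deque()

    def on_failure(self, timestamp: float) -> bool:
        self.times.append(timestamp)
        # Forget events that have slid out of the time window.
        while self.times and timestamp - self.times[0] > self.window:
            self.times.popleft()
        return len(self.times) >= self.threshold
```

Calling `approve_order` twice with the same order always gives the same answer. Calling `on_failure` twice with the same timestamp may not, because the detector carries state across events. That difference in what must be remembered is the gap between the first item and the second.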

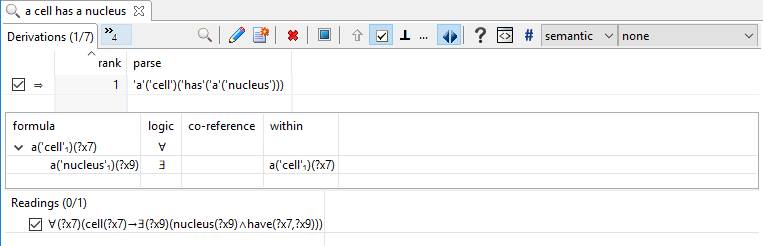

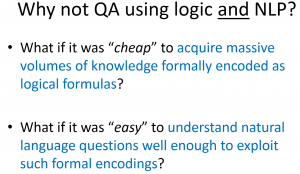

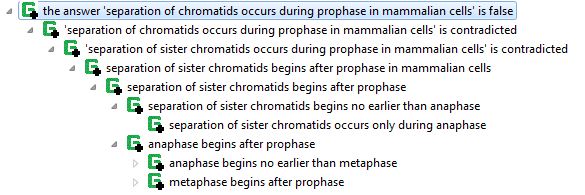

Business applications of rules have not advanced to include the fourth item. That is to say, business has yet to make significant use of the reasoning and problem-solving techniques that are common in artificial intelligence. For example, the question-answering systems being developed for the semantic web can do more than retrieve data: they perform inference. Commercial database and business intelligence queries are typically much less intelligent, which presents a number of opportunities that I don’t want to go into here but would be happy to discuss with interested parties. The point here is that business does not use reasoning much at all, let alone to search across the potential ramifications of alternative decisions or courses of action before making or taking one. Think of playing chess, or of a soccer-playing robot planning how to advance the ball toward the goal. Why shouldn’t business strategies or tactical business decisions benefit from a little simulated look-ahead along with a lot of inference and evaluation?
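As a rough illustration of what "a little simulated look-ahead" could mean, here is a minimal Python sketch of a depth-limited search over alternative courses of action. Everything in it is an assumption made for the example: the function `look_ahead`, the toy states and actions, and the scores are hypothetical, and the search is single-agent and deterministic, so it is far simpler than what a chess program or a planner actually does.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical model: each state maps action names to the state they lead to.
Transitions = Dict[str, Dict[str, str]]


def look_ahead(
    state: str,
    transitions: Transitions,
    evaluate: Callable[[str], float],
    depth: int,
) -> Tuple[float, Optional[str]]:
    """Return (best reachable score, first action toward it) within `depth` steps."""
    options = transitions.get(state, {})
    if depth == 0 or not options:
        # Horizon reached or nothing left to do: score the state itself.
        return evaluate(state), None
    best_score, best_action = float("-inf"), None
    for action, next_state in options.items():
        score, _ = look_ahead(next_state, transitions, evaluate, depth - 1)
        if score > best_score:
            best_score, best_action = score, action
    return best_score, best_action


# Toy example with assumed values: discounting looks better one step ahead,
# investing looks better two steps ahead.
transitions = {
    "start": {"discount": "price_war", "invest": "building"},
    "price_war": {"hold": "thin_margins"},
    "building": {"launch": "new_product"},
}
values = {"start": 0, "price_war": 2, "building": -1,
          "thin_margins": -3, "new_product": 5}

print(look_ahead("start", transitions, values.__getitem__, depth=1))  # (2, 'discount')
print(look_ahead("start", transitions, values.__getitem__, depth=2))  # (5, 'invest')
```

With a horizon of one step the sketch picks the action that looks best immediately. With two steps it prefers the action whose consequences are better, which is the kind of reasoning across states that the fourth item is about.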

Even though I have recently become more interested in the fourth of these areas, I expect the audience at the Business Rules Forum to be most interested in the first two. Some attendees will also have experience with the complex business processes that are common in larger enterprises, and they will be interested in the third item. Only those working on the most advanced applications, such as biochemical process planning, will be interested in the fourth. I don’t expect many of them to attend!

The notion of enterprise decision management (EDM) is focused on point decision making within a business process. For enterprises that are concerned with governing business processes, a model of the process itself must be available to the business rules that govern its operation. I’ve written elsewhere about the need for an ontology of events and processes in order to effectively integrate business process management (BPM) with business rules. Here, and in the workshop, I intend to get a little more specific about the requirements, what is lacking in current standards and offerings, and what we’re trying to do about it.