Many users end up wanting to import sentences into the Linguist rather than type or paste them in one at a time. One way to do this is to right-click on a group within the Linguist and import a file of sentences, one per line, into that group. But if you want to import a large document and retain its outline structure, some application-specific use of the Linguist APIs may be the way to go.

We’ve written up how to import a whole web site on California Sales & Use Tax.

There are quite a few ways to do this, but we took a step-by-step approach:

- walk the web site and extract the content of the law into a number of files

- process the files into the content of individual sections within an outline structure

- break the content down into individual sentences

- create the outline structure and populate it with the sentences
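To make the middle steps concrete, here is a minimal Python sketch that turns one HTML page into outline sections and splits each section into sentences. It is not the Linguist API and not the code from the write-up: `SectionParser`, `split_sentences`, and `build_outline` are hypothetical names, heading tags are assumed to mark the outline levels, and the regular-expression splitter is deliberately naive.

```python
import re
from html.parser import HTMLParser

# Assumption: heading tags h1..h6 carry the outline level of each section.
HEADINGS = {"h1": 1, "h2": 2, "h3": 3, "h4": 4, "h5": 5, "h6": 6}


class SectionParser(HTMLParser):
    """Collect one section per heading, with the text that follows it."""

    def __init__(self):
        super().__init__()
        self.sections = []        # dicts with keys: level, title, text
        self._in_heading = False

    def handle_starttag(self, tag, attrs):
        if tag in HEADINGS:
            self.sections.append({"level": HEADINGS[tag], "title": "", "text": ""})
            self._in_heading = True

    def handle_endtag(self, tag):
        if tag in HEADINGS:
            self._in_heading = False
        elif tag in ("p", "li", "div") and self.sections:
            # Keep text from adjacent blocks from running together.
            self.sections[-1]["text"] += " "

    def handle_data(self, data):
        if not self.sections:
            return  # ignore anything before the first heading
        key = "title" if self._in_heading else "text"
        self.sections[-1][key] += data


def split_sentences(text):
    """Naive split: after . ! or ? when followed by space and a capital or '('."""
    text = " ".join(text.split())
    if not text:
        return []
    return re.split(r"(?<=[.!?])\s+(?=[A-Z(])", text)


def build_outline(html):
    """Return a flat list of sections; 'level' records the outline depth."""
    parser = SectionParser()
    parser.feed(html)
    parser.close()
    return [
        {
            "level": s["level"],
            "title": " ".join(s["title"].split()),
            "sentences": split_sentences(s["text"]),
        }
        for s in parser.sections
    ]
```

Each resulting section could then be created as a group, with its sentences added to it, through whatever import mechanism you use. The splitter will mis-split at abbreviations followed by a capitalized word (for example "U.S. Code"), so real regulatory text needs a more careful approach.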

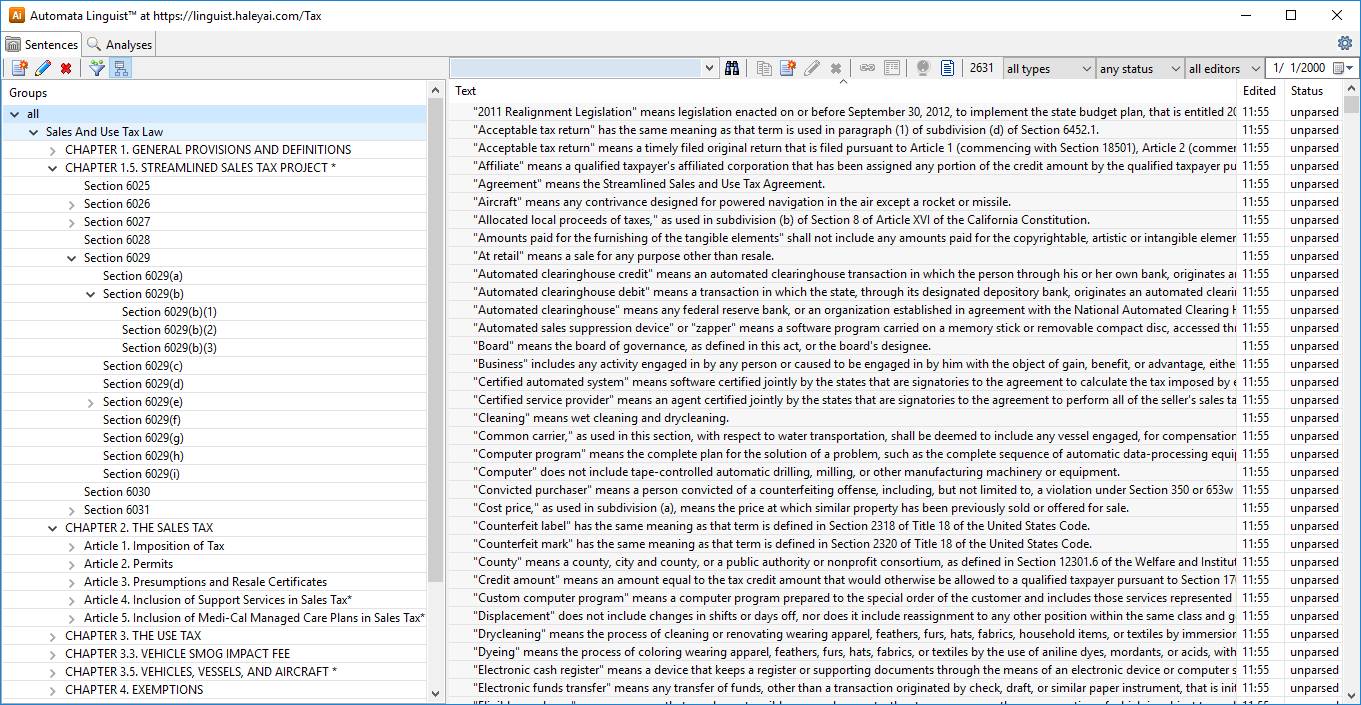

The resulting knowledge base is ready for text analysis, automated parsing, and disambiguation, as shown here:

Our favorite case is importing pages from web sites, such as Wikipedia or regulatory text that appears on-line. For the most part, the text is available as HTML. In some cases, it’s available as Word documents. And sometimes it’s only available as PDF.

- PDF documents can be saved as text, imported into Word, or read programmatically

- Microsoft Word is no problem since it can be saved as HTML

- The Linguist has utilities that extract the HTML from ePub textbooks

- The Linguist also has utilities that extract text from PDFs, but PDFs typically require more work:

  - PDF can be saved to text (possibly with in-line headers & footers)

  - PDF can be imported by Word (sometimes losing headers & flow)

  - Multi-column PDF can be difficult if the flow of the document is not clear

  - PDF can be difficult if there is excess hyphenation due to even justification

- HTML can be transformed into XML, which is easy to process in various languages (e.g., Java).
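As an illustration of the extra work PDF text tends to need, the following Python sketch rejoins words hyphenated across line breaks and unwraps hard-wrapped lines. The function name `clean_pdf_text` is hypothetical and this is not one of the Linguist utilities; it assumes the PDF has already been saved as plain text with blank lines between paragraphs.

```python
import re


def clean_pdf_text(raw):
    """Rejoin end-of-line hyphenation and unwrap hard line breaks."""
    # Join "exemp-\ntion" into "exemption" when a lowercase letter follows the break.
    # Assumption: a hyphen at end of line is a justification artifact, not a real hyphen.
    text = re.sub(r"(\w)-\n(?=[a-z])", r"\1", raw)
    # Blank lines separate paragraphs; single newlines inside a paragraph become spaces.
    paragraphs = re.split(r"\n\s*\n", text)
    return "\n\n".join(" ".join(p.split()) for p in paragraphs if p.strip())
```

This heuristic will also merge genuine compounds that happen to break at the hyphen (for example "tax-" followed by "exempt"), so checking the joined word against a dictionary is a sensible refinement. In-line headers, footers, and multi-column flow are not handled here.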

In the article we discuss a number of finer points, especially with regard to tokenization and sentence splitting.