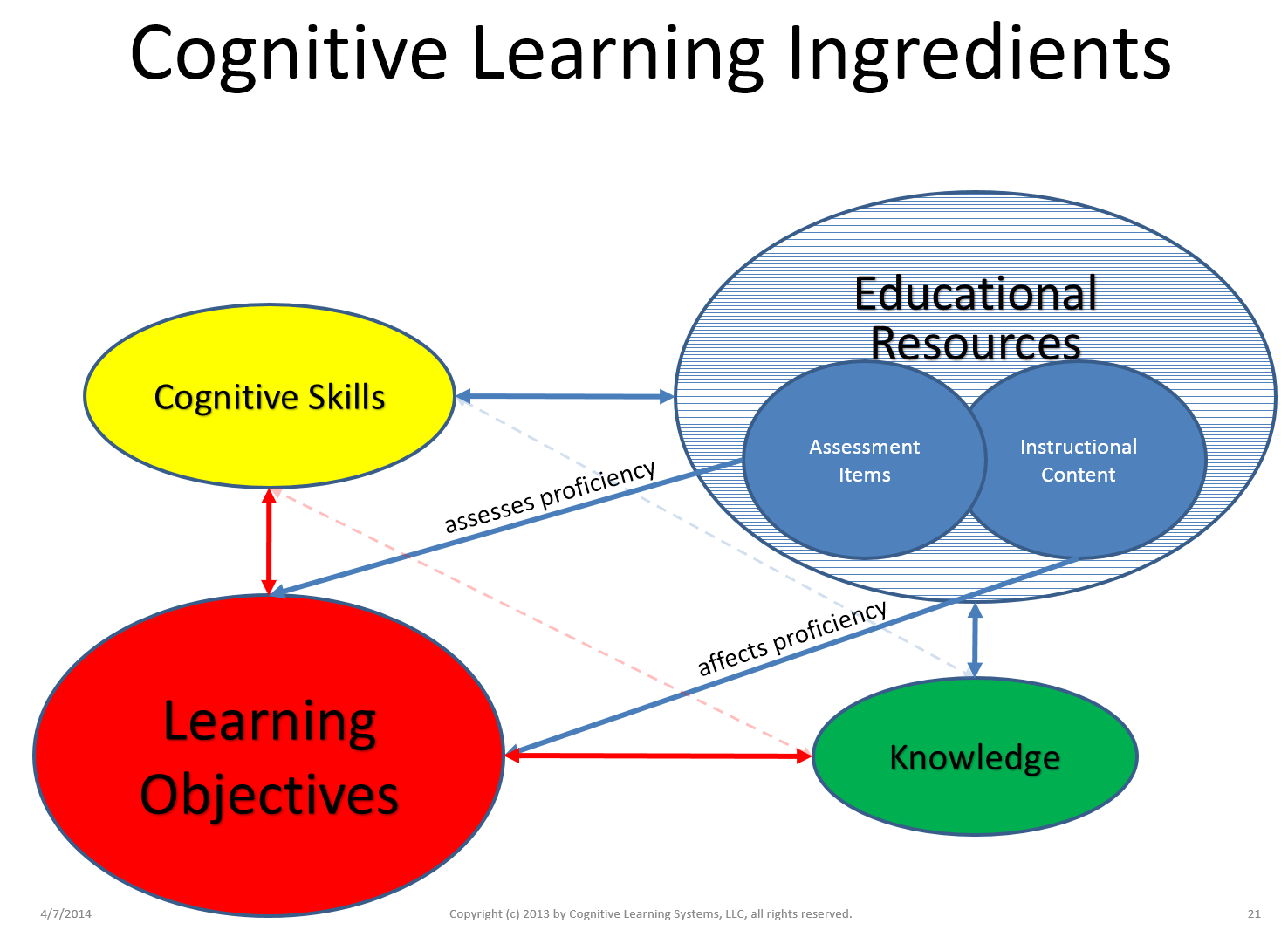

Thesis: the overwhelming investment in educational technology will have its highest economic and social impact in technology that directly increases the rate of learning and the amount learned, not in technology that merely provides electronic access to abundant educational resources or on-line courses. More emphasis is needed on the cognitive skills and knowledge required to achieve learning objectives and how to assess them.

Cognitive modeling of assessment items

Look for a forthcoming post on the broader subject of personalized e-learning. In the meantime, here’s a tip on writing good multiple-choice questions:

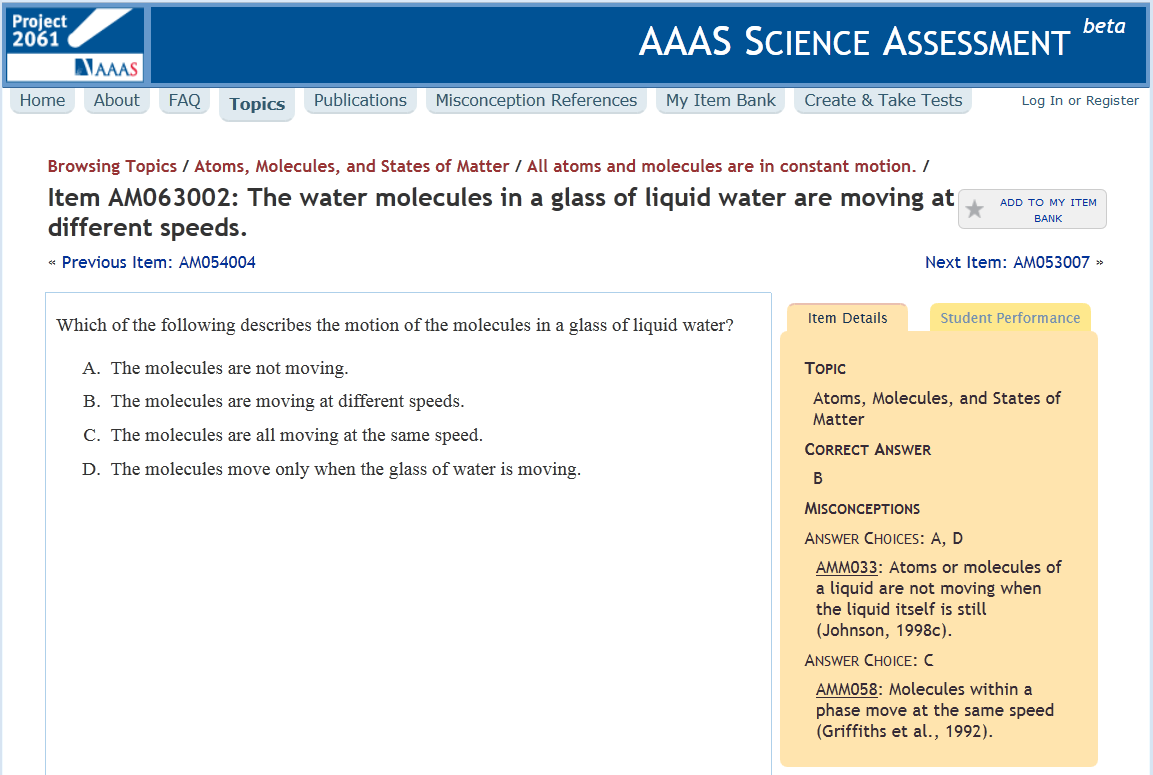

- target wrong answers to diagnose misconceptions.
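The tip above can be sketched in code. This is a minimal illustration, not any particular product's data model: each wrong answer (distractor) carries a tag for the misconception it is meant to reveal, and a learner's wrong choices are tallied into a rough diagnosis. The `Choice`, `Item`, and `diagnose` names, the sample question, and the misconception tags are all invented for this example.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Choice:
    text: str
    # Hypothetical tag naming the misconception this distractor targets.
    # None marks the correct answer.
    misconception: Optional[str] = None


@dataclass(frozen=True)
class Item:
    stem: str
    choices: Dict[str, Choice]  # choice label -> Choice


# Illustrative item written for this sketch, not taken from any item bank.
ITEMS = {
    "q1": Item(
        stem="Why is it warmer in summer than in winter?",
        choices={
            "A": Choice("Earth's axis is tilted relative to its orbit."),
            "B": Choice("Earth is closer to the sun in summer.",
                        misconception="seasons-caused-by-distance"),
            "C": Choice("The sun gives off more heat in summer.",
                        misconception="sun-output-varies-by-season"),
        },
    ),
}


def diagnose(items: Dict[str, Item], responses: Dict[str, str]) -> Counter:
    """Count the misconceptions suggested by a learner's wrong answers."""
    tally: Counter = Counter()
    for item_id, label in responses.items():
        choice = items[item_id].choices[label]
        if choice.misconception is not None:
            tally[choice.misconception] += 1
    return tally


if __name__ == "__main__":
    print(diagnose(ITEMS, {"q1": "B"}))
```

A distractor with no tag tells you only that the learner was wrong; a tagged one suggests why, which is what an adaptive system needs in order to choose the next step.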

Better approaches to adaptive education model the learning objectives that questions assess. The following question from the American Association for the Advancement of Science is an example; it goes the extra mile by also modeling the misconceptions underlying incorrect answers:

Suggested questions: Inquire vs. Knewton

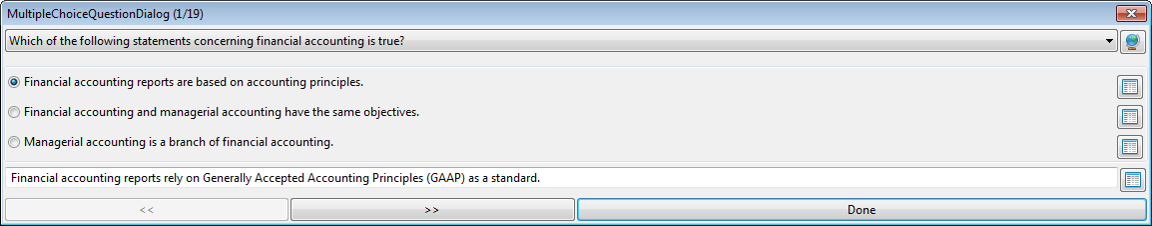

Knewton is an interesting company providing a recommendation service for adaptive learning applications. In a recent post, Jonathon Goldman describes an algorithmic approach to generating questions. The approach focuses on improving the manual authoring of test questions (known in the educational realm as “assessment items”). It references work at Microsoft Research on the problem of synthesizing questions for an algebra learning game.

We agree that more automated generation of questions can enrich learning significantly, as has been demonstrated in the Inquire prototype. For information on a better, more broadly applicable approach, see the slides beginning around page 16 in Peter Clark’s invited talk.

What we think is most promising, however, is understanding the reasoning and cognitive skill required to answer questions (i.e., Deep QA). The most automated way to support this is with machine understanding of the content sufficient to answer the questions by proving answers (i.e., multiple choices) right or wrong, as we discuss in this post and this presentation.

Automatic Knowledge Graphs for Assessment Items and Learning Objects

As I mentioned in this post, we’re having fun layering questions and answers with explanations on top of electronic textbook content.

The basic idea is to couple a graph structure of questions, answers, and explanations into the text using semantics. The trick is to do that well and automatically enough that we can deliver effective adaptive learning support. This is analogous to the knowledge graph that users of Knewton’s API create for their content. The difference is that we get the graph from the content, including the “assessment items” (that’s what educators call questions, among other things). Essentially, we parse the content, including the assessment items (i.e., the questions and each of their answers and explanations). The result of this parsing is, as we’ve described elsewhere, precise lexical, syntactic, semantic, and logical understanding of each sentence in the content. But we don’t have to go nearly that far to exceed the state of the art here.
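To make the graph idea concrete, here is a deliberately shallow sketch. It is not the parsing-based approach described above: it swaps in simple word overlap as a stand-in for semantics, only to show the shape of a graph that ties questions, answers, and explanations to sentences in the text. The function names, the edge labels (`has_answer`, `explained_by`, `about`), the `min_overlap` threshold, and the sample content are all assumptions made for illustration.

```python
import re

# Small illustrative stopword list; a real system would use something richer.
STOPWORDS = {"the", "a", "an", "of", "in", "is", "are", "to", "and",
             "what", "which", "that", "it", "by", "for", "on"}


def terms(text):
    """Lowercased content words of a text (a crude proxy for its meaning)."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def build_graph(sentences, items, min_overlap=2):
    """Return a set of (source, relation, target) triples.

    sentences: sentence id -> text
    items: question id -> {"question": str, "answers": {label: str},
                           "explanation": str}
    """
    triples = set()
    for q_id, item in items.items():
        # Structural edges within the assessment item itself.
        for label in item["answers"]:
            triples.add((q_id, "has_answer", f"{q_id}/{label}"))
        triples.add((q_id, "explained_by", f"{q_id}/explanation"))
        # Link the item to sentences that share enough content words.
        item_terms = terms(item["question"] + " " + item["explanation"])
        for s_id, text in sentences.items():
            if len(item_terms & terms(text)) >= min_overlap:
                triples.add((q_id, "about", s_id))
    return triples


# Made-up content for the sketch.
SENTENCES = {
    "s1": "Mitochondria produce most of the ATP in a cell.",
    "s2": "Ribosomes assemble proteins from amino acids.",
}
ITEMS = {
    "q1": {
        "question": "Which organelle produces most ATP?",
        "answers": {"A": "Mitochondria", "B": "Ribosomes"},
        "explanation": "Mitochondria produce ATP through cellular respiration.",
    },
}

if __name__ == "__main__":
    for triple in sorted(build_graph(SENTENCES, ITEMS)):
        print(triple)
```

Word overlap misses paraphrase and will over-link on common vocabulary, so treat it only as a placeholder for whatever semantic matching is available.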