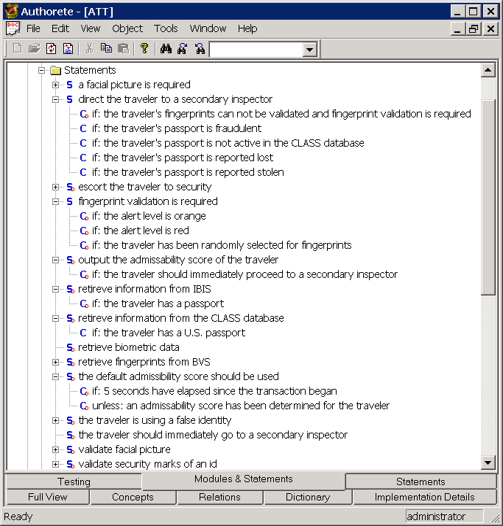

While translating some legal documentation (sales and use tax laws and regulations) into compliance logic, we came across the following sentence (and many more that are even worse):

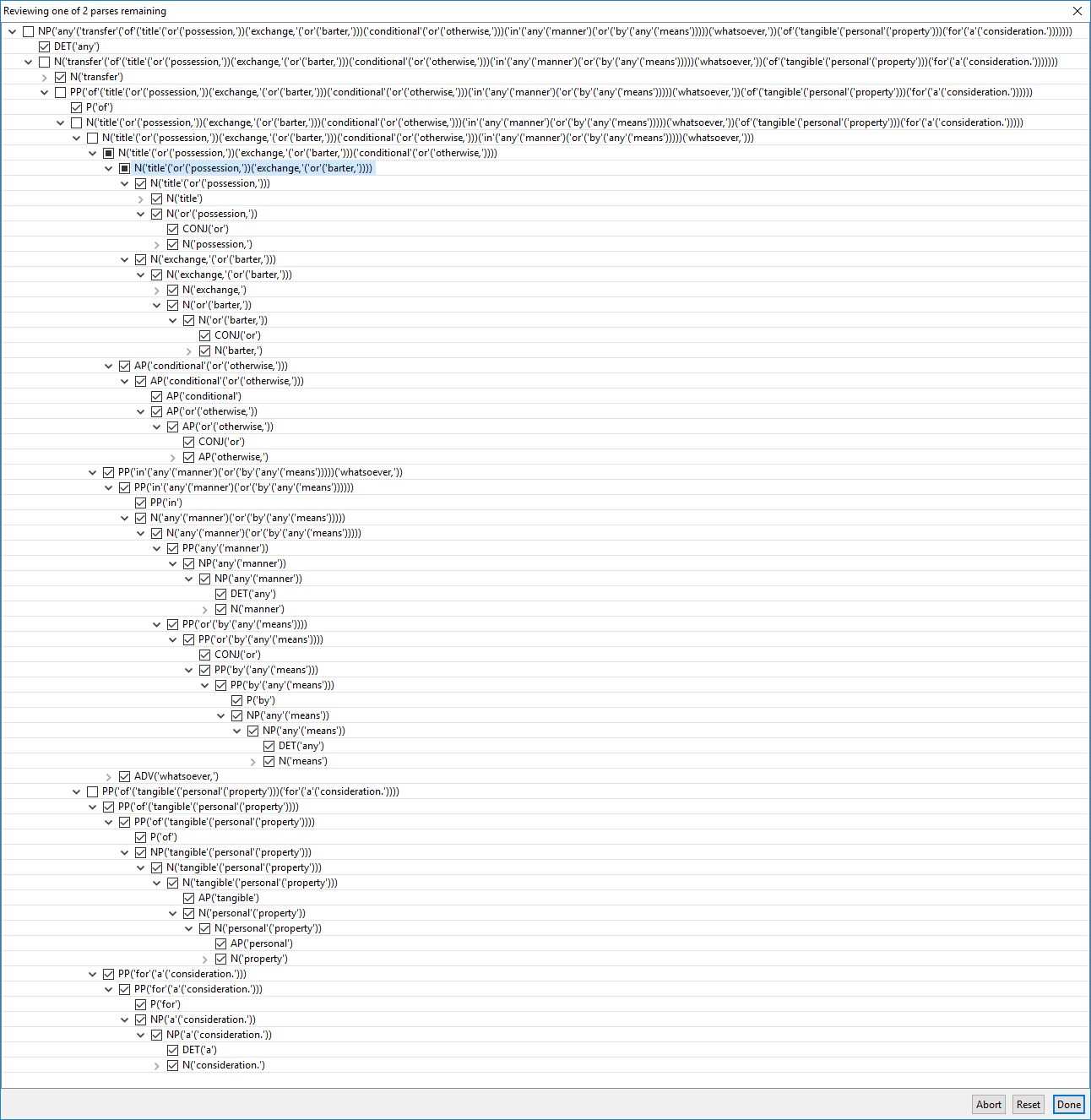

- Any transfer of title or possession, exchange, or barter, conditional or otherwise, in any manner or by any means whatsoever, of tangible personal property for a consideration.

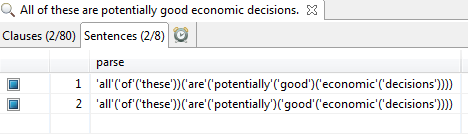

Natural language processing systems choke on sentences like this, both because of their combinatorial ambiguity and because NLP typically lacks knowledge about what can be conjoined with, complement, or modify what.

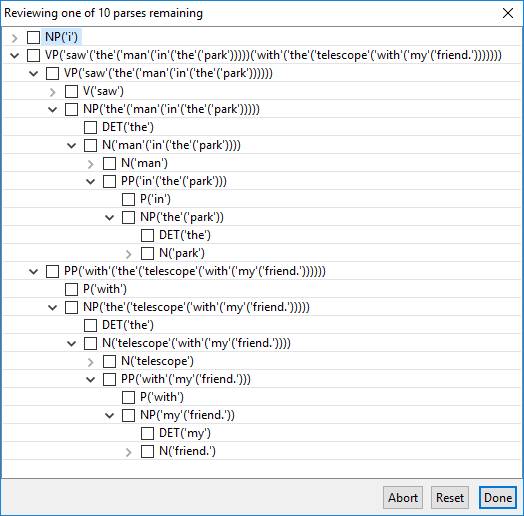

This sentence has many thousands of possible parses. They differ in the scope of each of the ‘or’s, in what is modified by ‘conditional’, ‘otherwise’, or ‘whatsoever’, and in what is complemented by ‘in’, ‘by’, ‘of’, and ‘for’.
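To get a feel for how quickly such choices multiply, here is a minimal Python sketch. It is a toy model, not our parser: it assumes each ambiguity can be treated as a binary bracketing problem, and the names `catalan` and `bracketings` and the four-item `conjuncts` list are ours, chosen only for illustration. The number of binary bracketings of n + 1 items is the n-th Catalan number.

```python
from math import comb


def catalan(n: int) -> int:
    """n-th Catalan number: the count of binary bracketings of n + 1 items."""
    return comb(2 * n, n) // (n + 1)


def bracketings(items):
    """Yield every binary bracketing of a sequence as nested tuples."""
    if len(items) == 1:
        yield items[0]
        return
    # Try every split point, then bracket each side independently.
    for split in range(1, len(items)):
        for left in bracketings(items[:split]):
            for right in bracketings(items[split:]):
                yield (left, right)


# Illustrative assumption: the four nouns joined by commas and 'or's.
conjuncts = ["title", "possession", "exchange", "barter"]
trees = list(bracketings(conjuncts))

# Growth of the bracketing count as more attachable phrases are chained.
growth = [catalan(n) for n in range(1, 11)]

if __name__ == "__main__":
    for tree in trees:
        print(tree)
    print(growth)
```

Four conjuncts alone give 5 bracketings, and a chain of eleven attachable items already gives 16796. The real sentence is harder than this toy suggests, since the choices of conjunction scope, modifier target, and preposition attachment interact rather than simply stacking.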

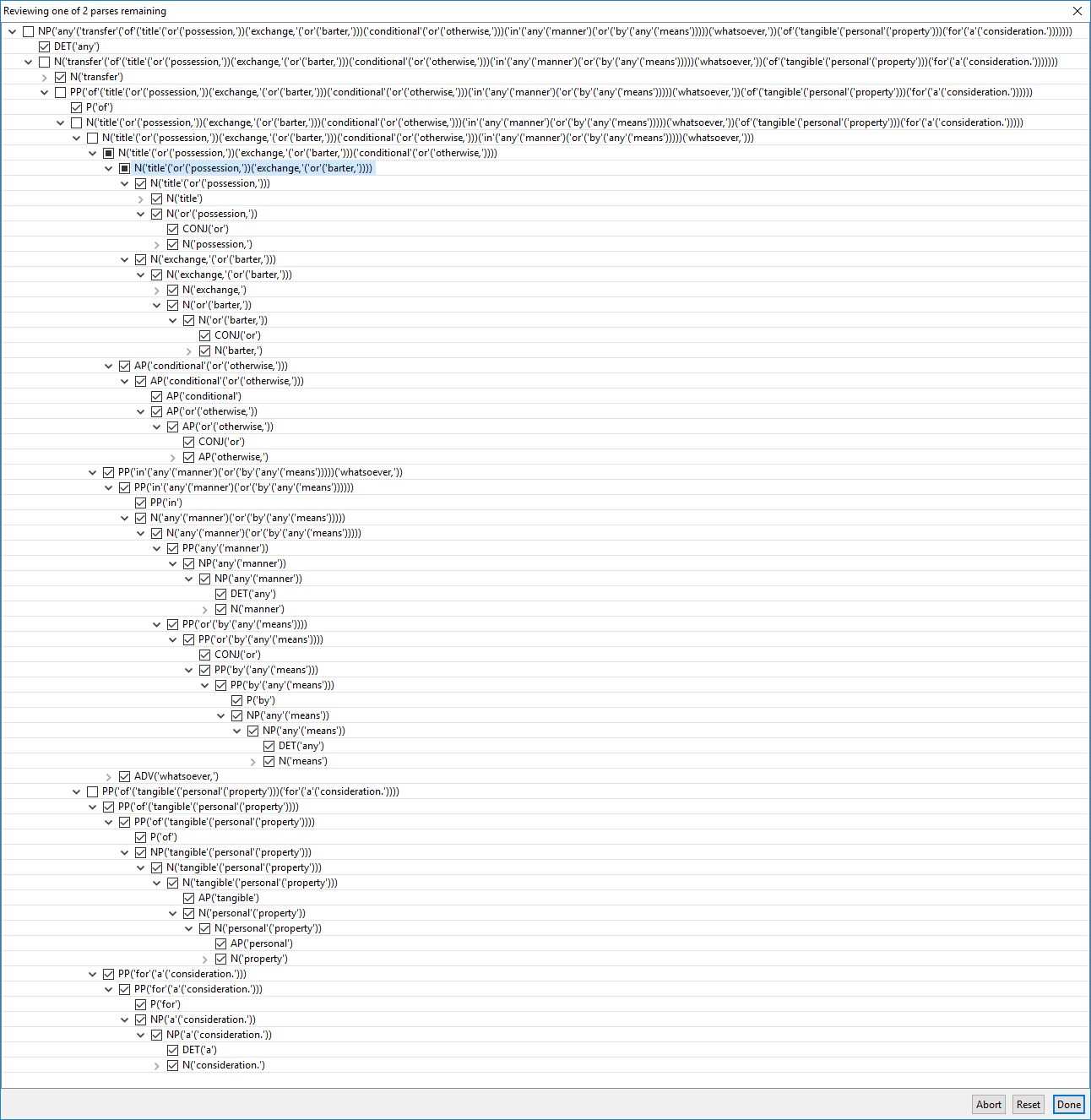

The following shows the two parses that remain after we veto a number of mistakes and confirm some phrases among the 400 highest-ranking parses (a few right or left clicks of the mouse):
