Going on 5 years ago, I wrote part 1. Now, finally, it’s time for the rest of the story.

Continue reading “Confessions of a production rule vendor (part 2)”

systems that know and understand and think and learn

A decade or so ago, we were debating how to educate Paul Allen’s artificial intelligence in a meeting at Vulcan headquarters in Seattle with researchers from IBM, Cycorp, SRI, and other places.

We were talking about how to “engineer knowledge” from textbooks into formal systems like Cyc or Vulcan’s SILK inference engine (which we were developing at the time). Although some progress had been made in prior years, the onus of acquiring knowledge using SRI’s Aura remained too high and the reasoning capabilities that resulted from Aura, which targeted University of Texas’ Knowledge Machine, were too limited to achieve Paul’s objective of a Digital Aristotle. Unfortunately, this failure ultimately led to the end of Project Halo and the beginning of the Aristo project under Oren Etzioni’s leadership at the Allen Institute for Artificial Intelligence.

At that meeting, I brought up the idea of simply translating English into logic, as my former product called “Authorete” did. (We renamed it before Haley Systems was acquired by Oracle, prior to the meeting.)

This is a must-watch video from the Allen Institute for AI for anyone seriously interested in artificial intelligence. It’s 70 minutes long, but worth it. Some of the highlights from my perspective are:

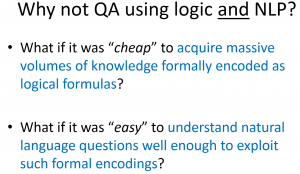

The astute viewer and blog reader will recognize this slide as discussed by Oren Etzioni here.

Thanks to John Sowa‘s comment on LinkedIn for this link which, although slightly dated, contains the following:

In August, I had the chance to speak with Peter Norvig, Director of Google Research, and asked him if he thought that techniques like deep learning could ever solve complicated tasks that are more characteristic of human intelligence, like understanding stories, which is something Norvig used to work on in the nineteen-eighties. Back then, Norvig had written a brilliant review of the previous work on getting machines to understand stories, and fully endorsed an approach that built on classical “symbol-manipulation” techniques. Norvig’s group is now working with Hinton, and Norvig is clearly very interested in seeing what Hinton could come up with. But even Norvig didn’t see how you could build a machine that could understand stories using deep learning alone.

Other quotes along the same lines come from Oren Etzioni in Looking to the Future of Data Science:

This is a significant statement from one of the best people in fact extraction on the planet!

As you know from elsewhere on this blog, I’ve been involved with the precursor to the AIAI (Vulcan’s Project Halo) and am a fan of Watson. But Watson is the best example of what Big Data, Deep Learning, fact extraction, and textual entailment aren’t even close to:

Sure, you can rationalize these things and hope that someday the machine will not need reliable knowledge (or that it will induce enough information with enough certainty). IBM does a lot of this (e.g., see the source of the quotes above). That day may come, but it will happen a lot sooner with curated knowledge.

Knewton is an interesting company providing a recommendation service for adaptive learning applications. In a recent post, Jonathon Goldman describes an algorithmic approach to generating questions. The approach focuses on improving the manual authoring of test questions (known in the educational realm as “assessment items”). It references work at Microsoft Research on the problem of synthesizing questions for an algebra learning game.

We agree that more automated generation of questions can enrich learning significantly, as has been demonstrated in the Inquire prototype. For information on a better, more broadly applicable approach, see the slides beginning around page 16 in Peter Clark’s invited talk.

What we think is most promising, however, is understanding the reasoning and cognitive skill required to answer questions (i.e., Deep QA). The most automated way to support this is with machine understanding of the content sufficient to answer the questions by proving answers (i.e., multiple choices) right or wrong, as we discuss in this post and this presentation.

Oren Etzioni is a marvelous choice to lead the Allen Institute for AI (aka AI2). The NL/ML path is the right path for scaling up the deep knowledge that Paul Allen’s vision of a Digital Aristotle requires. You can read more about it below and here’s more background on the change in direction and on some evidence that the path holds great promise.

Over the last two years, machines have demonstrated their ability to read, listen, and understand English well enough to beat the best at Jeopardy!, answer questions via iPhone, and earn college credit on college advanced placement exams. Today, Google, Microsoft and others are rushing to respond to IBM and Apple with ever more competent artificially intelligent systems that answer questions and support decisions.

At the SemTech conference last week, a few companies asked me how to respond to IBM’s Watson given my involvement with rapid knowledge acquisition for deep question answering at Vulcan. My answer varies with whether there is any subject matter focus, but essentially involves extending their approach with deeper knowledge and more emphasis on logical in addition to textual entailment.

Today, in a discussion on the LinkedIn NLP group, there was some interest in finding more technical details about Watson. A year ago, IBM published the most technical details to date about Watson in the IBM Journal of Research and Development. Most of those journal articles are available for free on the web. For convenience, here are my bookmarks to them.

Continue reading “Deep question answering: Watson vs. Aristotle”

Last week, I attended the FIBO (Financial Industry Business Ontology) Technology Summit along with 60 others.

The effort is building an ontology of fundamental concepts in financial services. As part of the effort, there is a surprisingly clear understanding that, for the resulting representation to be useful, it needs logical and rule-based functionality that does not fit within OWL (the web ontology language standard) or SWRL (a simple semantic web rule language). In discussing how to meet the reasoning and information processing needs of consumers of FIBO, there was surprisingly rapid agreement that the functionality of Flora-2 was most promising for use in defining and exemplifying the use of the emerging standard. Endorsers included Benjamin Grosof and me, along with a team from SRI International. Others had a number of excellent questions, such as those concerning open- vs. closed-world semantics, which are addressed by support for the well-founded semantics in Flora-2 and XSB.

Thanks go to Vulcan for making the improvements to Flora and XSB that have been developed in Project Halo available to all!

In Vulcan’s Project Halo, we developed means of extracting the structure of logical proofs that answer advanced placement (AP) questions in biology. For example, the following shows a proof that separation of chromatids occurs during prophase.

This explanation was generated using capabilities of SILK built on those described in A SILK Graphical UI for Defeasible Reasoning, with a Biology Causal Process Example. That paper gives more details on how the proof structures of questions answered in Project Sherlock are available for enhancing the suggested questions of Inquire (which is described in this post, which includes further references). SILK justifications are produced using a number of higher-order axioms expressed using Flora‘s higher-order logic syntax, HiLog. These meta rules determine which logical axioms can or do result in a literal. (A literal is a positive or negative atomic formula, such as a fact, which can be true, false, or unknown. Something is unknown if it is not proven as true or false. For more details, you can read about the well-founded semantics, which is supported by XSB. Flora is implemented in XSB.)
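To make the true/false/unknown distinction concrete, here is a minimal Python sketch of the well-founded semantics for a tiny propositional rule set, computed with the standard alternating-fixpoint construction. It is only an illustration and is not how SILK, Flora, or XSB are implemented; the rule encoding, the function names `least_model` and `well_founded`, and the example atoms are all hypothetical.

```python
# Each rule is (head, positive_body, negated_body), e.g. q :- p, not r.
# This encoding is an assumption made for illustration only.

def least_model(rules, assumed_true):
    """Derive atoms bottom-up; 'not x' holds only if x is absent from assumed_true."""
    derived = set()
    changed = True
    while changed:
        changed = False
        for head, pos, neg in rules:
            if (head not in derived
                    and set(pos) <= derived
                    and not (set(neg) & assumed_true)):
                derived.add(head)
                changed = True
    return derived

def well_founded(rules):
    """Classify every atom as 'true', 'false', or 'unknown' (alternating fixpoint)."""
    atoms = {h for h, _, _ in rules} | {a for _, p, n in rules for a in (*p, *n)}
    true = set()
    while True:
        possible = least_model(rules, true)      # overestimate: atoms not yet refuted
        new_true = least_model(rules, possible)  # underestimate: atoms surely true
        if new_true == true:
            break
        true = new_true
    return {a: "true" if a in true else "unknown" if a in possible else "false"
            for a in atoms}

rules = [
    ("p", [], []),         # p is a fact
    ("q", ["p"], ["r"]),   # q :- p, not r.  (r has no rules, so it is false)
    ("a", [], ["b"]),      # a :- not b.
    ("b", [], ["a"]),      # b :- not a.     (a and b depend on each other's absence)
]
result = well_founded(rules)
for atom in sorted(result):
    print(atom, result[atom])
```

Here `p` and `q` come out true, `r` is false because nothing can derive it, and `a` and `b` are unknown because each is supported only by the absence of the other.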

Well, here’s a use case:

Continue reading “Pedagogical applications of proofs of answers to questions”