We are using statistical techniques to increase the automation of logical and semantic disambiguation, but nothing is easy with natural language.

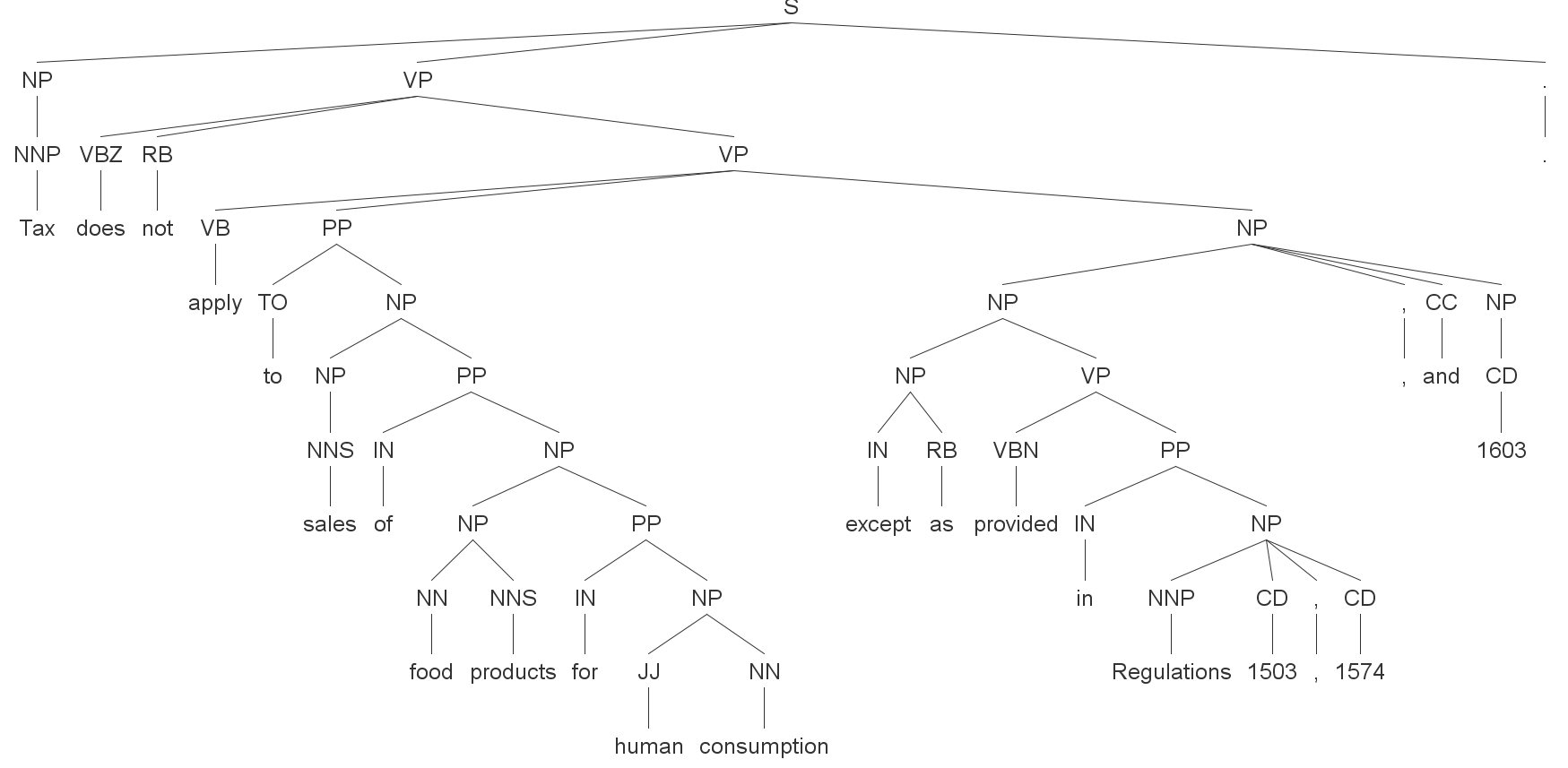

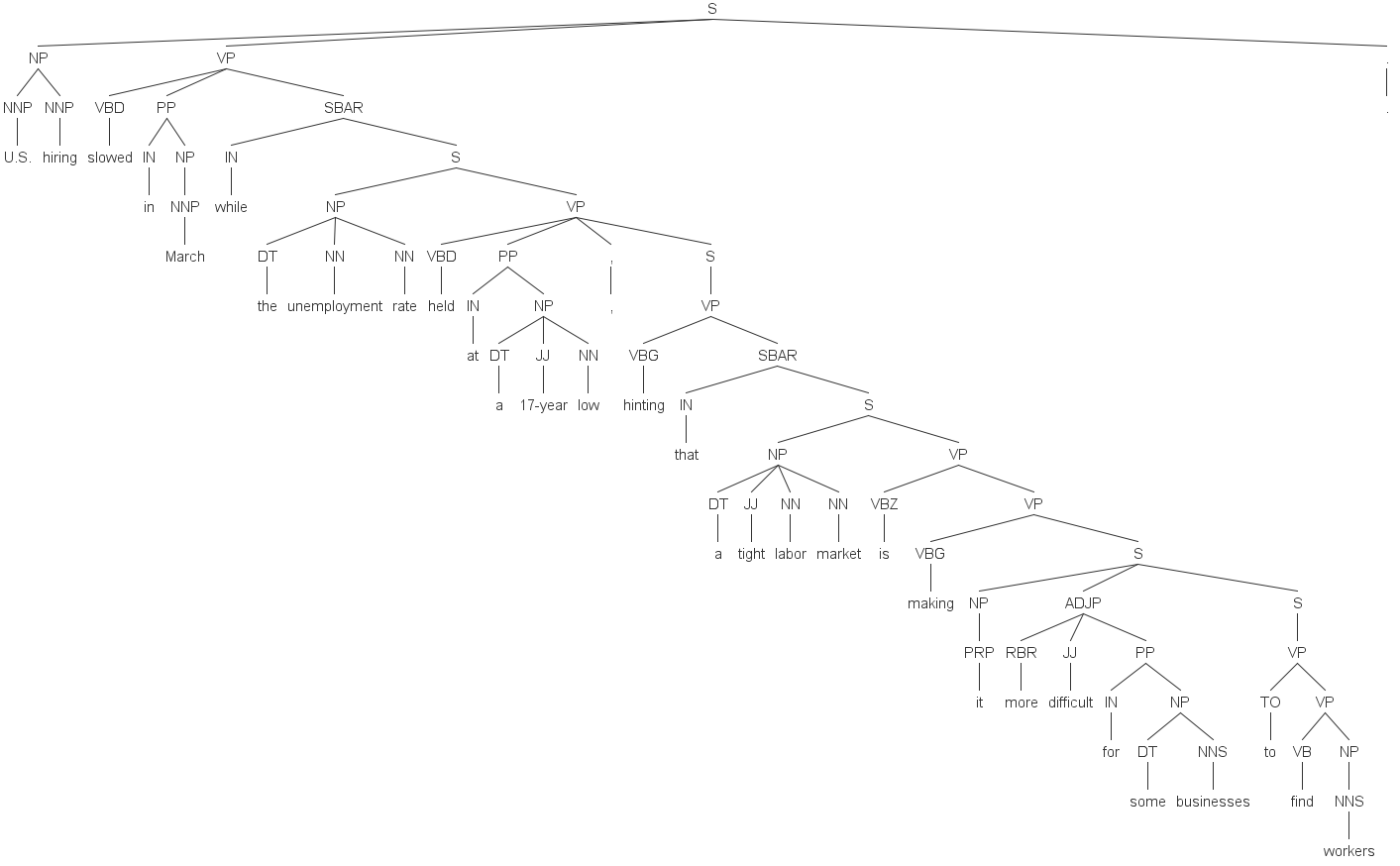

The images above show the Stanford Parser (the probabilistic context-free grammar version) applied to a couple of sentences. There is nothing wrong with the Stanford Parser! It’s state of the art and worthy of respect for what it does well.

Parses like these just go to show how far NLP still is from understanding.

Our software can’t always get the right results without some help either. Nobody’s can.

That’s why a cognitive computing approach is needed when accuracy is imperative.