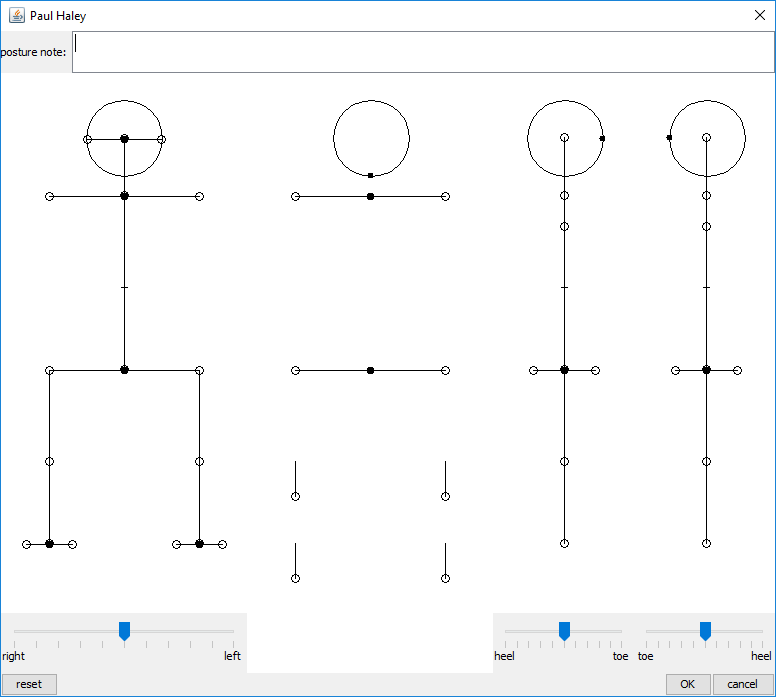

Over the last 25 years we have developed two generations of AI systems for physical therapy. The first predates the emergence of the Internet, when Windows was 16 bits. There were no digital cameras, either. So physical therapists would take Polaroid pictures (anterior, left lateral, and right lateral) or simply eyeball a patient and enter their posture into the computer using a diagram like the following:

Therapists could also enter left-to-right balance and front-to-back weight bearing for each foot. In the anterior view you can see that pronation or supination could also be input. In the superior view, you can see that inverted or everted feet and knees were captured. In the lateral views you could also input shoulder protraction. We’ll skip over how spinal curvatures were considered here.

Today we use vision and a balance board and/or a foot pressure mat. First let’s go over the basics and then dive into the vision…

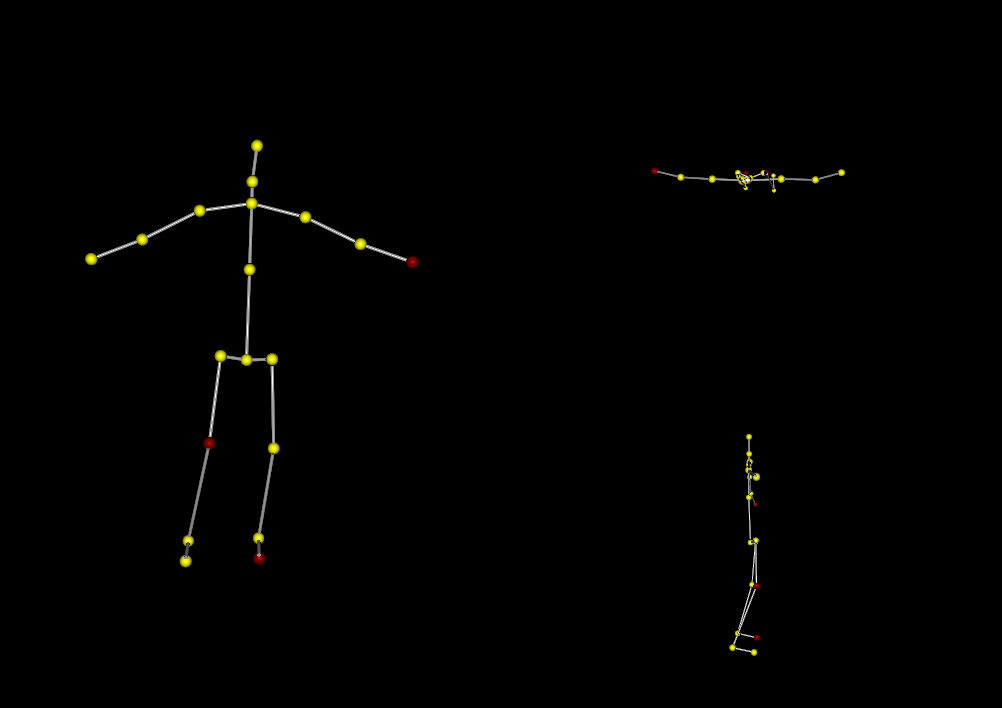

The following shows the estimated locations of a person’s joints from three of the four perspectives diagrammed above. Practically speaking, this is the same information rendered a different way, but it’s a lot easier to capture and the subjectivity is gone. Polaroids are long gone, but the vision system also eliminates loading of files from digital cameras.

Getting the skeleton to be accurate enough for clinical purposes was not trivial. The camera has to be carefully set up on a tripod with the right pitch and no roll, for example. Easier said than done across dozens of clinics! The software helps, though.

The Microsoft software estimates joint locations by matching a random forest of decision trees against the depth image it perceives. Rather than going into the details, let me just say that the Kinect was effectively trained to locate joints better in two dimensions than three. And the approach taken will never be accurate with baggy clothing.

The Kinect is a reliable device, but it is also cumbersome and power hungry. More importantly, from the standpoint of perception, it does not have the resolution or accuracy of devices such as Intel’s RealSense camera, which is also cheaper and doesn’t require a power adapter. Also, Microsoft is no longer manufacturing the Kinect.

To use the RealSense we had to find or write our own skeleton tracking / joint position estimation software. After an extensive review of the literature and evaluation of many pieces of software, I decided to write it as described further below. First, a few comments. Convolutional neural nets (CNNs) do a marvelous job in 2 dimensions using only image data (no depth). We will integrate their estimates with our approach and work on 3-dimensional versions that incorporate depth information, whether from the RealSense or from stereo cameras without depth (the RealSense is a stereo camera that uses an infrared laser to “help”).

Skipping over a lot of research, we decided to focus on two things: a simple geometric model of an articulating body and a much more detailed polygonal mesh covering a model of the body. The geometric model consists of spheres and sections of cones or cylinders that articulate at joints. The mesh model has thousands of polygons, each with a surface normal that is oriented according to a linear model. The linear equation is based on principal components of how the mesh best fits a number of different scans of people in various but mostly standing poses. The geometric model is astonishingly fast and useful for getting pose information and joint positions. The mesh model is slower but gives more accurate joint positions (not to mention body parameters).
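To give a flavor of the geometric model, here is a minimal sketch of a single limb, under some simplifying assumptions of mine: the limb is a constant-radius cylinder with rounded ends (a capsule) rather than a tapered cone section, and the function name, joint coordinates, and radius are all hypothetical. It measures how far a 3-D point from the depth camera lies from the limb's surface, which is the kind of quantity a fit would try to drive toward zero.

```python
import math


def point_to_limb_distance(point, joint_a, joint_b, radius):
    """Signed distance from a 3-D point to a capsule-shaped limb.

    The limb is modeled as all points within `radius` of the bone
    segment joint_a -> joint_b (a cylinder with spherical ends).
    Negative values mean the point lies inside the limb.
    """
    bone = [b - a for a, b in zip(joint_a, joint_b)]
    to_point = [p - a for a, p in zip(joint_a, point)]
    bone_len_sq = sum(c * c for c in bone)
    if bone_len_sq == 0.0:
        t = 0.0  # degenerate bone: the limb collapses to a sphere
    else:
        # Project the point onto the bone and clamp to the segment.
        t = sum(x * y for x, y in zip(to_point, bone)) / bone_len_sq
        t = max(0.0, min(1.0, t))
    closest = [a + t * c for a, c in zip(joint_a, bone)]
    return math.dist(point, closest) - radius


# Hypothetical forearm: elbow at the origin, wrist 25 cm below, 4 cm radius.
elbow, wrist = (0.0, 0.0, 0.0), (0.0, -0.25, 0.0)
gap = point_to_limb_distance((0.10, -0.10, 0.0), elbow, wrist, 0.04)
```

Summing the squares of such distances over all depth points and all limbs gives one plausible error for a whole articulated body; our actual error terms may differ.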

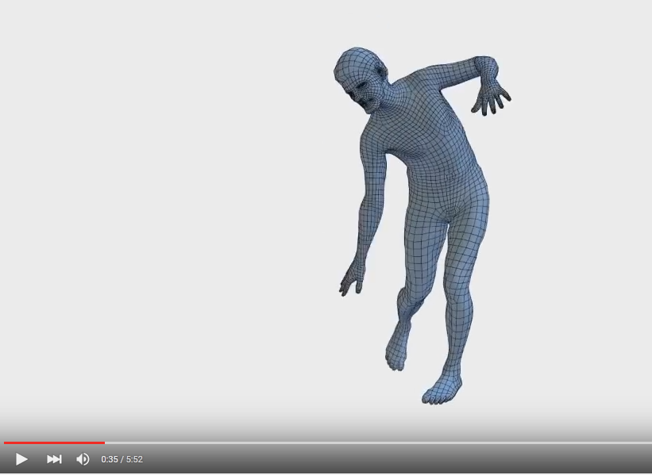

The following picture may give you an idea of how such models “fit” various body types:

Each of these articulating approaches performs more robustly than the Microsoft approach for loosely clothed bodies.

The articulating geometric model is simple enough that I won’t go into it, so let’s talk a little more about the body mesh approach.

There are a few ways to go about this. You can obtain high resolution scans of people from various places or you can obtain your own using Kinect Fusion. Next, you need a body mesh with appropriate details where you care to have them. You can take your point cloud data and get a variety of such meshes or you can use a mesh such as you might find in animation software. Then you fit the mesh to each of your scans. Then you compute at least a dozen to perhaps a hundred principal components.
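The last step can be sketched in a few lines. This is a toy illustration and not our pipeline: it assumes every fitted mesh has been flattened into a list of vertex coordinates of the same length, and it extracts only the first principal component using power iteration. A real system would use an SVD routine from a numerical library and keep many components. All function names here are hypothetical.

```python
import math


def leading_component(scans, iterations=100):
    """Return (mean_shape, direction) for a list of flattened fitted meshes.

    `direction` is a unit vector along the axis of greatest variance,
    found by power iteration on the (unnormalized) covariance matrix.
    The start vector must not be orthogonal to that axis.
    """
    count = len(scans)
    mean_shape = [sum(column) / count for column in zip(*scans)]
    centered = [[x - m for x, m in zip(scan, mean_shape)] for scan in scans]
    size = len(mean_shape)
    direction = [1.0 / math.sqrt(size)] * size  # arbitrary unit start vector
    for _ in range(iterations):
        # Multiply by X^T X without forming it: scores = X v, product = X^T scores.
        scores = [sum(x * d for x, d in zip(row, direction)) for row in centered]
        product = [sum(s * row[j] for s, row in zip(scores, centered))
                   for j in range(size)]
        norm = math.sqrt(sum(p * p for p in product))
        if norm == 0.0:
            break  # no variance along the current direction
        direction = [p / norm for p in product]
    return mean_shape, direction


def body_from_parameters(mean_shape, components, parameters):
    """Linear body model: mean shape plus a weighted sum of components."""
    shape = list(mean_shape)
    for component, weight in zip(components, parameters):
        shape = [s + weight * c for s, c in zip(shape, component)]
    return shape


# Five made-up "scans" that differ from a base shape along a single axis.
base, axis = [1.0, 2.0, 3.0, 4.0], [0.6, 0.8, 0.0, 0.0]
scans = [[b + c * a for b, a in zip(base, axis)] for c in (-2, -1, 0, 1, 2)]
mean_shape, direction = leading_component(scans)
new_body = body_from_parameters(mean_shape, [direction], [1.0])
```

The second function is the "linear model" in miniature: once the components are fixed, a body is described by just a handful of weights, and those weights are the body type parameters to be estimated.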

Once you have the principal components you have an optimization problem. The basic idea is to find the body model parameters that best fit the depth map you obtain from the Kinect or RealSense or other stereoscopic camera setup.

There are many details to be considered, but the first is pose. For example, if you can assume the person is standing facing the camera, it’s a lot easier to adjust the parameters to the data. Pose includes joint angles and bone lengths, which are most of the parameters in an articulating geometric model. So, the first thing you can do is use the simple model to estimate those parameters or fit the body mesh while only optimizing on the pose parameters (holding the body type parameters constant).

Once you have the articulating geometry fit, you can continue to estimate the body model and, if you want the best results, repeat until changes to the parameters quiesce (among other cutoff criteria). The estimation itself seeks to minimize error. One way of characterizing the error minimization problem is least mean squared error where the error is the difference between the depth map perceived and the surface of the body model represented by the mesh. Each iteration seeks to reduce the error by adjusting the parameters. This is typically done using some form of gradient descent. We use a popular, open-source machine learning package to do this job.
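The loop described above can be sketched with a deliberately tiny stand-in for the body model. The assumptions here are mine: the "pose" is a single number (how far the person stands from the camera), the "body type" is a single scale applied to a fixed surface profile, and the gradients are written out by hand instead of coming from a machine learning package. Every name and number is hypothetical.

```python
def mean_squared_error(observed, profile, offset, scale):
    """Mean squared difference between observed and modeled depths."""
    errors = [offset + scale * p - d for p, d in zip(profile, observed)]
    return sum(e * e for e in errors) / len(errors)


def fit_alternating(observed, profile, rate=0.3, tolerance=1e-10, max_rounds=1000):
    """Alternate gradient steps on pose (offset) and body type (scale).

    Modeled depth at sample i is offset + scale * profile[i]. Each round
    takes one step on the pose with the shape held constant, then one step
    on the shape with the pose held constant, and stops once the
    parameters quiesce.
    """
    count = len(observed)
    offset, scale = 0.0, 1.0  # crude initial guess
    for _ in range(max_rounds):
        # Pose step: d(MSE)/d(offset) = 2 * mean(residual).
        residuals = [offset + scale * p - d for p, d in zip(profile, observed)]
        new_offset = offset - rate * 2.0 * sum(residuals) / count
        # Shape step: d(MSE)/d(scale) = 2 * mean(residual * profile).
        residuals = [new_offset + scale * p - d for p, d in zip(profile, observed)]
        gradient = 2.0 * sum(r * p for r, p in zip(residuals, profile)) / count
        new_scale = scale - rate * gradient
        change = abs(new_offset - offset) + abs(new_scale - scale)
        offset, scale = new_offset, new_scale
        if change < tolerance:
            break
    return offset, scale


# Synthetic depth samples generated from a known pose and body type.
profile = [0.0, 0.5, 1.0, 1.5, 2.0]
observed = [2.0 + 0.5 * p for p in profile]
offset, scale = fit_alternating(observed, profile)
```

With a real mesh the parameter vector is much larger and the error surface is not this well behaved, so the step size and the cutoff criteria need more care than this sketch suggests.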

Here’s an example of how the approach can perform, although we do not take exactly the same approach:

I would like to go into some of the other aspects, such as how we learn the efficacy of exercises for various postures, but that’s all for now!
