Marketing at Coherent Knowledge suggests this Dilbert for those with compliance challenges!

Neat vs. Scruffy and Watson

Recently, John Sowa has commented, on LinkedIn or in correspondence with some of us at Coherent Knowledge Systems, on the old adage due to Schank concerning the Neats vs. the Scruffies. The Neats want nice formal logics as the basis of artificial intelligence. This includes anyone who prefers classical logic (e.g., Common Logic, RIF-BLD, or SBVR) or standard ontologies (e.g., OWL-DL) for representing knowledge and reasoning with it. The Scruffies may use well-defined technology, but are not constrained by it. They’ll do whatever they think works now, whether or not it is a good long-term solution and despite its shortcomings, as long as it achieves immediate objectives.

Watson is scruffy. It doesn’t try to understand or formally represent knowledge. It combines a lot of effective technologies into an evidentiary framework that allows it to effectively “guess”.

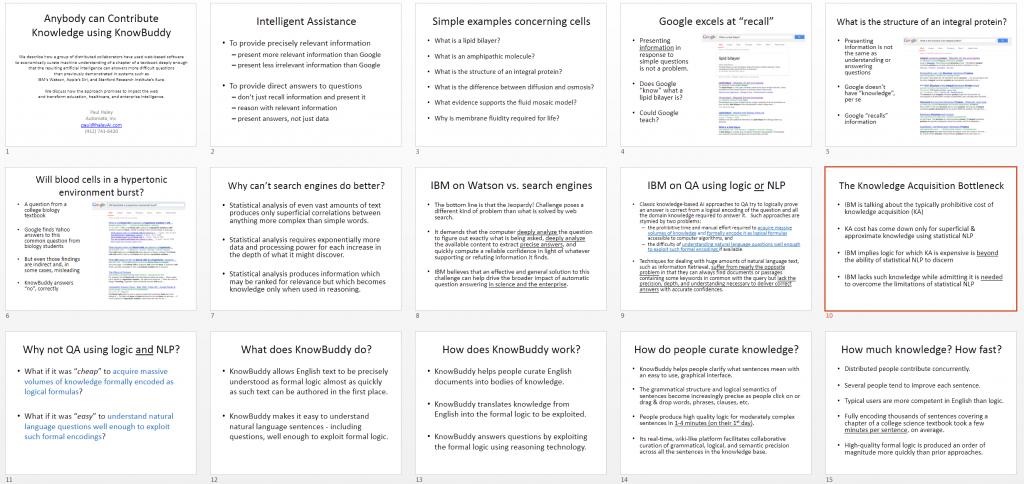

Today, in response to continued discussion in the Natural Language Processing group on LinkedIn under the topic “This is Watson”, I’m posting the following presentation on Project Sherlock and the Linguist vs. Google and IBM.

Essentially, the neat approach is more viable today than ever. So, chalk one up for the neats, including Dr. Sowa and Menno Mofait’s comment in that discussion.

During a presentation at CMU after winning the game show, IBM admitted that in order to get the last leg of improvement needed to win Jeopardy!, they needed to do some “neat” ontological knowledge acquisition, too!

Confessions of a production rule vendor (part 1)

If you are using one of the more popular rules engines, chances are you can blame me. I popularized the technology of forward-chaining production rules based on the Rete Algorithm. Others have certainly contributed; my path is the one that led to open-source implementations and many commercial products, including those of IBM, Oracle, SAP, TIBCO, Red Hat, and too many others to mention (e.g., see this).

Today, I want to make clear that the future prospects for production rule technology are diminishing. My objective here is to explain why most rule-based technologies are no good and why some are much better. Although production rule technology is much better than most rule-based technologies, I hope to also make clear that in the age of IBM’s Watson, Google’s Brain, and the semantic web, production rule technology is inadequate.

They are not created equal.

Rules have become so pervasive in the software business that vendors of all types of software say they have them. Consider, for example, that even Microsoft Outlook has rules!

Continue reading “Confessions of a production rule vendor (part 1)”

Answers to Common Questions About AI

Well this is a blast from the past… I was working on “the next post” about how Watson, Google, and the semantic web threaten the major BRMS systems when I found myself rewriting that what matters about Rete Algorithm-based production rule systems is that the order of the rules does not matter. It occurred to me that I must have written that a dozen times or more in my career so I did a Google and found this old paper has been on-line at the Free Library On-Line since 2006! I recall writing this in the early nineties and the owner of an AI magazine telling me he carried it with him for the better part of a year (and printing it in his magazine, I think). Some of you may enjoy reading it again!

Best,

Paul

1. WHAT IS AN EXPERT SYSTEM?

An expert system is a program that includes expertise.

2. WHAT IS EXPERTISE?

Expertise is knowledge that enables an individual, group or program to perform an intellectual task better than that task can be performed without the expertise.

3. HOW IS EXPERTISE ENCODED WITHIN A PROGRAM?

Expertise, being knowledge, falls into a few general categories.

Some knowledge is declarative knowledge which doesn’t do anything but which represents truth or state. Such knowledge can be stored in a standard database.

Some knowledge is algorithmic and can therefore be flow charted. Such knowledge can be expressed as a routine in any procedural programming language. Not everything a person or organization knows can be easily translated into data structures and flow charts, however.
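As a rough illustration of these two categories, here is a minimal Python sketch. The table name, the function name, and the choice of boiling points as sample facts are all assumptions made up for this example (the values are approximate, at standard pressure); nothing here comes from the original paper.

```python
import sqlite3

# Declarative knowledge: facts that represent state and do nothing on their own.
# Stored here in an in-memory SQLite database (hypothetical table and values).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE boiling_point (substance TEXT PRIMARY KEY, celsius REAL)")
conn.executemany(
    "INSERT INTO boiling_point VALUES (?, ?)",
    [("water", 100.0), ("ethanol", 78.4)],
)


def is_boiling(substance, temperature_c):
    """Algorithmic knowledge: a small flow chart written as a procedure.

    Step 1: look up the stored fact. Step 2: compare and answer.
    """
    row = conn.execute(
        "SELECT celsius FROM boiling_point WHERE substance = ?", (substance,)
    ).fetchone()
    if row is None:
        raise KeyError(f"no boiling point recorded for {substance!r}")
    return temperature_c >= row[0]
```

Calling `is_boiling("water", 120.0)` consults the stored fact and then follows the two-step procedure. Knowledge that fits neither mold is what the rest of the discussion is about.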

4. SO FLOW-CHARTABLE ALGORITHMS SHOULD BE CODED PROCEDURALLY?

Yes, if the flow chart is actually produced, if it is not extraordinarily complex, and if there is no reason to believe that the flow chart is incorrect or incomplete. After all, procedural languages and flow charts are isomorphic: anything that can be expressed in one can be expressed using the other. However, if it is hard to produce a flow chart, it will be even more difficult to produce a working program using a procedural language.

Deep question answering: Watson vs. Aristotle

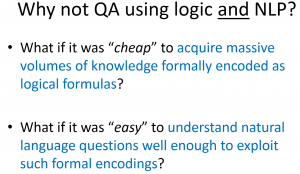

At the SemTech conference last week, a few companies asked me how to respond to IBM’s Watson given my involvement with rapid knowledge acquisition for deep question answering at Vulcan. My answer varies with whether there is any subject matter focus, but essentially involves extending their approach with deeper knowledge and more emphasis on logical in addition to textual entailment.

Today, in a discussion on the LinkedIn NLP group, there was some interest in finding more technical details about Watson. A year ago, IBM published the most technical details to date about Watson in the IBM Journal of Research and Development. Most of those journal articles are available for free on the web. For convenience, here are my bookmarks to them.

- Question analysis: How Watson reads a clue

- Deep parsing in Watson

Good technical details on the two parsing approaches taken. Using deep parsing, such as we have (e.g., using the ERG in Project Sherlock) and disambiguation is a viable approach for the background knowledge that IBM dismisses too quickly, however (see below). Note that in order to train NLP systems in new domains, you have to go through the same process for thousands of sentences, so disambiguation technology as in the Linguist is appropriate even if proofs are based more on textual entailment than logical deduction.

- Textual resource acquisition and engineering

- Automatic knowledge extraction from documents

- Finding needles in the haystack: Search and candidate generation

- Typing candidate answers using type coercion

- Textual evidence gathering and analysis

- Relation extraction and scoring in DeepQA

- Structured data and inference in DeepQA

IBM takes a hard line against deep knowledge here. They have the upper hand in the argument due to their impressive results, but more precise knowledge would only improve their performance. You can find more on this debate in the Deep QA FAQ and this presentation, which includes the following slide:

Continue reading “Deep question answering: Watson vs. Aristotle”

Financial industry to define standards using defeasible logic and semantic web technologies

Last week, I attended the FIBO (Financial Industry Business Ontology) Technology Summit along with 60 others.

The effort is building an ontology of fundamental concepts in financial services. As part of the effort, there is a surprisingly clear understanding that for the resulting representation to be useful, there is a need for logical and rule-based functionality that does not fit within OWL (the web ontology language standard) or SWRL (a simple semantic web rule language). In discussing how to meet the reasoning and information processing needs of consumers of FIBO, there was surprisingly rapid agreement that the functionality of Flora-2 was most promising for use in defining and exemplifying the use of the emerging standard. Endorsers included Benjamin Grosof and me, along with a team from SRI International. Others had a number of excellent questions, such as concerning open- vs. closed-world semantics, which are addressed by support for the well-founded semantics in Flora-2 and XSB.

Thanks go to Vulcan for making the improvements to Flora and XSB that have been developed in Project Halo available to all!

Pedagogical applications of proofs of answers to questions

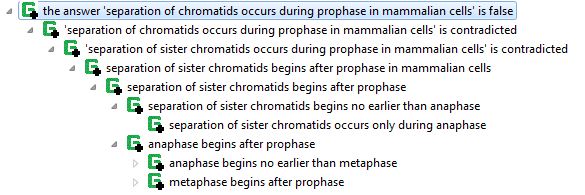

In Vulcan’s Project Halo, we developed means of extracting the structure of logical proofs that answer advanced placement (AP) questions in biology. For example, the following shows a proof that separation of chromatids occurs during prophase.

This explanation was generated using capabilities of SILK built on those described in A SILK Graphical UI for Defeasible Reasoning, with a Biology Causal Process Example. That paper gives more details on how the proof structures of questions answered in Project Sherlock are available for enhancing the suggested questions of Inquire (which is described in this post, which includes further references). SILK justifications are produced using a number of higher-order axioms expressed using Flora’s higher-order logic syntax, HiLog. These meta rules determine which logical axioms can or do result in a literal. (A literal is a positive or negative atomic formula, such as a fact, which can be true, false, or unknown. Something is unknown if it is not proven as true or false. For more details, you can read about the well-founded semantics, which is supported by XSB. Flora is implemented in XSB.)
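To make the three truth values concrete, here is a minimal Python sketch that computes the well-founded model of a tiny propositional rule set using the textbook alternating-fixpoint construction. This is an illustration only: it is not how XSB or Flora compute answers, and the rule format, function names, and example atoms are all assumptions invented for this sketch.

```python
def least_model(rules, assumed_true):
    """Atoms derivable when 'not a' succeeds exactly if a is not in assumed_true."""
    derived = set()
    changed = True
    while changed:
        changed = False
        for head, positive, negative in rules:
            if head in derived:
                continue
            body_holds = all(p in derived for p in positive)
            blocked = any(n in assumed_true for n in negative)
            if body_holds and not blocked:
                derived.add(head)
                changed = True
    return derived


def well_founded(rules):
    """Map every atom to 'true', 'false', or 'unknown' (alternating fixpoint)."""
    atoms = set()
    for head, positive, negative in rules:
        atoms |= {head, *positive, *negative}
    true = set()
    while True:
        possible = least_model(rules, true)      # overestimate: not provably false
        new_true = least_model(rules, possible)  # underestimate: provably true
        if new_true == true:
            break
        true = new_true
    return {
        atom: "true" if atom in true else "unknown" if atom in possible else "false"
        for atom in atoms
    }


# Hypothetical rules, each written as (head, positive body, negated body).
EXAMPLE_RULES = [
    ("bird", [], []),                # a fact
    ("flies", ["bird"], ["penguin"]),  # flies if bird and not penguin
    ("p", [], ["q"]),                # p if not q
    ("q", [], ["p"]),                # q if not p
]

model = well_founded(EXAMPLE_RULES)
```

Here `bird` and `flies` come out true, `penguin` comes out false because nothing can derive it, and `p` and `q` come out unknown because each depends on the negation of the other, so neither can be proven true or false.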

Now how does all this relate to pedagogy in future derivatives of electronic learning software or textbooks, such as Inquire?

Well, here’s a use case: Continue reading “Pedagogical applications of proofs of answers to questions”

Acquiring Rich Logical Knowledge from Text (Semantic Technology 2013)

As noted in prior posts about Project Sherlock, we have acquired knowledge from a biology textbook to build the business case for applications like Inquire. We reported our results at SemTech recently. The slides are available here.

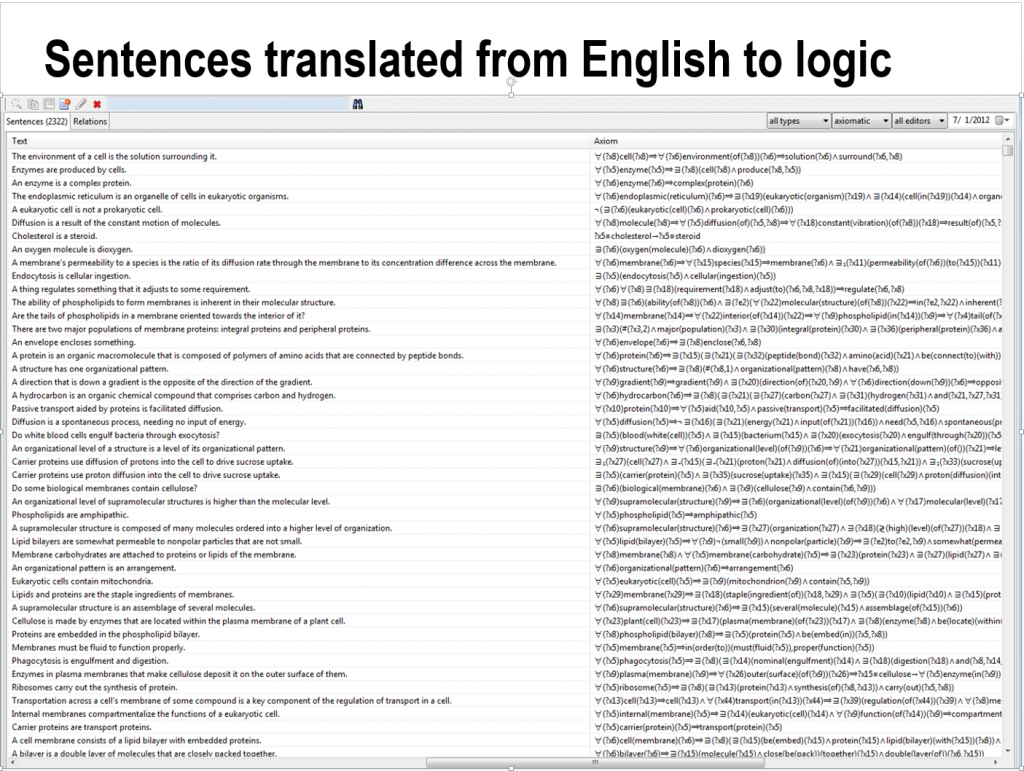

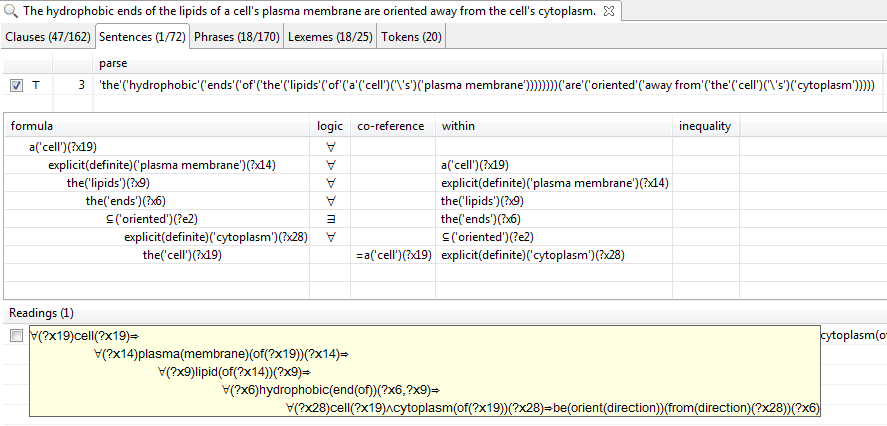

Translating English into Logic using the Linguist

Now that the patent filings are done, we can discuss and show more about the Linguist…

The following link is a video that shows a sentence from Project Sherlock being translated from English into first-order logic using the patent-pending Linguist™ software.

This video was recorded in October, 2012. More recent versions of the Linguist can render the logic in more ways, such as shown below:

Background for our Semantic Technology 2013 presentation

In the spring of 2012, Vulcan engaged Automata for a knowledge acquisition (KA) experiment. This article provides background on the context of that experiment and what the results portend for artificial intelligence applications, especially in the areas of education. Vulcan presented some of the award-winning work referenced here at an AI conference, including a demonstration of the electronic textbook discussed below. There is a video of that presentation here. The introductory remarks are interesting but not pertinent to this article.

Background on Vulcan’s Project Halo

From 2002 to 2004, Vulcan developed a Halo Pilot that could correctly answer between 30% and 50% of the questions on advanced placement (AP) tests in chemistry. The competing systems relied on sophisticated approaches to formal knowledge representation and expert knowledge engineering. Of three teams, Cycorp fared the worst and SRI fared the best in this competition. SRI’s system performed at the level of scoring a 3 on the AP, which corresponds to earning course credit at many universities. The consensus view at that time was that achieving a score of 4 on the AP was feasible with limited additional effort. However, the cost per page for this level of performance was roughly $10,000, which needed to be reduced significantly before Vulcan’s objective of a Digital Aristotle could be considered viable.

Continue reading “Background for our Semantic Technology 2013 presentation”