Educational technology for personalized or adaptive learning is based on various types of pedagogical models.

Google provides the following info-box and link concerning pedagogical models:

- As described in this paper, pedagogical models are cognitive models or theoretical constructs derived from knowledge acquisition models or views about cognition and knowledge, which form the basis for learning theory.

- Pedagogical models for E-Learning: A theory-based design framework

Many companies have different names for their models, including:

- Knewton: Knowledge Graph (partially described in How do Knewton recommendations work in Pearson MyLab)

- Khan Academy: Knowledge Map

- Declara: Cognitive Graph

The term “knowledge graph” is particularly common, and Google uses it as well.

There are some serious problems with these names and some varying limitations in their pedagogical efficacy. There is nothing cognitive about Declara’s graph, for example. Like the others, it may organize “concepts” (i.e., nodes) by certain relationships (i.e., links) that pertain to learning, but none of these graphs purports to represent knowledge other than superficially and for limited purposes.

- Each of Google’s, Knewton’s, and Declara’s graphs is far from sufficient to represent the knowledge and cognitive skills expected of a masterful student.

- Each of them is also far from sufficient to represent the knowledge of learning objectives and instructional techniques expected of a proficient instructor.

Nonetheless, such graphs are critical to active e-learning technology, even if they fall short of our ambitious hope to dramatically improve learning and learning outcomes.

The most critical ingredients of these so-called “knowledge” or “cognitive” graphs include the following:

- learning objectives

- educational resources, including instructional and formative or summative assessment items

- relationships between educational resources and learning objectives (i.e., what they instruct and/or assess)

- relationships between learning objectives (e.g., dependencies such as prerequisites)
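
The four ingredients above can be sketched as a small data structure. This is a minimal illustration and not any vendor's actual model: the class name `PedagogicalGraph`, its methods, the resource ids, and the prerequisite edges are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class PedagogicalGraph:
    """Hypothetical container for objectives, resources, and their links."""
    # learning objective -> set of prerequisite learning objectives
    prerequisites: dict = field(default_factory=dict)
    # resource id -> {"instructs": set of objectives, "assesses": set of objectives}
    alignments: dict = field(default_factory=dict)

    def add_objective(self, objective, requires=()):
        self.prerequisites.setdefault(objective, set()).update(requires)
        for prerequisite in requires:
            self.prerequisites.setdefault(prerequisite, set())

    def align(self, resource, objective, role):
        roles = self.alignments.setdefault(
            resource, {"instructs": set(), "assesses": set()}
        )
        roles[role].add(objective)

    def study_order(self):
        # Prerequisites always come before the objectives that need them.
        return list(TopologicalSorter(self.prerequisites).static_order())

    def ready_to_learn(self, mastered):
        mastered = set(mastered)
        return sorted(
            objective
            for objective, required in self.prerequisites.items()
            if objective not in mastered and required <= mastered
        )

    def resources_for(self, objective, role):
        return sorted(
            resource
            for resource, roles in self.alignments.items()
            if objective in roles[role]
        )


# Illustrative data only; these edges and resource ids are assumed.
graph = PedagogicalGraph()
graph.add_objective("adding whole numbers")
graph.add_objective("multiplying whole numbers", requires=["adding whole numbers"])
graph.add_objective("dividing whole numbers", requires=["multiplying whole numbers"])
graph.align("video-3", "multiplying whole numbers", "instructs")
graph.align("quiz-7", "multiplying whole numbers", "assesses")

print(graph.study_order())
print(graph.ready_to_learn({"adding whole numbers"}))
```

Note that nothing in this structure says what "multiplying" or "whole number" means: the labels are opaque strings, and every edge has to be supplied by a person or induced from data.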

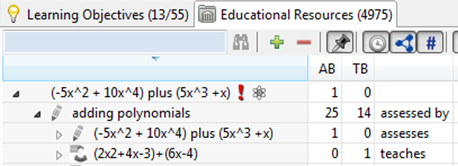

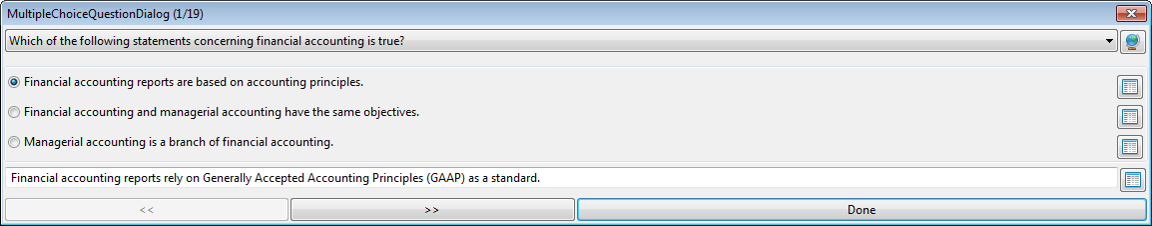

The following user interface supports curation of the alignment of educational resources and learning objectives, for example:

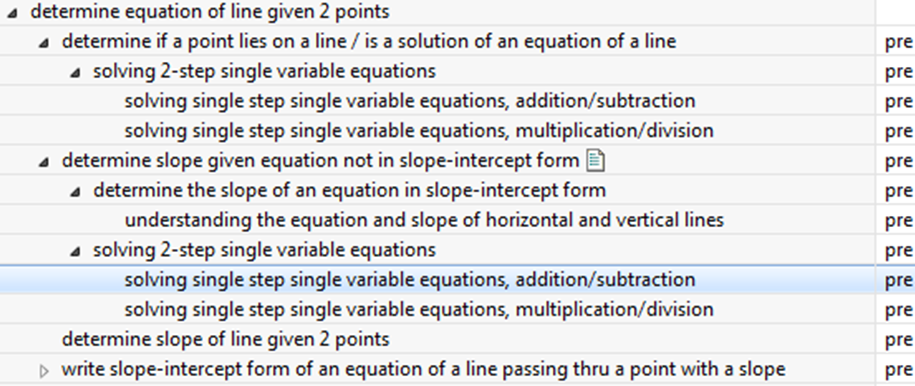

And the following supports curation of the dependencies between learning objectives (as in a prerequisite graph):

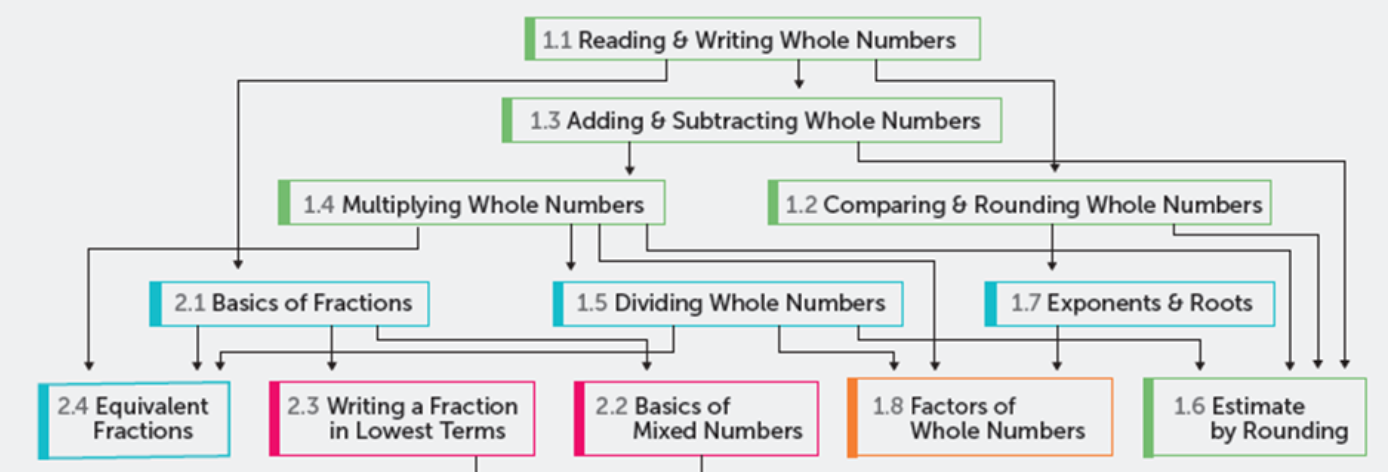

Here is a presentation of similar dependencies from Khan Academy:

And here is a depiction of such dependencies in Pearson’s use of Knewton within MyLab (cited above):

Of course there is much more that belongs in a pedagogical model, but let’s look at the fundamentals and their limitations before diving too deeply.

Prerequisite Graphs

The link on Knewton recommendations cited above includes a graphic showing some of the learning objectives and their dependencies concerning arithmetic. The labels of these learning objectives include:

- reading & writing whole numbers

- adding & subtracting whole numbers

- multiplying whole numbers

- comparing & rounding whole numbers

- dividing whole numbers

And more:

- basics of fractions

- exponents & roots

- basics of mixed numbers

- factors of whole numbers

But:

- There is nothing in the “knowledge graph” that represents the semantics (i.e., meaning) of “number”, “fraction”, “exponent”, or “root”.

- There is nothing in the “knowledge graph” that represents what distinguishes whole from mixed numbers (or even that fractions are numbers).

- There is nothing in the “knowledge graph” that represents what it means to “read”, “write”, “multiply”, “compare”, “round”, or “divide”.

Graphs, Ontology, Logic, and Knowledge

Because systems with knowledge or cognitive graphs lack such representation, they suffer from several problems, including the following, which are of immediate concern:

- dependencies between learning objectives must be explicitly established by people, thereby increasing the time, effort, and cost of developing active learning solutions, or

- learning objectives that are not explicitly dependent may become dependent as evidence indicates, which requires exponentially increasing data as the number of learning objectives increases, thereby offering low initial and asymptotic efficacy versus more intelligent and knowledge-based approaches

For example, more advanced semantic technology standards (e.g., OWL and/or SBVR or defeasible modal logic) can represent that digits are integers are numbers and an integer divided by another is a fraction. Consequently, a knowledge-based system can infer that learning objectives involving fractions depend on some learning objectives involving integers. Such deductions can inform machine learning such that better dependencies are induced (initially and asymptotically) and can increase the return on investment of human intelligence in a cognitive computing approach to pedagogical modeling.
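
The kind of deduction described above can be sketched in a few lines. This is a toy stand-in for what an OWL or SBVR reasoner would do, not a use of those standards: the dictionaries `IS_A`, `DEFINED_FROM`, and `OBJECTIVE_CONCEPTS`, and the tagging of objectives with concepts, are assumptions made for illustration.

```python
# Toy taxonomy: each concept maps to the concept it is a kind of.
IS_A = {
    "digit": "integer",
    "whole number": "integer",
    "integer": "number",
    "fraction": "number",
}

# Concepts whose definition refers to other concepts:
# "an integer divided by another is a fraction".
DEFINED_FROM = {"fraction": {"integer"}}

# Hypothetical tagging of learning objectives with the concepts they involve.
OBJECTIVE_CONCEPTS = {
    "adding & subtracting whole numbers": {"whole number"},
    "basics of fractions": {"fraction"},
    "exponents & roots": {"exponent"},
}


def ancestors(concept):
    """Yield the concept itself and everything it is a kind of."""
    while concept is not None:
        yield concept
        concept = IS_A.get(concept)


def inferred_prerequisites(objective):
    """Objectives involving concepts that this objective's concepts are defined from."""
    needed = set()
    for concept in OBJECTIVE_CONCEPTS[objective]:
        needed |= DEFINED_FROM.get(concept, set())
    candidates = set()
    for other, concepts in OBJECTIVE_CONCEPTS.items():
        if other == objective:
            continue
        if any(needed & set(ancestors(concept)) for concept in concepts):
            candidates.add(other)
    return sorted(candidates)


print(inferred_prerequisites("basics of fractions"))
```

Here the dependency of fractions on whole numbers is derived from two stated facts (whole numbers are integers; fractions are defined from integers) rather than drawn by hand. Such candidates could then seed or constrain whatever dependencies machine learning induces from evidence.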

As another example, consider that adaptive educational technology either knows or does not know that multiplying one integer by another is equivalent to adding the first to itself the other number of times. Similarly, it either knows or does not know how multiplication and division are related. How effectively can it improve learning if it does not know? How much more work is required to get such systems to achieve acceptable efficacy without such knowledge? Would you want your child instructed by someone who was incapable of understanding and applying such knowledge?

Semantics of Concepts, Cognitive Skills, and Learning Objectives

Consider again the labels of the nodes in Knewton/Pearson’s prerequisite graph listed above. Notice that:

- the first group of labels are all sentences while the second group are all noun phrases

- the first group (of sentences) are cognitive skills more than they are learning objectives

- i.e., they don’t specify a degree of proficiency, although one may be implicit with regard to the educational resources aligned with those sentences

- the second group (of noun phrases) refer to concepts (or, implicitly, sentences that begin with “understanding”)

- the second group (of noun phrases) that begin with “basics” are unclear learning objectives or references to concepts

For adaptive educational technology that does not “know” what these labels mean, or anything about the meanings of the words that occur in them, the issues noted above may not seem important, but they clearly limit the utility and efficacy of such graphs.

Taking a cognitive computing approach, human intelligence helps artificial intelligence understand these sentences and phrases deeply and precisely. A cognitive computing approach also results in artificial intelligence that deeply and precisely understands many additional sentences of knowledge that don’t fit into such graphs.

For example, the system comes to know that reading and writing whole numbers is a conjunction of finer-grained learning objectives and that, in general, reading is a prerequisite to writing. It comes to know that whole numbers are non-negative integers, which are typically positive. It comes to know that subtraction is the inverse of addition (which implies some dependency relationship between addition and subtraction). In order to understand exponents, the system is told and learns about raising numbers to powers and about what it means to square a number. The system is told and learns about roots and how they relate to exponents and powers, including how square roots relate to squaring numbers. The system is told that a mixed number is an integer and a proper fraction that together correspond to an improper fraction.
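
Several of these facts are simple enough to state as executable definitions. The sketch below is only an illustration of what "knowing" them could mean operationally; the function names are assumptions, and a knowledge-based system would represent such facts declaratively rather than as Python code.

```python
from fractions import Fraction
from math import isqrt


def is_whole_number(n):
    """Whole numbers are the non-negative integers."""
    return isinstance(n, int) and n >= 0


def multiply_by_repeated_addition(a, times):
    """Multiplication as adding `a` to itself `times` times (times must be whole)."""
    return sum(a for _ in range(times))


def to_mixed(improper):
    """Split a non-negative fraction into an integer part and a proper fraction."""
    whole, remainder = divmod(improper.numerator, improper.denominator)
    return whole, Fraction(remainder, improper.denominator)


def from_mixed(whole, proper):
    """Recombine an integer and a proper fraction into one (possibly improper) fraction."""
    return whole + proper


# Subtraction undoes addition, and division undoes multiplication.
assert (9 + 4) - 4 == 9
assert multiply_by_repeated_addition(4, 3) // 3 == 4

# Taking a square root undoes squaring.
assert isqrt(5 * 5) == 5

# A mixed number corresponds to an improper fraction, and back again.
assert to_mixed(Fraction(7, 2)) == (3, Fraction(1, 2))
assert from_mixed(3, Fraction(1, 2)) == Fraction(7, 2)
```

Each inverse relationship asserted here is also a hint about dependencies between learning objectives: a learner, or a system, cannot make sense of `to_mixed` without already having division with remainders.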

Adaptive educational technology either understands such things or it does not. If it does not, human beings will have to work much harder to achieve a system with a given level of efficacy and subsequent machine learning will take a longer time to reach a lower asymptote of efficacy.