Although a bit of a stretch, this post was inspired by the following blog post, which talks about the Facebook API in terms of learning styles. If you’re interested in such things, you are probably also aware of learning record stores and things like the Tin Can API. You need these things if you’re supporting e-learning across devices, for example…

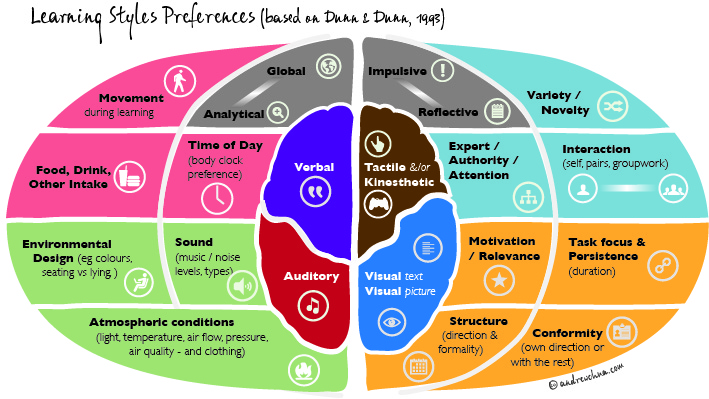

Really, though, I was looking for information like the following nice rendering from Andrew Chua:

There’s a lot of hype in e-learning about what it means to “personalize” learning. You hear people say that their engagements (or recommendations for same) take into account individuals’ learning styles. Well…

Several models of how people learn differently, or of what can affect learning, are somewhat conflated in the notion of “learning styles”. They include:

- Myers-Briggs

- Grasha-Riechmann

- Felder-Silverman

- Kolb

Here’s a great background paper on these and another discussed below:

- Coffield, Frank, et al. “Learning styles and pedagogy in post-16 learning: A systematic and critical review.” (2004).

Among the various dimensions of these models are:

- social aspects, like how cooperative or competitive one tends to be

- whether one tends to learn independently or depending on an instructor

- whether one is more or less introverted or extroverted

- how well one learns visually versus aurally (or verbally, such as in reading vs. listening or speaking)

- notions of how one perceives or processes concretely or abstractly, actively or reflectively, intuitively or …

- whether one makes decisions or judgements deliberatively or intuitively

- whether one comprehends in a sequential or bottom-up versus global or top-down manner

In the context of social e-learning, some of these are clearly useful. In the context of deciding what problems to recommend or where in a textbook or on the web to direct a learner, the jury is out. For details, see the excellent critical comments on Wikipedia, including:

- Despite a large and evolving research programme, forceful claims made for impact are questionable because of limitations in many of the supporting studies and the lack of independent research on the model.

- At present, there is no adequate evidence base to justify incorporating learning styles assessments into general educational practice.

So we have to be careful buying the hype that learning styles will move the accelerometer needle when it comes to e-learning. But there’s another model of learning styles that is more interesting: Dunn & Dunn.

Dunn & Dunn have done an excellent job of providing a framework that is ready for use in e-learning. Some of the other models give us hints about how to characterize and distinguish learners by personality or cognitive traits. Dunn & Dunn gives more on what can affect learning without being as concerned with personality. At least that’s what I see and like about the framework.

There is a lot of information that could be available for personalizing learning beyond the “knowledge state” that was introduced by the folks behind ALEKS (now part of McGraw-Hill). The idea of a “knowledge state” is, essentially, a cluster in an n-dimensional space of mastery, where each dimension denotes a level of mastery of some learning objective or concept. Personalizing learning by recommending what maximizes improvement for people in such a cluster is the guts of the big-data approach to adaptive educational technology. Learning styles are an afterthought, for the most part, and far less efficacious, at least given the criticism discussed above.
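To make the cluster idea concrete, here is a minimal sketch in Python. It is only an illustration under my own assumptions, not how ALEKS or any other product works: the objectives, the centroids, and the "best next objective" table are all made up, and a real system would learn them from data.

```python
from math import dist

# Hypothetical learning objectives; each position in a mastery vector
# is a mastery level in [0, 1] for the objective at the same index.
OBJECTIVES = ["fractions", "decimals", "ratios"]

# Hypothetical cluster centroids ("knowledge states"), as if learned
# offline from many learners' mastery vectors.
CENTROIDS = {
    "novice": [0.2, 0.1, 0.1],
    "fractions-strong": [0.9, 0.4, 0.3],
    "advanced": [0.9, 0.9, 0.8],
}

# Hypothetical lookup: the objective assumed to help each cluster most.
BEST_NEXT = {
    "novice": "fractions",
    "fractions-strong": "decimals",
    "advanced": "ratios",
}

def knowledge_state(mastery):
    """Return the name of the centroid nearest to a mastery vector."""
    return min(CENTROIDS, key=lambda name: dist(CENTROIDS[name], mastery))

def recommend(mastery):
    """Recommend the next objective for the learner's cluster."""
    return BEST_NEXT[knowledge_state(mastery)]

learner = [0.8, 0.5, 0.2]
print(knowledge_state(learner), recommend(learner))
```

The point of the sketch is only that the recommendation depends on the cluster and nothing else; none of the sensor information discussed in this post enters into it.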

But ignoring the kinds of information that Dunn & Dunn have mapped out is just plain dumb. Without fixating on Dunn & Dunn per se, let’s consider information that could improve an e-learning offering:

- facial orientation – the laptops, tablets and smartphones on which e-learning software runs can see your face, recognize you, and tell when you are facing the device. If you’re constantly looking away, that will probably be reflected in how much you learn.

- eye movement – my smartphone scrolls depending on where I look. If I’m staring at the same spot on the screen, I might be tired or daydreaming. Recommending text at a high reading level might not be a good idea right now.

- saccades – if your eye is moving around while your face is pointed at the screen, you’re engaged!

  - when people talk about saccades they’re generally talking about rapid eye movements, but the term can refer to other rapid body movements, which an accelerometer can pick up if it isn’t sitting on a desk.

  - if you jump, it might be worth knowing that you might have been distracted. Or if your foot is twitching while you’re reading on your tablet or smartphone, should the e-learning system take that into consideration?

  - you could also view information from touch sensors as providing saccade information (aside from user interface activity, such as clicking, dragging, scrolling, or scaling)

- blinking – if you’re not doing it you’re either thinking hard or tired or hungry or… if you are blinking a lot, you’re probably thinking and perhaps challenged (i.e., stumped or confused)

- posture – if your tablet’s in your lap or your hands, the accelerometer and gravitational sensors can give useful information

  - listening to a lecture while you’re walking is better than recommending a journal article

  - are you lying on the floor, sitting at a desk, or reclining on a sofa? Do you learn better from different types of resources in those circumstances?

- location – are you in class, at the library, or at home?

  - Maybe it doesn’t matter which, but we can cluster sensor data into modes of engagement and learn how to teach you better.

- time of day is missing from other learning style models; Dunn & Dunn have it

  - media might be a better recommendation when there’s a little motion while you’re reclining in the evening.

- ambient light and noise information can indicate that media may or may not be preferable at the moment

  - what if the noise is mostly one or two voices?

- temperature, humidity, and pressure are available in many cases…

A lot of this is distinguishing learning style from mode of engagement. Mode of engagement matters, perhaps more than personality-based learning styles. And it can distinguish a good mobile e-learning solution from a dumb one.
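Here is a rough sketch of what clustering sensor data into modes of engagement could look like. Everything in it is an assumption for illustration: the three features (motion, ambient noise, time of day), their scaling to [0, 1], the sample readings, and the choice of plain k-means seeded from the first few points.

```python
from math import dist

def kmeans(points, k, iterations=10):
    """Plain k-means with a deterministic start: the first k points seed the centroids."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each snapshot goes to its nearest centroid.
        labels = [min(range(k), key=lambda c: dist(centroids[c], p)) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # leave an empty cluster's centroid where it is
                centroids[c] = [sum(axis) / len(members) for axis in zip(*members)]
    return centroids, labels

# Hypothetical snapshots: (motion, ambient noise, hour of day / 24), each in [0, 1].
snapshots = [
    (0.05, 0.10, 0.40),  # still, quiet, mid-morning
    (0.80, 0.60, 0.35),  # moving, noisy, morning
    (0.10, 0.15, 0.45),  # still, quiet, late morning
    (0.75, 0.70, 0.30),  # moving, noisy, morning
    (0.05, 0.20, 0.90),  # still, quiet, late evening
    (0.10, 0.10, 0.85),  # still, quiet, evening
]

centroids, labels = kmeans(snapshots, k=3)
print(labels)
```

Each resulting cluster is a candidate mode of engagement (say, "at a desk in the morning", "on the move", "reclining in the evening"), and outcomes can then be compared per mode. A real version would need more care, for example with time of day, which wraps around midnight and is not well served by a straight-line distance.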

We’re working on a service-oriented architecture that takes this kind of information into a learning record store. Tabtor has been one recent inspiration. Their use of digital paper is great. Other great ideas are more than welcome!
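For a flavor of how sensor context might travel to a learning record store, here is a sketch of a Tin Can (xAPI) style statement built in Python. It is not our actual architecture. The actor, verb, object, and context fields follow the general shape of an xAPI statement, but the extension IRIs under `example.com`, the sensor names, and the `engagement_statement` helper are hypothetical.

```python
import json
from datetime import datetime, timezone

# Hypothetical IRI prefix for sensor-context extensions; xAPI expects
# extension keys to be IRIs but does not define these particular ones.
EXT = "https://example.com/xapi/extensions/"

def engagement_statement(learner_email, activity_id, sensors):
    """Build an xAPI-style statement whose context carries sensor readings."""
    return {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{learner_email}"},
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/experienced",
            "display": {"en-US": "experienced"},
        },
        "object": {"objectType": "Activity", "id": activity_id},
        "context": {
            "extensions": {EXT + name: value for name, value in sensors.items()}
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }

statement = engagement_statement(
    "learner@example.com",
    "https://example.com/activities/fractions-lecture",
    {"posture": "reclining", "ambient-noise": 0.2, "facing-screen": True},
)
print(json.dumps(statement, indent=2))
```

Putting the readings in the statement's context keeps them next to the learning event they describe, so a later analysis can group outcomes by mode of engagement.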