It may be a bit of a stretch, but this post was inspired by the following blog post, which discusses the Facebook API in terms of learning styles. If you’re interested in such things, you are probably also aware of learning record stores and the Tin Can API. You need these if you’re supporting e-learning across devices, for example…

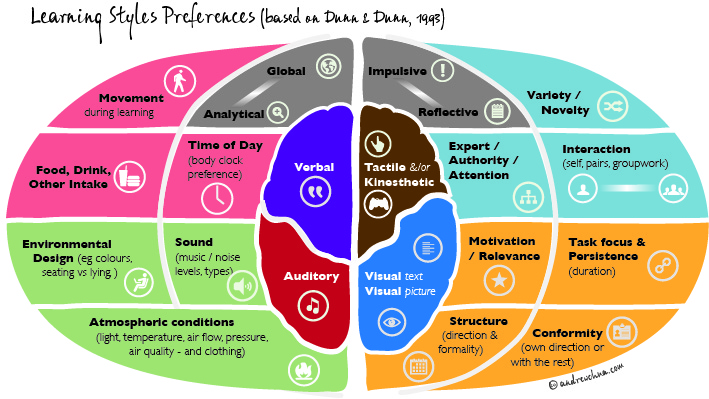

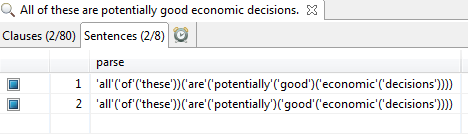

Really, though, I was looking for information like the following nice rendering from Andrew Chua:

There’s a lot of hype in e-learning about what it means to “personalize” learning. You hear people say that their engagements (or their recommendations for engagements) take individuals’ learning styles into account. Well…