Many users end up wanting to import sentences into the Linguist rather than type or paste them in one at a time. One way to do this is to right-click on a group within the Linguist and import a file of sentences, one per line, into that group. But if you want to import a large document and retain its outline structure, some application-specific use of the Linguist APIs may be the way to go.
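Before any API calls are made, the outline itself has to be turned into a tree. The sketch below does only that. It assumes the document is plain text with one item per line, uses spaces (4 per level by default) for indentation, and treats any line with more deeply indented lines beneath it as a group. The `Group` class and `parse_outline` function are hypothetical illustrations, not part of the Linguist APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Group:
    """Hypothetical group node: a title plus nested Groups and sentence strings."""
    title: str
    children: list = field(default_factory=list)


def parse_outline(text: str, indent: int = 4) -> Group:
    """Build a tree from a space-indented outline, one item per line.

    Lines followed by more deeply indented lines become groups;
    every other line becomes a sentence of its enclosing group.
    """
    # Collect (depth, item) pairs, skipping blank lines.
    items = []
    for raw in text.splitlines():
        if not raw.strip():
            continue
        depth = (len(raw) - len(raw.lstrip(" "))) // indent
        items.append((depth, raw.strip()))

    root = Group("root")
    stack = [(-1, root)]  # (depth, group) pairs from outermost to innermost
    for i, (depth, item) in enumerate(items):
        # Close any groups at the same or a deeper level than this item.
        while stack[-1][0] >= depth:
            stack.pop()
        parent = stack[-1][1]
        has_children = i + 1 < len(items) and items[i + 1][0] > depth
        if has_children:
            group = Group(item)
            parent.children.append(group)
            stack.append((depth, group))
        else:
            parent.children.append(item)
    return root


doc = """Section 1
    First sentence.
    Second sentence.
Section 2
    Subsection 2.1
        Third sentence.
"""
tree = parse_outline(doc)
```

Each resulting `Group` could then be mapped onto a Linguist group, and each sentence string imported into it, using whatever calls the application's API provides.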

Iterative Disambiguation

In a prior post we showed how long, extraordinarily ambiguous sentences can be precisely interpreted. Here, by request, we take a simpler look.

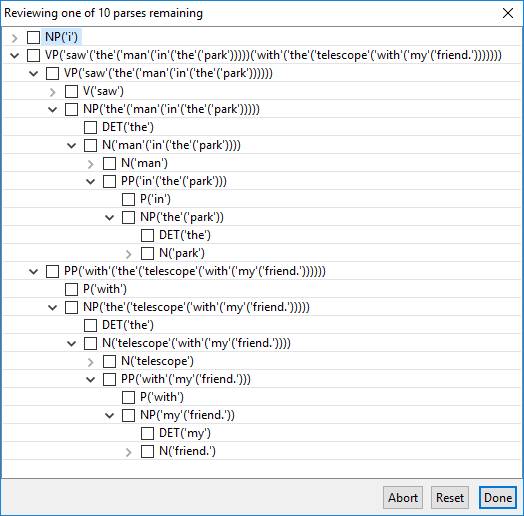

Let’s take a sentence that has more than 10 parses and configure the software to disambiguate among no more than 10.

Once again, this is a trivial sentence to disambiguate in seconds without iterative parsing!

The immediate results might look like this:

Suppose the intended reading is not that the telescope is with my friend; in that case, veto “telescope with my friend” with a right-click.
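To make the iterative idea concrete, here is a minimal sketch, assuming each parse can be summarized as a score plus the set of phrases it contains. A veto removes every parse containing the vetoed phrase, and the display window of at most 10 parses is then refilled from the next-best survivors. The `Parse` class, the `visible` function, the scores and the phrase sets are all hypothetical; this is not how the Linguist represents parses internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Parse:
    """Hypothetical parse summary: a ranking score and the phrases it contains."""
    score: float
    phrases: frozenset


def visible(parses, vetoed, limit=10):
    """Return the best `limit` parses that contain none of the vetoed phrases."""
    survivors = [p for p in parses if not (p.phrases & set(vetoed))]
    return sorted(survivors, key=lambda p: p.score, reverse=True)[:limit]


# Illustrative parses; only the phrase "telescope with my friend" comes from the text.
parses = [
    Parse(0.9, frozenset({"telescope with my friend"})),
    Parse(0.8, frozenset({"telescope", "with my friend"})),
    Parse(0.7, frozenset({"telescope with my friend", "my friend"})),
    Parse(0.5, frozenset({"telescope", "my friend"})),
]

before = visible(parses, set(), limit=2)
after = visible(parses, {"telescope with my friend"}, limit=2)
```

With a window of 2, the veto eliminates the 0.9 and 0.7 parses, so the 0.5 parse, previously out of view, moves into the window. Repeating veto (or confirm) steps in this way is what lets a sentence with far more parses than the window be disambiguated a few clicks at a time.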

“I don’t own a TV set. I would watch it.”

Are vitamins subject to sales tax in California?

What is the part of speech of “subject” in the sentence:

- Are vitamins subject to sales tax in California?

Related questions might include:

- Does California subject vitamins to sales tax?

- Does California sales tax apply to vitamins?

- Does California tax vitamins?

“Vitamins” is the object of the verb in each of these sentences (through “to” in the second), so perhaps you would think “subject” is a verb in the original sentence…


Dictionary Knowledge Acquisition

The following is motivated by Section 6359 of the California Sales and Use Tax Law. It demonstrates how knowledge can be acquired from dictionary definitions:

Here, we’ve taken a definition from WordNet, prefixed it with the word being defined followed by a colon, and parsed the result using the Linguist.

‘believed by many’

A Linguist user recently had a question about part of a sentence that boiled down to something like the following:

- It is believed by many.

The question was whether “many” was an adjective, cardinality, or noun in this sentence. It’s a reasonable question!

Parsing Winograd Challenges

The Winograd Challenge is an alternative to the Turing Test for assessing artificial intelligence. The essence of the test involves resolving pronouns. To date, systems have not fared well on the test, for several reasons. Three come to mind:

- The natural language processing involved in the word problems is beyond the state of the art.

- Resolving many of the pronouns requires more common sense knowledge than state of the art systems possess.

- Resolving many of the problems requires pragmatic reasoning beyond the state of the art.

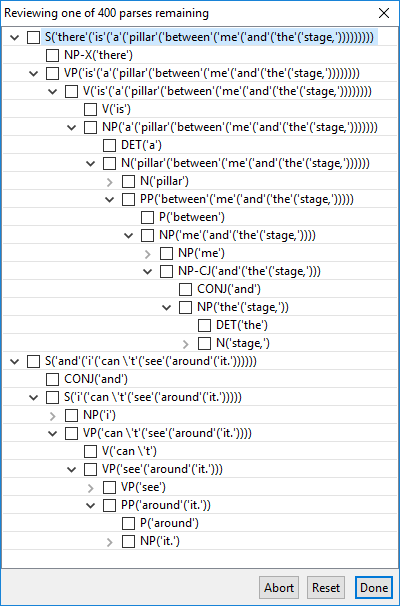

For example, one of the simpler problems is:

- There is a pillar between me and the stage, and I can’t see around it.

A heuristic system (or a deep learning one) could infer that “it” does not refer to “me” or “I” and then toss a coin between “pillar” and “stage”. A system worthy of passing the Winograd Challenge should “know” it’s the pillar.
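The coin-toss behavior is easy to reproduce. The sketch below is a hypothetical agreement-only resolver: it tags each candidate antecedent with grammatical person, discards candidates that disagree with the third-person “it”, and picks at random among the rest. Nothing in it encodes that a pillar can block one’s view of a stage, so it is right only about half the time.

```python
import random

# Hypothetical candidate antecedents for "it", tagged with grammatical person.
CANDIDATES = [("me", 1), ("pillar", 3), ("stage", 3), ("I", 1)]


def heuristic_resolve(pronoun_person, candidates, rng):
    """Keep candidates that agree in person with the pronoun, then guess among them."""
    compatible = [word for word, person in candidates if person == pronoun_person]
    return compatible, rng.choice(compatible)


rng = random.Random(0)  # seeded only so the run is repeatable
compatible, guess = heuristic_resolve(3, CANDIDATES, rng)
```

Agreement filtering narrows the field to “pillar” and “stage”, but choosing between them requires knowledge about seeing around things, which is exactly what the heuristic lacks.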

Even this simple sentence presents some NLP challenges that are easy to overlook. For example, does “between” modify the pillar or the verb “is”?

This is not much of a challenge, however, so let’s touch on some deeper issues and a more challenging problem…

Nominal semantics of ‘meaning’

Just a quick note about a natural language interpretation that came up for the following sentence:

- Under that test, the rental to an oil well driller of a “rock bit” having an effective life of but one rental is a transaction in lieu of a transfer of title within the meaning of (a) of this section.

The NLP system comes up with many hundreds of possible parses for this sentence (mostly because it considers lexical and syntactic possibilities that are not semantically plausible). Among these is a reading of “meaning” as a nominalization.

From Wikipedia:

- In linguistics, nominalization is the use of a word which is not a noun (e.g. a verb, an adjective or an adverb) as a noun, or as the head of a noun phrase, with or without morphological transformation.

It’s quite common to use the present participle of a verb as a noun. In this case, Google comes up with this definition for the noun ‘meaning’:

- what is meant by a word, text, concept, or action.

The NLP system has a definition of “meaning” as a mass or count noun as well as definitions for several senses of the verb “mean”, such as these:

- intend to convey, indicate, or refer to (a particular thing or notion); signify.

- intend (something) to occur or be the case.

- have as a consequence or result.

Combinatorial ambiguity? No problem!

While translating some legal documents (sales and use tax laws and regulations) into compliance logic, we came across the following sentence (and many more that are even worse):

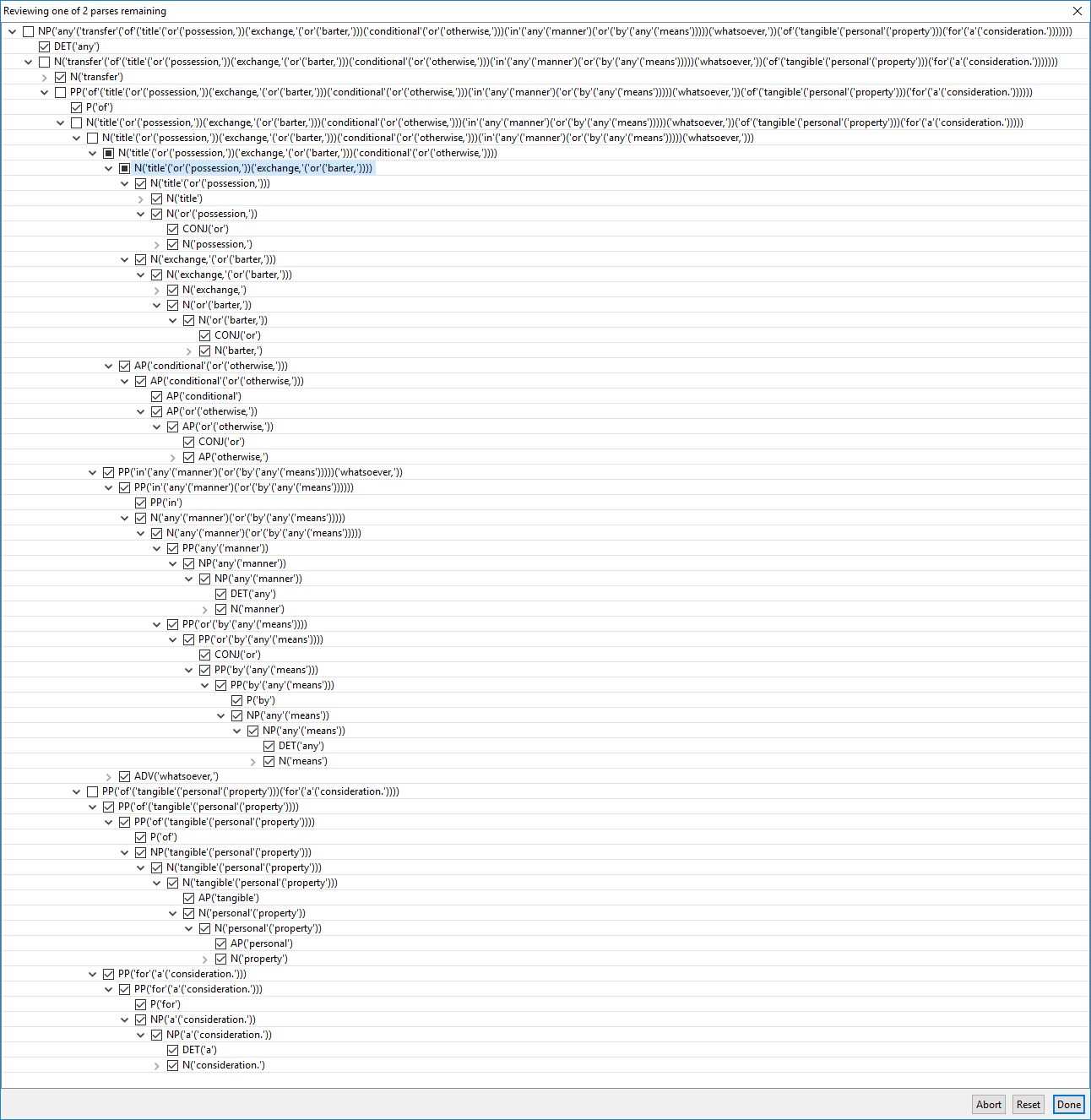

- Any transfer of title or possession, exchange, or barter, conditional or otherwise, in any manner or by any means whatsoever, of tangible personal property for a consideration.

Natural language processing systems choke on sentences like this because of their combinatorial ambiguity and because NLP systems typically lack knowledge about what can be conjoined with, complement, or modify what.

This sentence has many thousands of possible parses. They differ in the scope of each ‘or’, in what is modified by ‘conditional’, ‘otherwise’, and ‘whatsoever’, and in what is complemented by ‘in’, ‘by’, ‘of’, and ‘for’.
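A little arithmetic shows how quickly such counts grow. The number of ways to group n + 1 conjuncts with binary ‘or’s is the n-th Catalan number, and if several ambiguities were independent of one another, their counts would multiply. The choice counts in the usage line below are hypothetical and are not an analysis of this particular sentence; real attachment choices interact, so this is only a rough illustration.

```python
from math import comb, prod


def catalan(n: int) -> int:
    """Number of distinct binary bracketings of n + 1 items (the n-th Catalan number)."""
    return comb(2 * n, n) // (n + 1)


def total_readings(choice_counts):
    """Combined count if each ambiguity were independent of the others."""
    return prod(choice_counts)


# Bracketings for 2 through 7 conjuncts.
bracketings = [catalan(n) for n in range(1, 7)]

# Hypothetical: 4 conjuncts, plus 4 modifiers with 3 possible attachment sites each.
example = total_readings([catalan(3), 3, 3, 3, 3])
```

Here `bracketings` is `[1, 2, 5, 14, 42, 132]`, and even the small hypothetical example yields 5 × 3⁴ = 405 readings.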

The following shows the 2 parses that remain after we veto a number of mistakes and confirm some phrases among the 400 highest-ranking parses (a few right- or left-clicks of the mouse):

Robust Inference and Slacker Semantics

In preparing for some natural language generation[1], I came across work on natural logic[2][3] and recognizing textual entailment[4] (RTE) by Richard Bergmair in his PhD thesis at Cambridge:

The work he describes overlaps with our approach to robust inference from the deep, variable-precision semantics that result from linguistic analysis and disambiguation using the English Resource Grammar (ERG) and the Linguist™.