In this article, I hope you will learn about the future of predictive analytics in decision management, and how tighter integration between rules and learning is being developed to adaptively improve diagnostic capabilities, especially in maximizing profitability and detecting adversarial conduct such as fraud, money laundering, and terrorism.

Business Intelligence

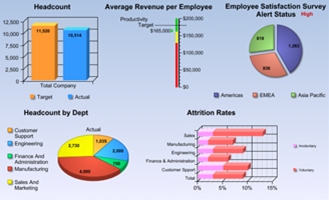

Visualizing business performance is obviously important, but improving business performance is even more important. A good view of operations, such as this nice dashboard[1], helps management see the forest (and, with good drill-down, some interesting trees).

With good visualization, management can gain insights into how to improve business processes, but if the view does not include a focus on outcomes, improvement in operational decision making will be relatively slow in coming.

Whether or not you use business intelligence software to produce reports or present dashboards, you can improve your operational decision management by applying statistics and other predictive analytic techniques to discover hidden correlations between what you know before a decision and what you learn afterwards, and then use those correlations to improve your decision making over time.

This has become known as decision management thanks to Fair Isaac Corporation, though not until after it acquired Hecht Nielsen Corporation (HNC).

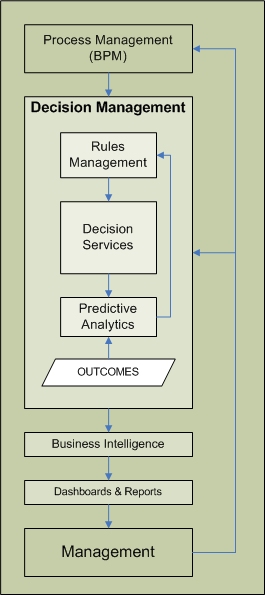

Enterprise Decision Management

HNC pioneered the use of predictive analytics to optimize decision making. Dr. Hecht-Nielsen formed the company in 1986 to apply neural network technology to predicting fraud. The resulting application (perhaps it is more of a tool) is called Falcon. It works.

In 2002, Fair Isaac acquired HNC (for roughly $800,000,000 in stock) to pursue a “common strategic vision for the growth of the analytics and decision management technology market”. But shortly before the merger, HNC had acquired Blaze Software from Brokat for a song, about a month before 9/11 and in the wake of the Dot Bomb of October 2000. This gave HNC, which already had great learning technology, a business rules management system (BRMS), and with it the opportunity to play in broader business process management (BPM), including highly regulated areas such as underwriting and rating.

Of course, the business rules market has since become fairly mainstream and closely related to governance, risk and compliance (GRC), all of which were beyond the point decision-making capabilities of both HNC and Fair Isaac before these transactions.

Once Fair Isaac had predictive and rule technology under one roof, bright employees, such as James Taylor, coined “Enterprise Decision Management”, or EDM for short.

Predictive Analytic Sweet Spots

Before it merged with Fair Isaac, HNC’s machine learning technology was successful (meaning it was saving tons of money, not just running in an application or two) in each of the following business-to-consumer (B2C) application areas:

- Credit card fraud

- Workmen’s compensation fraud

- Property and casualty fraud

- Medical insurance fraud

Clearly, fraud across insurance and financial services is a sweet spot for decision management. Today, that includes money laundering and, more generally, any form of deceit, including adversarial forms such as terrorism.

HNC also moved into retail and other B2C areas, including:

- Targeting direct marketing campaigns

- Customer relationship management (CRM)

- Inventory management

Some of the specific areas in marketing and CRM included:

- “Up-selling” (i.e., predicting who might buy something better – and more expensive)

- “Cross-selling” (i.e., predicting which customers might buy something else)

- Loyalty (e.g., customer retention and increasing share of wallet)

- Profitable customer acquisition (e.g., reducing “churn”)

The inventory applications included:

- Merchandising and price optimization

- SKU-level forecasting, allocation and replenishment

Predictive Analytic Challenges

The principal problem with predictive analysis is the care and feeding of the neural network or the business intelligence software. This involves formulating models, running them against example input data with known outcomes, and examining the results. For the most part, this is the province of statisticians or artificial intelligence folk.

A secondary challenge involves the gap between the output of a predictive model and the actual decision. A predictive model generally outputs a continuous score rather than a discrete decision. To make a decision, a threshold is typically applied to this score.

- Yes or no questions are answered by applying a threshold to a score produced by a formula or neural network to determine “true” or “false”.

- Multiple choice questions are answered using a predictive model per choice and choosing the one with the highest score.

- More complex decisions are answered as above using a predictive model that combines the scores produced by other predictive models.
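The three patterns above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the function names, the 0.5 threshold, and the weights and bias of the combining model are all hypothetical, and a real combining model would be fitted to historical outcomes.

```python
import math


def logistic(z: float) -> float:
    """Squash an unbounded linear score into a probability-like value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def yes_no(score: float, threshold: float = 0.5) -> bool:
    """Yes/no question: apply a threshold to a continuous score."""
    return score >= threshold


def multiple_choice(scores: dict) -> str:
    """Multiple choice: one model score per choice; pick the highest-scoring choice."""
    return max(scores, key=scores.get)


def combined_score(component_scores: list, weights: list, bias: float) -> float:
    """Complex decision: a second-level model that combines other models' scores.

    The weights and bias here are hypothetical; a real model would be fitted to outcomes.
    """
    z = bias + sum(w * s for w, s in zip(weights, component_scores))
    return logistic(z)


if __name__ == "__main__":
    print(yes_no(0.73))  # True
    print(multiple_choice({"gold": 0.41, "platinum": 0.58, "basic": 0.12}))  # platinum
    print(round(combined_score([0.8, 0.3], weights=[2.0, 1.5], bias=-1.5), 3))  # 0.634
```

Note that the threshold itself is a business decision: moving it trades false positives against false negatives without retraining the model.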

In general, especially where decisions are governed by policy or regulation, predictive models and decision tables are combined with rules using one of the following approaches:

- More complex decisions are answered as above using predictive models that are selected by rules in compliance with governing policy or regulations.

- More complex decisions are answered using rules that consider the scores produced by predictive models in compliance with governing policy or regulations.
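Both approaches can be shown together in a small sketch. Everything here is hypothetical: the `Applicant` fields, the two stand-in "models" (which a real system would train), the score cut-offs, and the `NO_AUTO_DECLINE_STATES` policy, which stands in for a regulation that forbids declining on a score alone.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    age: int
    state: str
    has_prior_claims: bool


# Stand-in "predictive models" (hypothetical); real ones would be trained on outcomes.
def young_driver_model(a: Applicant) -> float:
    return 0.30 + (0.20 if a.has_prior_claims else 0.0)


def standard_model(a: Applicant) -> float:
    return 0.10 + (0.15 if a.has_prior_claims else 0.0)


# Hypothetical policy: in these jurisdictions a model score alone may not trigger a decline.
NO_AUTO_DECLINE_STATES = {"ZZ"}


def select_model(a: Applicant):
    """Approach 1: a rule selects which predictive model applies."""
    return young_driver_model if a.age < 25 else standard_model


def decide(a: Applicant) -> str:
    """Approach 2: rules consider the selected model's score under governing policy."""
    risk = select_model(a)(a)
    if risk > 0.4:
        return "refer" if a.state in NO_AUTO_DECLINE_STATES else "decline"
    if risk > 0.2:
        return "refer"
    return "accept"


if __name__ == "__main__":
    print(decide(Applicant(22, "CA", True)))   # decline
    print(decide(Applicant(22, "ZZ", True)))   # refer
    print(decide(Applicant(40, "CA", False)))  # accept
```

Keeping the policy checks in explicit rules, rather than burying them in model weights, is what lets compliance staff inspect and change them independently of the models.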

In general, governance, risk and compliance (GRC) requires rules in addition to any predictive models. Rules are also commonly used within or to select predictive models. And special cases and exceptions are common applications of rules in combination with predictive models.

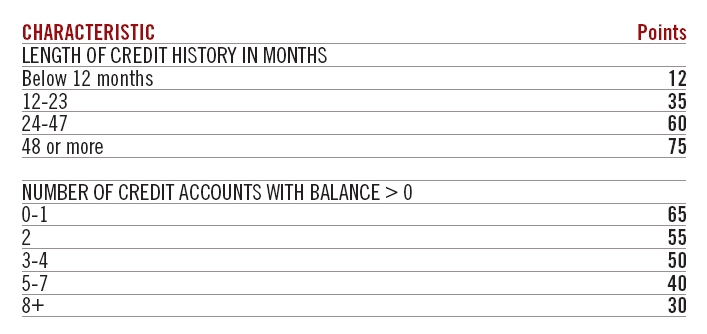

Scorecards

A simple case of defining (or combining) predictive models is a scorecard. The following example shows a scorecard from Fair Isaac’s nice brochure on predictive analytics that could be part of a creditworthiness score:

Fair Isaac is the leader in credit scoring, of course. Their FICO score is the output of a proprietary predictive model.
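Mechanically, a scorecard assigns points to each characteristic according to which bin its value falls in, then sums the points. The sketch below assumes three made-up characteristics with made-up bins and points; it is not Fair Isaac's scorecard or the FICO model.

```python
import math

# Hypothetical scorecard (not Fair Isaac's): for each characteristic, a list of
# (exclusive upper bound, points) bins checked in order.
SCORECARD = {
    "years_at_address": [(1, 5), (3, 15), (math.inf, 30)],
    "revolving_utilization_pct": [(30, 40), (70, 20), (math.inf, 5)],
    "late_payments_last_year": [(1, 35), (3, 15), (math.inf, 0)],
}


def points_for(characteristic: str, value: float) -> int:
    """Return the points of the first bin whose upper bound exceeds the value."""
    for upper, points in SCORECARD[characteristic]:
        if value < upper:
            return points
    raise ValueError(f"{characteristic}={value!r} falls outside every bin")


def score(applicant: dict) -> int:
    """A scorecard score is simply the sum of points across characteristics."""
    return sum(points_for(name, applicant[name]) for name in SCORECARD)


if __name__ == "__main__":
    print(score({"years_at_address": 2, "revolving_utilization_pct": 45,
                 "late_payments_last_year": 0}))  # 15 + 20 + 35 = 70
```

The additive form is what makes scorecards easy to explain, and also what makes special cases awkward to express in them.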

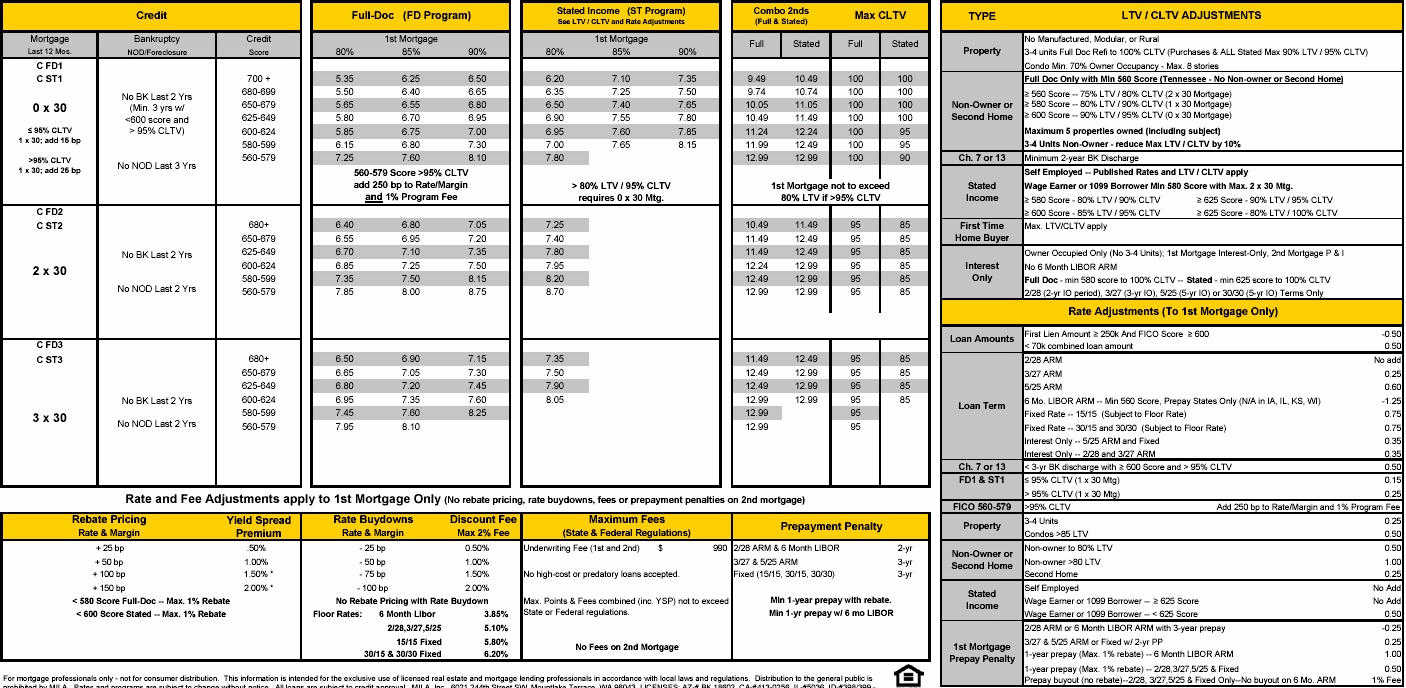

The following example shows how Fair Isaac’s predictive model is combined with other factors in the mortgage industry:

Note all the exceptions and special cases spread throughout this scorecard!

This explains why business rules have been so popular in the mortgage industry. Pre-qualifying and quoting across many lenders clearly requires a business rules approach (which explains why Gallagher Financial embedded my stuff in their software a decade ago). Even a single lender has to deal with its own special cases, and the bigger the lender the more there are (which is why Countrywide Financial[2] developed its own rules technology, called Merlin, decades ago).
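To make the point concrete, here is a sketch of a pricing sheet whose "notes" are written as rules layered on a base rate from a credit score band. The `Loan` fields, FICO bands, rates, and adjustments are all invented for illustration and do not reflect any lender's actual pricing.

```python
from dataclasses import dataclass


@dataclass
class Loan:
    fico: int
    ltv_pct: float       # loan-to-value ratio, in percent
    property_type: str   # e.g. "single_family" or "condo"
    cash_out: bool


# Hypothetical base rates by FICO band, highest band first: (minimum FICO, rate %).
BASE_RATE_BY_FICO = [(740, 6.000), (700, 6.250), (660, 6.625), (620, 7.125)]

# Hypothetical pricing-sheet "notes" expressed as rules: (name, condition, rate add-on %).
ADJUSTMENT_RULES = [
    ("high LTV", lambda loan: loan.ltv_pct > 80, 0.250),
    ("condo", lambda loan: loan.property_type == "condo", 0.125),
    ("cash-out refinance", lambda loan: loan.cash_out, 0.375),
    ("low FICO with very high LTV", lambda loan: loan.fico < 680 and loan.ltv_pct > 90, 0.500),
]


def quote(loan: Loan):
    """Return (rate, names of rules applied), or (None, reasons) if ineligible."""
    base = next((rate for min_fico, rate in BASE_RATE_BY_FICO if loan.fico >= min_fico), None)
    if base is None:
        return None, ["FICO below program minimum"]
    applied = [(name, add_on) for name, condition, add_on in ADJUSTMENT_RULES if condition(loan)]
    return base + sum(add_on for _, add_on in applied), [name for name, _ in applied]


if __name__ == "__main__":
    print(quote(Loan(690, 85, "condo", False)))  # (7.0, ['high LTV', 'condo'])
```

Each lender would carry its own list of rules, which is why quoting across many lenders quickly becomes a rules-management problem rather than a modeling problem.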

Decision tables

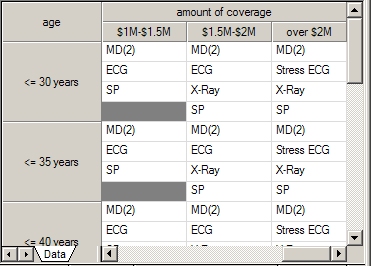

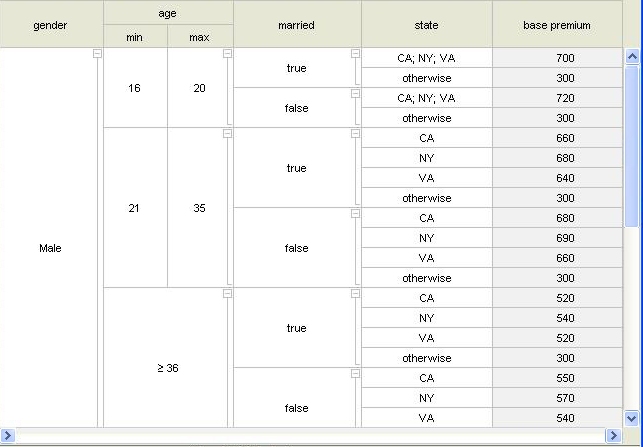

For anything but the simplest decisions, the results of predictive models are considered along with other data using rules to make decisions. In some cases, these rules are simple enough to fit into a decision table (or a decision tree rendered as a table) such as the following:

Tables like the one on the left can be used during underwriting to determine what variables are appropriate for gauging the risk of death covered by a life insurance policy. This demonstrates that rules (in this case, very simple rules) can be used to determine which predictive model (or inputs) to consider in a decision.
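A decision table of this kind can be represented as ordered rows, where the first matching row determines which evidence, and therefore which model inputs, the underwriting decision will consider. The age bands, face amounts, and evidence names below are hypothetical placeholders rather than any insurer's actual requirements.

```python
# Hypothetical underwriting-requirements table; rows are checked in order, first match wins.
# Each row: (max age inclusive, max face amount inclusive, required evidence)
REQUIREMENTS_TABLE = [
    (40, 250_000, ("application",)),
    (40, 1_000_000, ("application", "paramedical exam", "blood profile")),
    (60, 250_000, ("application", "paramedical exam")),
    (60, 1_000_000, ("application", "paramedical exam", "blood profile", "ECG")),
]


def requirements(age: int, face_amount: int) -> tuple:
    """Return the evidence (model inputs) to gather for this applicant."""
    for max_age, max_face, evidence in REQUIREMENTS_TABLE:
        if age <= max_age and face_amount <= max_face:
            return evidence
    # Anything the table does not cover is a special case for a human underwriter.
    return ("refer to underwriter",)


if __name__ == "__main__":
    print(requirements(35, 100_000))  # ('application',)
    print(requirements(50, 500_000))  # ('application', 'paramedical exam', 'blood profile', 'ECG')
```

The fallback row illustrates how special cases escape the table and land with a person, which is where additional rules usually come in.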

Tables like the one on the right correspond to decision trees and can be used instead of scorecards to set the base premium for auto insurance. Additional rules typically adjust for other factors like driver’s education classes, driving record, student drivers, and other special cases and exceptions. This is similar to the use of notes in the mortgage pricing sheet shown above.
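A flattened decision tree for base premiums, followed by adjustment rules, might look like the sketch below. The age bands, vehicle classes, dollar amounts, the 10% driver-education discount, and the 20% surcharge per at-fault accident are all assumptions chosen for illustration.

```python
# Hypothetical base-premium table: a decision tree flattened into rows.
# Each row: (min age, max age, vehicle class, annual base premium in dollars)
BASE_PREMIUM_TABLE = [
    (16, 24, "sedan", 2400),
    (16, 24, "sports", 3600),
    (25, 64, "sedan", 1100),
    (25, 64, "sports", 1800),
    (65, 120, "sedan", 1300),
    (65, 120, "sports", 2100),
]


def base_premium(age: int, vehicle_class: str) -> int:
    """Look up the single row matching this driver's age band and vehicle class."""
    for min_age, max_age, cls, amount in BASE_PREMIUM_TABLE:
        if min_age <= age <= max_age and cls == vehicle_class:
            return amount
    raise LookupError(f"no table row for age={age}, class={vehicle_class!r}")


def premium(age: int, vehicle_class: str, completed_drivers_ed: bool = False,
            at_fault_accidents: int = 0) -> float:
    """Apply hypothetical adjustment rules for special cases on top of the base premium."""
    amount = base_premium(age, vehicle_class)
    if completed_drivers_ed and age < 25:
        amount *= 0.90                        # driver education discount for young drivers
    amount *= 1.0 + 0.20 * at_fault_accidents  # surcharge per at-fault accident
    return round(amount, 2)


if __name__ == "__main__":
    print(premium(20, "sedan", completed_drivers_ed=True))  # 2160.0
    print(premium(30, "sports", at_fault_accidents=1))      # 2160.0
```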

The point is that real decisions are not as simple as a single predictive model, a scorecard, or a decision table. And once these decisions are defined and automated using any combination of these techniques, improving them can seem overwhelmingly complex (just from a technical perspective!).

Predictive analytics is not enough for EDM

Enterprise Decision Management (EDM), discussed above, is all about this multi-dimensional decision technology environment (scorecards, decision tables, and rules), but it is also about bringing in statistical and neural network technology to improve the decision making process more easily and less manually or subjectively. The Fair Isaac brochure referenced above, for example, has some nice graphics showing statistical techniques (such as clustering) and graphs showing interconnected “nerves”.

There are several aspects of decision making that not even magically successful machine learning will eliminate, however:

- The requirement to comply with governing policies or regulations.

- Special cases that cannot be learned for various reasons, including:

  - Limitations on the number of variables used in predictive analytics.

  - Poorly understood, non-linear relationships in the data.

  - A lack of adequate sampling for special cases.

  - A need for certainty rather than probability.

- Exceptions that cannot be learned, as with special cases.

Of course, special cases and exceptions are common in both policy and regulation. For example, consider policies that arise from contracts or customer relationships, or the evolutionary nature of legislation, as reflected in the article on the earned income tax credit.

Rules are not enough for EDM

At the other extreme, commercial rule technology has not been capable of adaptively improving decision management. In fact, except when they are modified by people, the rules used in decision management are completely static, as well as entirely black and white. There is no learning in any of the business rules management systems from leaders like Fair Isaac, Ilog, Haley, Corticon, or Pegasystems.

Amazingly, there are no mainstream rule systems today that deal with probability or other kinds of uncertainty. Without such support, every rule in the tools from the vendors previously mentioned is black and white. This makes them very awkward for applications such as diagnosis. And all decision management applications, including profit maximization and all forms of fraud detection, are intrinsically diagnostic. Prediction results in probabilities!

The earliest diagnostic expert systems were developed at Stanford and SRI International. One used subjective probabilities to diagnose bacterial infections. Another used more rigorous probabilities to find ore deposits (it more than paid for itself when it found a $100,000,000 molybdenum deposit in the early 1980s!). These applications were called MYCIN and PROSPECTOR, respectively.

This is really shocking, since the technology of these systems is well understood and technically almost trivial. The truth is that the Carnegie Mellon approach to business rules has won because it dealt with “the closed-world assumption”, which meant that it could handle missing data better. But CMU’s approach was strictly black and white. After the success of OPS5 at Digital Equipment Corporation, the commercialization of expert systems at Carnegie Group, Inference Corporation, IntelliCorp and Teknowledge left Stanford, and uncertainty with it, in the dust during the mid-eighties. Neuron Data, which became Blaze, followed the same trail away from the uncertain toward the black and white of tightly governed and regulated decisions.

With nothing but black and white rules, EDM leaves it up to people to adapt the decisions. Sure, they can use predictive analytics, but there is no closed loop from predictive analytics back into the rules. Any new rules or any changes to rules follow the stand-alone business rules approach.

Innovate for Rewards with Bounded Risk

One problem with black and white rules technology is that it forces you to be right. This stifles innovation. Ideally, you could formulate an idea and experiment with it at bounded risk. For example, you could say “what if we offered free checking to anyone who opens a new credit card account with us?” and test it out. You don’t want to absorb the cost of thousands accepting your offer only to lose more in checking fees than you gain in credit card fees. So you limit how often, or how many, such offers can be made.

Not surprisingly, this approach is tried-and-true. Its most common form is the champion/challenger approach. Fair Isaac has been “championing” this approach for some time (see this from James Taylor).
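A bounded-risk champion/challenger test can be sketched as a router that sends a small random share of cases to the challenger strategy, with a hard cap on the total number of challenger decisions. The class name, the 5% default share, the cap, and the "free checking" challenger are hypothetical; the sketch only shows the routing, not how results are evaluated afterwards.

```python
import random


class ChampionChallenger:
    """Route a random share of cases to a challenger, capped to bound the downside."""

    def __init__(self, champion, challenger, challenger_share=0.05,
                 max_challenger=1000, seed=None):
        self.champion = champion
        self.challenger = challenger
        self.challenger_share = challenger_share
        self.max_challenger = max_challenger
        self.challenger_count = 0
        self.rng = random.Random(seed)  # seeded for reproducible experiments

    def decide(self, case):
        """Return (arm, decision) so outcomes can later be attributed to each strategy."""
        use_challenger = (self.challenger_count < self.max_challenger
                          and self.rng.random() < self.challenger_share)
        if use_challenger:
            self.challenger_count += 1
            return "challenger", self.challenger(case)
        return "champion", self.champion(case)


if __name__ == "__main__":
    router = ChampionChallenger(
        champion=lambda case: "standard card offer",
        challenger=lambda case: "card offer with free checking",  # hypothetical idea under test
        challenger_share=0.05, max_challenger=20, seed=7)
    arms = [router.decide({"customer_id": i})[0] for i in range(1000)]
    print(arms.count("challenger"))  # never more than 20
```

Note that the router only bounds the experiment; deciding which arm wins is still left to people analyzing the outcomes, which is exactly the gap discussed next.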

But how do you close the loop? How do the rules learn when this new option should be used to maximize profit? The fact is, they don’t. People do it using predictive analytic techniques and manually refining the rules.

The problem, once again, is that the rules do not learn and that their outcomes are black and white. The rules do not offer a probability that this will be a profitable transaction. And they do not learn whether a transaction will be profitable over time, either. That’s up to the users of predictive technology and managers.

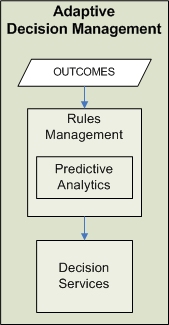

Adaptive Decision Management

Adaptive Decision Management (ADM) is the next step in EDM. In ADM, the loop between predictive analytics and rules is closed. At a minimum, this involves learning the probabilities or reliability of rules and their conclusions. This learning uses statistical or neural network techniques that can be trained, optimized, and tested off-line and, if your circumstances allow, even left to continue learning, adapting, and optimizing while on-line. For example, advertising, promotional (e.g., pricing) and social applications almost always adapt continuously. Unfortunately, none of them use rules to do this yet, since the major players don’t support it!
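One simple way to learn the reliability of a rule is to count how often its conclusion turned out to be right and smooth the estimate with a prior. The sketch below assumes a Beta prior (a generalization of Laplace smoothing); this is one illustrative statistical choice, not the method of any product mentioned here, and `RuleReliability` and the rule identifiers are hypothetical.

```python
from collections import defaultdict


class RuleReliability:
    """Estimate P(rule's conclusion is correct) from observed outcomes.

    A Beta(prior_hits, prior_misses) prior sets the starting probability of a rule
    that has never fired; evidence then moves it toward the observed hit rate.
    """

    def __init__(self, prior_hits: float = 1.0, prior_misses: float = 1.0):
        self.prior_hits = prior_hits
        self.prior_misses = prior_misses
        self.hits = defaultdict(int)
        self.misses = defaultdict(int)

    def record(self, rule_id: str, conclusion_was_correct: bool) -> None:
        """Close the loop: feed back the outcome each time the rule fires."""
        if conclusion_was_correct:
            self.hits[rule_id] += 1
        else:
            self.misses[rule_id] += 1

    def probability(self, rule_id: str) -> float:
        """Posterior mean of the rule's reliability."""
        h = self.hits[rule_id] + self.prior_hits
        m = self.misses[rule_id] + self.prior_misses
        return h / (h + m)


if __name__ == "__main__":
    tracker = RuleReliability()
    for correct in [True] * 8 + [False] * 2:
        tracker.record("flag-rapid-address-change", correct)  # hypothetical rule id
    print(tracker.probability("flag-rapid-address-change"))   # (8 + 1) / (10 + 2) = 0.75
```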

Innovation and ADM

The adaptation of rule-based logic brings new flexibility and opportunity to the use of rules in decision management. Adding a black and white business rule requires complete certainty that the rule will result in only appropriate decisions. Of course, such certainty is a high hurdle. Adaptive rules have a relatively low hurdle.

With adaptive rules an innovative idea can be introduced with a low, or even a zero probability. As experience accumulates, the learning mechanism (again, statistical or neural) determines how reliable the rule is (i.e., how well it would have performed given outcomes). The technology can even learn how to weight and combine the conditions of rules so as to maximize their predictive accuracy. Without learning “inside a rule”, the probability of the rule as a whole may remain too low to be useful. And, unlike a black box neural network, the functions that combine conditions and the probabilities of rules are readily accessible, whether for insight or oversight.
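"Learning inside a rule" can be illustrated by treating each condition of a rule as a 0/1 input and fitting a logistic model to outcomes, so that the learned weights show how much each condition contributes. This sketch uses plain full-batch gradient descent on log loss; the function names, learning rate, epoch count, and the synthetic data in the usage example are all assumptions for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def rule_probability(bias: float, weights: list, conditions: list) -> float:
    """P(outcome) for a rule whose conditions are given as 0/1 flags."""
    return sigmoid(bias + sum(w * c for w, c in zip(weights, conditions)))


def train_rule_weights(examples: list, epochs: int = 5000, lr: float = 0.5):
    """Fit (bias, weights) by full-batch gradient descent on log loss.

    examples: list of (condition flags, outcome) pairs, outcome 0 or 1.
    """
    n = len(examples[0][0])
    bias, weights = 0.0, [0.0] * n
    m = len(examples)
    for _ in range(epochs):
        grad_b, grad_w = 0.0, [0.0] * n
        for conditions, outcome in examples:
            err = rule_probability(bias, weights, conditions) - outcome
            grad_b += err
            for i, c in enumerate(conditions):
                grad_w[i] += err * c
        bias -= lr * grad_b / m
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
    return bias, weights


if __name__ == "__main__":
    # Synthetic data: condition 0 predicts the outcome 80% of the time; condition 1 is noise.
    data = ([([1, 0], 1)] * 4 + [([1, 0], 0)] + [([1, 1], 1)] * 4 + [([1, 1], 0)]
            + [([0, 0], 0)] * 4 + [([0, 0], 1)] + [([0, 1], 0)] * 4 + [([0, 1], 1)])
    b, w = train_rule_weights(data)
    print([round(x, 2) for x in w])                   # large weight on condition 0, ~0 on 1
    print(round(rule_probability(b, w, [1, 0]), 2))   # about 0.8
```

Unlike a black box, the fitted bias and weights can be read directly, which supports the insight and oversight mentioned above.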

The overall impact of adaptive rules is that you can put an idea into action within a generalized, probabilistic champion/challenger framework. And using techniques such as the certainty factors used in MYCIN or the more rigorous subjective Bayesian method used in PROSPECTOR, more patterns can be considered and leveraged with the continuously improving performance that EDM is all about.
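For reference, here is a sketch of the two classic ways of combining uncertain rule conclusions: MYCIN-style certainty factor combination, and PROSPECTOR-style updating of prior odds by likelihood ratios for evidence known to be present. The function names are hypothetical, and the sketch omits PROSPECTOR's interpolation for evidence that is itself uncertain.

```python
def combine_cf(cf1: float, cf2: float) -> float:
    """MYCIN-style combination of two certainty factors in [-1, 1] for one hypothesis."""
    if cf1 >= 0 and cf2 >= 0:
        return cf1 + cf2 * (1 - cf1)
    if cf1 < 0 and cf2 < 0:
        return cf1 + cf2 * (1 + cf1)
    denominator = 1 - min(abs(cf1), abs(cf2))
    if denominator == 0:
        raise ValueError("cannot combine definite confirmation with definite disconfirmation")
    return (cf1 + cf2) / denominator


def to_odds(p: float) -> float:
    return p / (1 - p)


def to_prob(odds: float) -> float:
    return odds / (1 + odds)


def bayes_update(prior: float, likelihood_ratios: list) -> float:
    """Odds-likelihood update for evidence observed to be present.

    Each ratio is P(E | H) / P(E | not H). Multiplying them assumes the pieces of
    evidence are conditionally independent given H.
    """
    odds = to_odds(prior)
    for ratio in likelihood_ratios:
        odds *= ratio
    return to_prob(odds)


if __name__ == "__main__":
    print(round(combine_cf(0.6, 0.5), 3))          # 0.8
    print(round(bayes_update(0.01, [3.0, 4.0]), 3))  # 0.108
```

In an adaptive setting, the certainty factors or likelihood ratios are exactly the numbers that would be learned from outcomes rather than set by hand.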

The advantages of ADM include:

- the improving performance of EDM

- faster and more continuous improvement versus manual EDM

- a generalized approach to champion / challenger using probabilities

- better predictive performance than manually maintained scorecards or tables

- improved performance over black and white EDM by leveraging innovation adaptively

Although they haven’t told me about it explicitly, I would expect Fair Isaac to move in this direction first among the current leaders given their EDM focus. I would not be surprised to see business intelligence (BI) vendors, perhaps SAS, move in this direction, too. I know it will happen since we are already working with one commercial source of adaptive rules technology. Unfortunately, Automata is under NDA about their approach for now, but stay tuned… In the meantime, if you’re interested in learning more, please drop us a note at info at haleyAI.com. And if you see any issues or good applications, we would love to hear them.

[1] A nice dashboard from Financial Services Technology (http://www.fsteurope.com/) using Corda (http://www.corda.com/).

[2] I recently helped Countrywide upgrade to our software, just as much for usability as for performance improvements.

Great article! I have observed that companies are doing rule analytics by developing their own solutions. I believe one of the challenges for software vendors in adding this capability is data integration. We are still maturing the rule testing capability!

From a technology perspective, I believe both Fair Isaac and ILog leveraged the object-oriented movement. I’m not sure whether new data/knowledge technologies such as RDF will bring us more flexibility and therefore change the current picture of the industry.

Look forward to seeing more insightful posts from you!

Timely and informative article, Paul. I would just add that when EDM deals with multiple alternatives, not only are rules and predictive analytics not enough, you also need CP (Constraint Programming) and other optimization techniques to be included in the EDM suite.

Paul, excellent article and music to our ears! At Zementis, we have been pursuing the direction of “Adaptive Decision Technology” by combining predictive analytics and rules in our ADAPA decision engine.

They are, so to speak, the yin and yang of decisions, one representing explicit knowledge (rules) and the other one representing implicit knowledge which is “hidden” in your data and can be discovered by statistical algorithms.

I am sure we will see a broader adoption of predictive analytics as part of EDM (or ADM) as the industry makes it easier to deploy and manage such complex decision systems.