The title is in tribute to Raj Reddy’s classic talk about how it’s hard to wreck a nice beach.

I came across interesting work on higher-order and semantic dependency parsing today:

- Turning on the Turbo: Fast Third-Order Non-Projective Turbo Parsers.

- Priberam: A turbo semantic parser with second order features

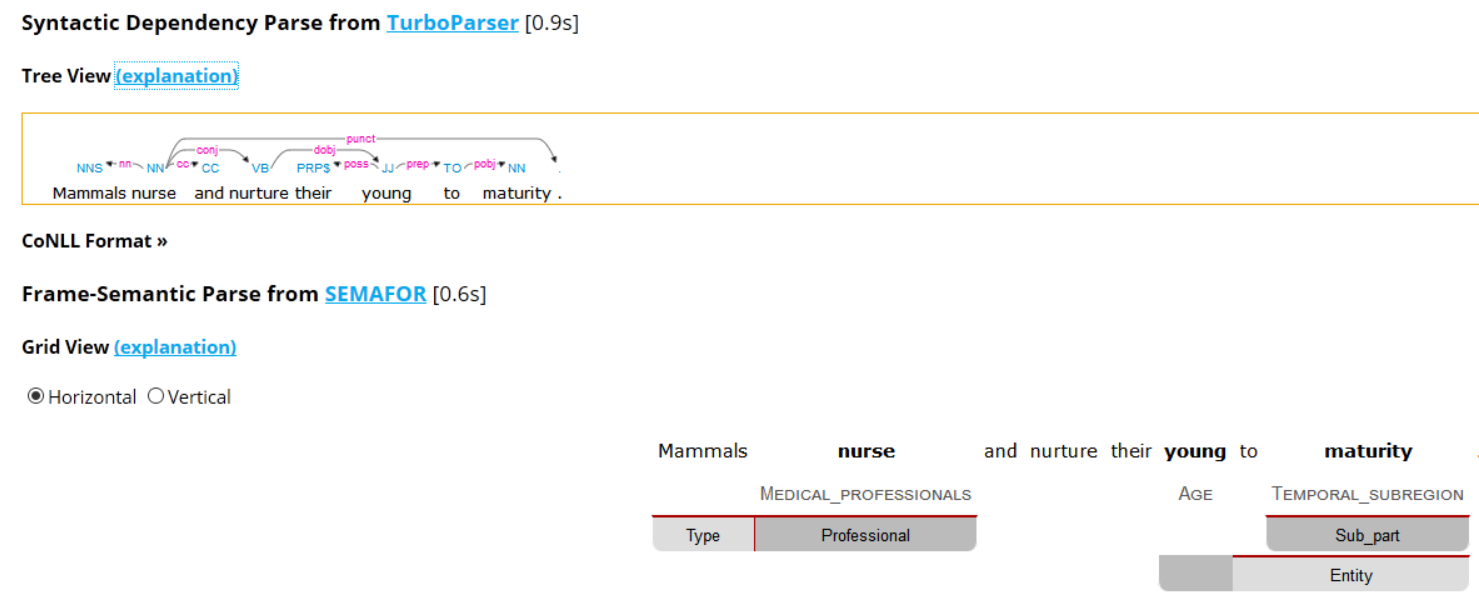

So I tried the software on the sentence I discussed in this post. The results, discussed below, were somewhat disappointing but not unexpected. So I tried a well-known parser, with similar results (also shown below).

There is no surprise here. Both parsers are marvels of machine learning and natural language processing technology. It’s just that understanding is far beyond the ken of even the best NLP. This may be obvious to some, but many are surprised given all the hype about Google and Watson and artificial intelligence or “deep learning” recently.

I was a little surprised that it missed several things, especially mistakes on the parts of speech of “mammals nursing”.

The semantics from FrameNet are obviously mistaken, but the mistake in what “to maturity” complements was also disappointing.

Of course, this is a statistical dependency parser, so the right parse might be somewhere down in the ranking. It just shows how hard it is to train such parsers.
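To make the ranking point concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the three-token sentence, the candidate tag sequences, and the scores are made up, and it does not call either parser discussed here. It only shows how the analysis a person would pick can sit below the one a statistical model ranks first.

```python
from typing import List, NamedTuple, Optional, Tuple


class Candidate(NamedTuple):
    tags: Tuple[str, ...]  # one part-of-speech tag per token
    score: float           # model score, higher is better (made-up values)


def rank_of(candidates: List[Candidate], wanted: Tuple[str, ...]) -> Optional[int]:
    """Return the 1-based rank of the analysis tagged `wanted`, or None if absent."""
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    for rank, cand in enumerate(ranked, start=1):
        if cand.tags == wanted:
            return rank
    return None


# Hypothetical candidates for the tokens "mammals", "nurse", "young".
candidates = [
    Candidate(("VERB", "NOUN", "ADJ"), 0.41),   # what the toy model prefers
    Candidate(("NOUN", "NOUN", "ADJ"), 0.33),
    Candidate(("NOUN", "VERB", "NOUN"), 0.26),  # what a human reader would pick
]

best = max(candidates, key=lambda c: c.score)
wanted_rank = rank_of(candidates, ("NOUN", "VERB", "NOUN"))
print("top-ranked tags:", best.tags)
print("rank of the intended analysis:", wanted_rank)
```

A parser that exposes only its single best analysis hides the lower-ranked candidates, which is why a sensible reading can exist in the model and still never be shown.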

The Stanford parser more or less defines the state of the broader art and has been thoroughly trained and tuned, so I thought it would not make such mistakes.

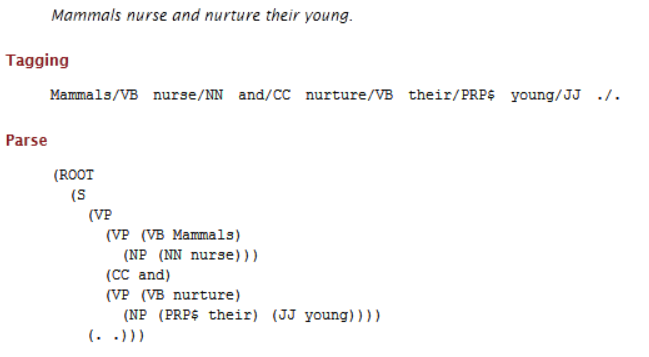

So I gave it a try on a simpler sentence after contemplating the results above.

I was even more surprised by this result, in which “mammals” is interpreted as a verb and “nurse” is interpreted as a noun!

And the interpretation of “young” is poor in each of the above results (it doesn’t make sense to “possess” an adjective).

The bottom line is that understanding English well enough to acquire useful logical knowledge requires a cognitive computing approach (i.e., both man and machine, not just the latter).

