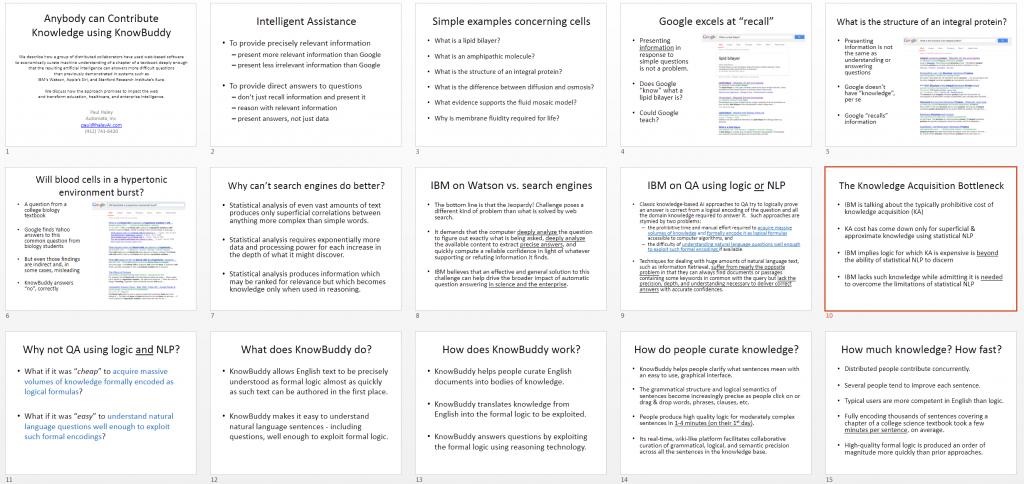

As I mentioned in this post, we’re having fun layering questions and answers with explanations on top of electronic textbook content.

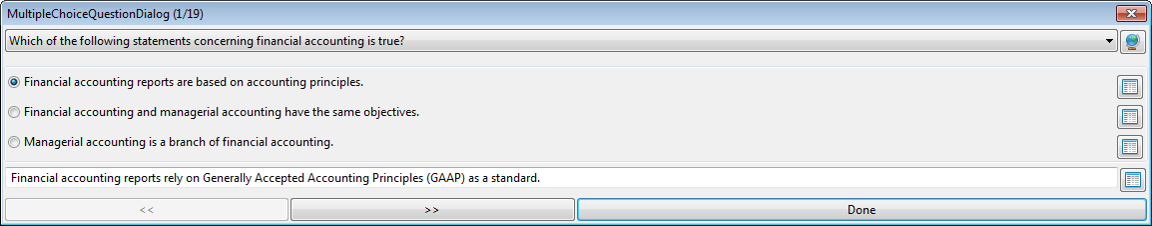

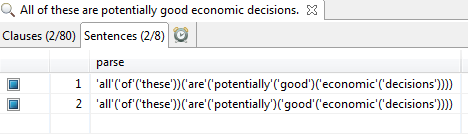

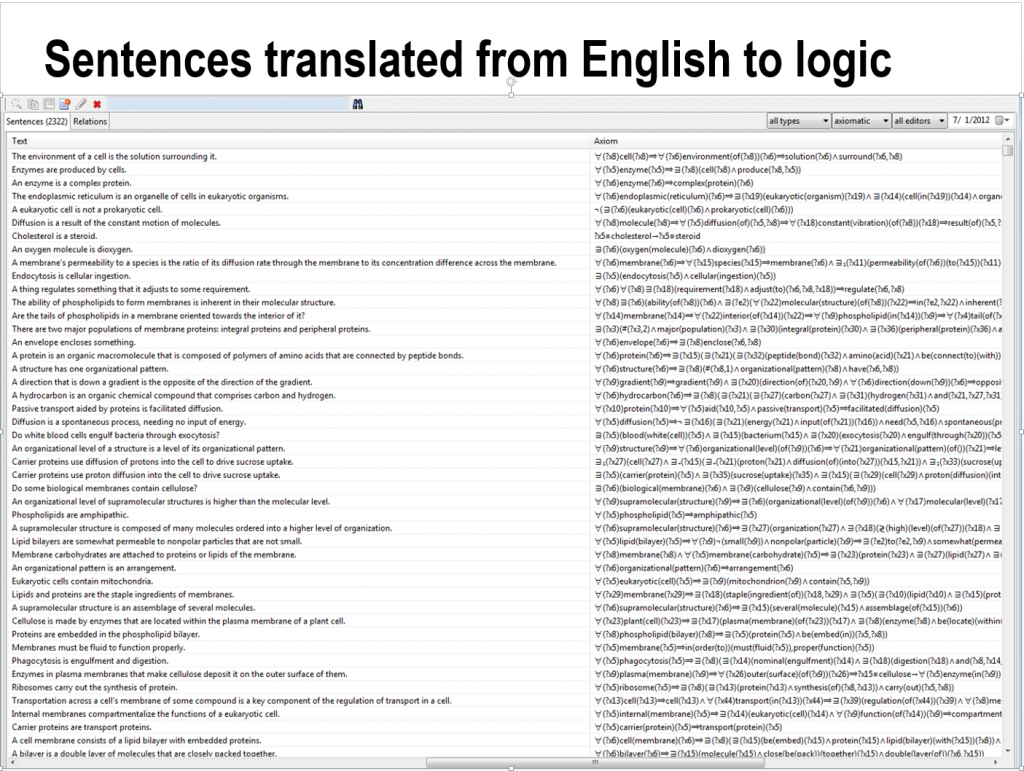

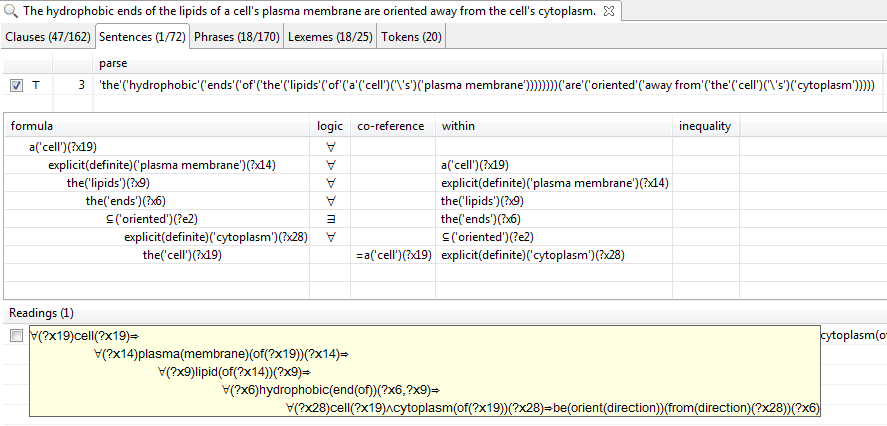

The basic idea is to couple a graph structure of questions, answers, and explanations into the text using semantics. The trick is to do that well enough, and automatically enough, that we can deliver effective adaptive learning support. This is analogous to the knowledge graph that users of Knewton’s API create for their content. The difference is that we get the graph from the content itself, including the “assessment items” (that’s what educators call questions, among other things). Essentially, we parse the content, including each question and each of its answers and explanations. The result of this parsing is, as we’ve described elsewhere, precise lexical, syntactic, semantic, and logical understanding of each sentence in the content. But we don’t have to go nearly that far to exceed the state of the art here.
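To make the graph idea concrete, here is a minimal sketch of how questions, answers, and explanations might be linked to sentences in the text. Everything in it is an assumption for illustration: the names `AssessmentItem`, `KnowledgeGraph`, and `build_graph` are hypothetical, and plain word overlap stands in for the semantic parsing described above, which is far more precise.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Small illustrative stopword list (an assumption, not a linguistic resource).
STOPWORDS = {"the", "a", "an", "of", "in", "is", "are", "what", "which",
             "to", "and", "by", "it", "does"}


def content_words(text: str) -> set[str]:
    """Lowercased alphabetic tokens minus stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


@dataclass
class AssessmentItem:
    """A question plus its answers and explanations (hypothetical schema)."""
    question: str
    answers: dict[str, str]  # answer text -> explanation text


@dataclass
class KnowledgeGraph:
    """Edges from node ids (question, answer, explanation) to sentence indices."""
    sentences: list[str]
    edges: dict[str, set[int]] = field(default_factory=dict)

    def link(self, node_id: str, text: str, min_overlap: int = 2) -> None:
        # Stand-in for semantic matching: require a few shared content words.
        terms = content_words(text)
        for i, sentence in enumerate(self.sentences):
            if len(terms & content_words(sentence)) >= min_overlap:
                self.edges.setdefault(node_id, set()).add(i)


def build_graph(sentences: list[str], items: list[AssessmentItem]) -> KnowledgeGraph:
    """Link every question, answer, and explanation to the sentences it touches."""
    graph = KnowledgeGraph(sentences=sentences)
    for q, item in enumerate(items):
        graph.link(f"q{q}", item.question)
        for a, (answer, explanation) in enumerate(item.answers.items()):
            graph.link(f"q{q}.a{a}", answer)
            graph.link(f"q{q}.a{a}.why", explanation)
    return graph


sentences = [
    "Mitochondria produce most of the ATP in a cell.",
    "Ribosomes synthesize proteins from messenger RNA.",
]
items = [
    AssessmentItem(
        question="Which organelle produces most ATP in the cell?",
        answers={
            "Mitochondria": "Mitochondria produce ATP through cellular respiration.",
            "Ribosomes": "Ribosomes synthesize proteins, not ATP.",
        },
    )
]
graph = build_graph(sentences, items)
for node_id, linked in sorted(graph.edges.items()):
    print(node_id, sorted(linked))
```

In this toy example the explanation for the wrong answer links to a different sentence than the question does, which is the kind of signal an adaptive system could use to send a student back to the right passage. Word overlap is obviously brittle (a one-word answer never clears the threshold here), which is part of why deeper parsing matters.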