Recently, we “read” ten thousand recipes or so from a cooking web site. The purpose of doing so was to produce a formal representation of those recipes for use in temporal reasoning by a robot.

Our task was to produce ontology by reading the recipes subject to conflicting goals. On the one hand, the ontology was to be accurate so that the robot could reason, plan, and answer questions robustly. On the other hand, the ontology was to be produced automatically (with minimal human effort).[1]

In order to minimize human effort while still obtaining deep parses from which we produce ontology, we used more techniques from statistical natural language processing than we typically do in knowledge acquisition for deep QA, compliance, or policy automation. (Consider that NLP typically achieves less than 90% syntactic accuracy while such work demands near 100% semantic accuracy.)[2]

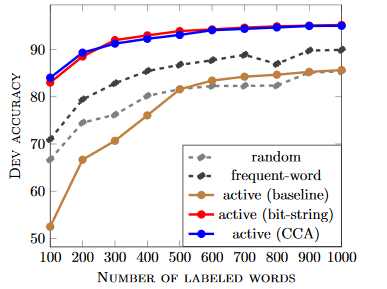

In the effort, we refined some prior work on representing words as vectors and semi-supervised learning. In particular, we adapted semi-supervised, active learning similar to Stratos & Collins 2015 using enhancements to the canonical correlation analysis (CCA) of Dhillon et al 2015 to obtain accurate part of speech tagging, as conveyed in the following graphic from Stratos & Collins:

Parts of Speech

In many NLP pipelines, a “parser” receives its input from the output of a part of speech “tagger”. Most typically in such pipelines, the tagger outputs one part of speech tag for each token (e.g., word) in the input.

We typically permit all the possible parts of speech to be considered during parsing rather than pruning some parts of speech during tagging. This allows us to find the right parse for many sentences that would otherwise be missed. For example, if a part of speech tagger selects the right tag 95% of the time, 1 out of 20 words will have the wrong tag. For text that contains an average of 15 or more words per sentence, relying on such tagging results in parsing failures for too many sentences.

Our client accepted that a more automated approach could not rival curated parses. But we agreed to minimize the initial use of human effort in a phase I approach. Thus, we needed to estimate the probabilities of parts of speech for a domain-specific vocabulary (i.e., for cooking).

The typical part of speech tagger uses a hidden Markov model (HMM) trained over a large corpus of manually tagged text. Our task constraints precluded the human effort to tag much of the recipe text, however.

We needed a method that minimized the investment required for such “supervised” learning…

Simple Semi-Supervised POS Tagging

Stratos and Collins’ MINITAGGER demonstrated the use of active learning to achieve state of the art accuracy while requiring an astonishingly small fraction of the human tagging effort of other approaches. There are few reasons for this impressive result, especially the use of fairly low-dimensional word-embeddings with efficient support vector machinery (SVM).

Distributed Representations of Words

There is a lot of literature on distributed representation of words. Typically, such representations are points in a high-dimensional space in which “similar” words are “close”. Such representations include Word2Vec and GloVe. The former vectors were obtained using neural networks while the latter were derived from co-occurrence matrices (from which GloVe derives the co-occurrence probabilities which are the basis for the algorithm).

In some ways, the distributed representation of words is similar to the use of latent semantic analysis (LSA) to represent the meaning of documents as vectors. LSA, in turn, is similar to principal components analysis (PCA), except that LSA uses term/document frequencies rather than term co-variance. Both use singular value decomposition (SVD) which is also used in canonical correlation analysis (CCA).

Canonical Correlation Analysis (CCA)

CCA determines relationships between two sets of variables without considering either set as dependent or independent, per se. In the case of word embeddings, the vector representations of words comprise one set of variables and the contexts in which those words occur comprise the other.

How we define the context of a word can vary. A context of the immediately preceding word would correspond to a bigram model, one word to each side would correspond to a trigram model, etc. We stuck with the window size of 5 as in the Stratos & Collins and Dhillon et al papers. Longer window sizes do not improve performance on syntactic tasks like part of speech tagging but do provide better representation of semantics for tasks like word sense disambiguation, as suggested in Pennington et al 2014.[3]

Computing the co-occurrence matrices for a vocabulary of V words requires on the order of V3 cells. For our target of at least 300,000 “words” in a multi-giga-word corpus, that’s a large number![4] We adapted Dhillon’s spectral learning toolkit (swell) and its use of the C++ linear algebra package Eigen to get past some memory issues and produced various sets of word vectors of dimensionality from 50, as used in Stratos & Collins to 300, as in GloVe and other representations.[5]

We evaluated the longer ones subjectively and found them to be state of the art, as expected given Dhillon et al 2015. We used the shorter ones to train a support vector machine (SVM) roughly as in MINITAGGER and as described below.

We also experimented with a variety of techniques to improve the resulting word vectors, including tokenization of several dozen different kinds of tokens (e.g., numbers or identifiers of various types, dates, times) and careful consideration of case rather than ubiquitous case folding. These adjustments to the co-occurrence matrices resulted in subjectively superior word vectors in smaller dimensionality or when given a smaller corpus. (The selected corpus has only a few million words for 10,000 recipes.)

Support Vector Machine (SVM)

Using the libSVM package and the following features, most of which are as in MINITAGGER (albeit within a Java package), we enjoyed the performance of the resulting active learning system, which would be impractical using less rapidly trainable alternatives, as suggested in Stratos & Collins 2015.

- One of several case features for the focal word

- One of several token type features for the focal word

- Prefixes and suffixes up to length 4 of the focal word

- 50-dimensional eigen vectors for each of 5 words

- Optional part of speech for each of 5 words

So now we can take a modest corpus in new domains and train an excellent part of speech tagger very easily. And we can improve its performance as much as needed using active training.

Further Reading

For more background, see our recent post, Of Kalman Filtering and Hidden Markov Models, especially the comments by authors from BBN on the need for such active learning in some contexts.

Also consider the following from Dhillon et al 2015:

- The most classic and successful algorithm for learning word embeddings is Latent Semantic Analysis (LSA), which works by performing SVD on the word by document matrix.

- Unfortunately, the state-of-the-art embedding methods suffer from a number of short-comings:

- They are slow to train (especially, the Deep Learning based approaches).

- They are sensitive to the scaling of the embeddings (e.g., LSA/PCA)

And the following from Rastogi et al 2015 (poster):

- LSA is an application of PCA to a single term-document co-occurrence matrix. CCA learns linear projections that are maximally correlated to each other from two views, Generalized CCA is a family of extensions of CCA to maximize correlation across multiple views.

- Multiview LSA is a way of utilizing hundreds of data sources to learn representations for millions of words/phrases that outperform baselines like Word2Vec and Glove.

[1] There were other challenges, one of which was the unique vocabulary of cooking, including various words and phrases from multiple languages. Another was the lack of grammatical structure in the web site content. The content was much less formal than Wikipedia and not much more formal than Twitter. It was rife with spelling errors, little or no discernable grammar, odd formatting, punctuation, etc.

[2] Toutanova et al 2003 describe the Stanford POS tagger as follows: “… a part-of-speech tagger with a per-position tag accuracy of 97.24%, and a whole-sentence correct rate of 56.34% on Penn Treebank WSJ data. This is the best automatically learned part-of-speech tagging result known to us…”

[3] The GloVe paper demonstrated that an asymmetric window (i.e., shifted “left”) does not work as well for syntactic tasks, such as part of speech tagging (as seems intuitive and as suggested in Tautanova et al 2003).

[4] The cooking corpus had only a few million words, so a much smaller “vocabulary” was fine there.

[5] MINITAGGER is written in Python and uses MATLAB which we needed to replace for commercial reasons.