I regularly build deep learning models for natural language processing, and today I tried one that has been the leader on the Stanford Question Answering Dataset (SQuAD). It is an impressive NLP platform built using PyTorch. But it’s still missing the big picture (i.e., it doesn’t “know” much).

Generally, NLP systems that emphasize Big Data (e.g., deep learning approaches) but eschew more explicit knowledge representation and reasoning are interesting but unintelligent. Think Siri and Alexa, for example. They might answer a simple factoid question correctly if a Google search can find closely related text, but not much more.

Here is a simple demonstration of problems that the state of the art in deep machine learning is far from solving…

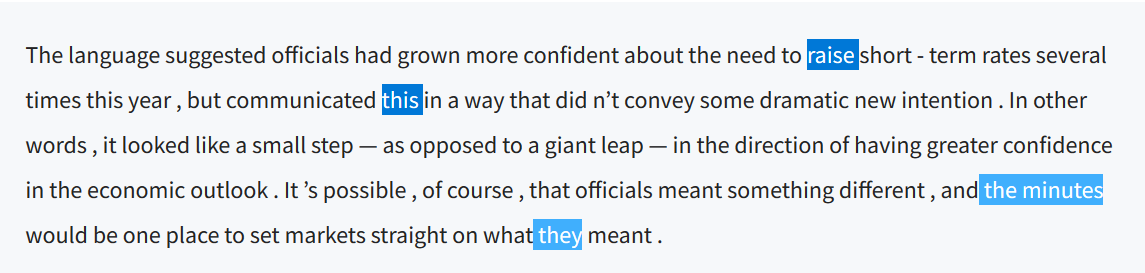

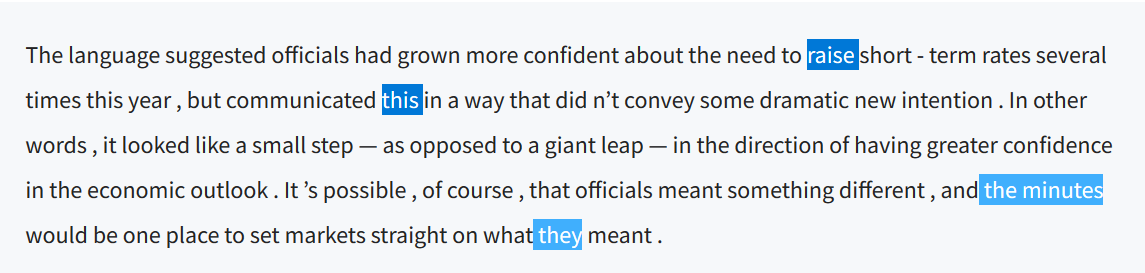

The screenshots above show a paragraph from a Wall Street Journal article about the Fed today, along with what the deep learning system “found” as the referents of the pronouns “this” and “they”.

The essential point here is that the deep learning system is missing common sense. It is “the need”, not “a raise”, that “this” refers to. And “they” refers to “officials”, not “the minutes”.

Bottom line: if you need your natural language understanding system to be smarter than this, you are not going to get there using deep learning alone.