In the spring of 2012, Vulcan engaged Automata for a knowledge acquisition (KA) experiment. This article provides background on the context of that experiment and on what its results portend for artificial intelligence applications, especially in education. Vulcan presented some of the award-winning work referenced here at an AI conference, including a demonstration of the electronic textbook discussed below. A video of that presentation is available; its introductory remarks are interesting but not pertinent to this article.

Background on Vulcan’s Project Halo

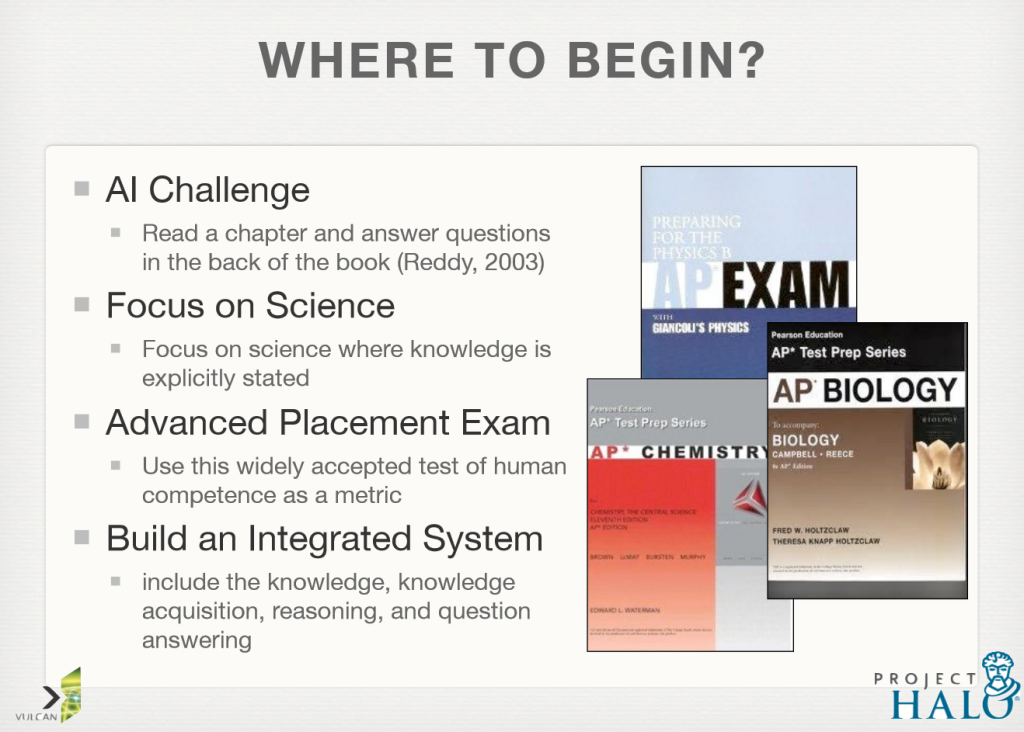

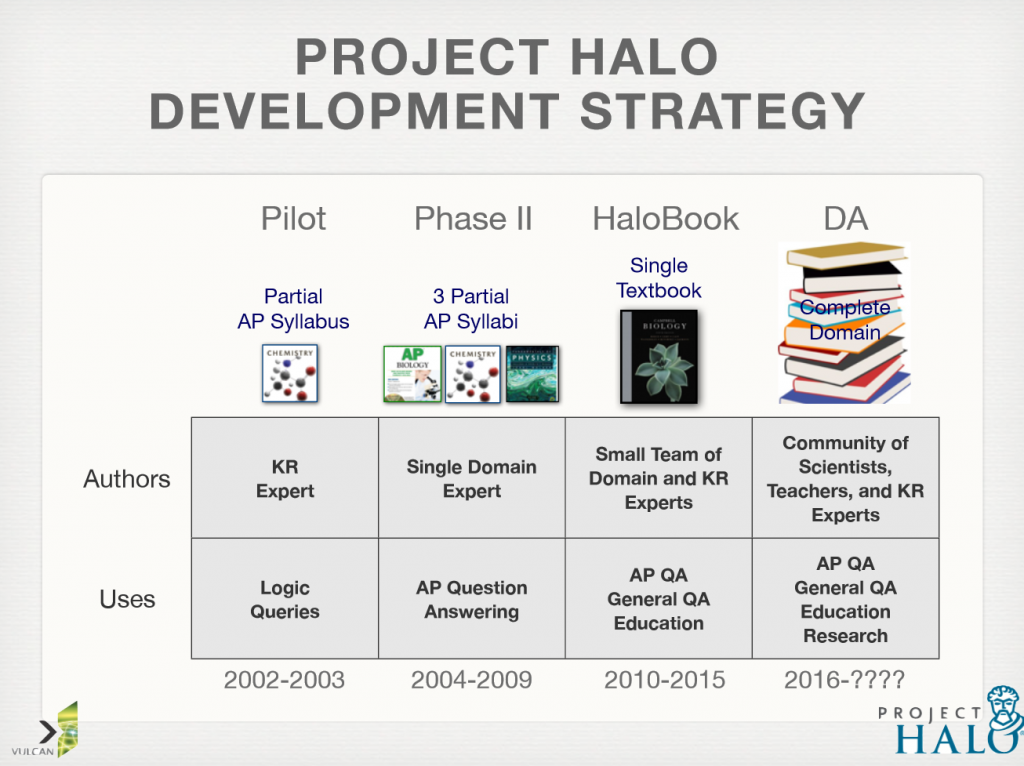

From 2002 to 2004, Vulcan's Halo Pilot produced systems that could correctly answer between 30% and 50% of the questions on Advanced Placement (AP) chemistry tests. These systems relied on sophisticated formal knowledge representation and expert knowledge engineering. Of the three competing teams, Cycorp fared the worst and SRI the best. SRI's system performed at the level of a 3 on the AP exam, a score that earns course credit at many universities. The consensus at the time was that a score of 4 was achievable with limited additional effort. However, this level of performance cost roughly $10,000 per page, which needed to fall significantly before Vulcan's objective of a Digital Aristotle could be considered viable.

Background on Vulcan’s Aura system

In 2004, Vulcan initiated phase II of Project Halo, focused on enabling domain experts to build systems capable of answering AP questions in chemistry, physics, and biology. The principal objective of this research was to determine whether the cost of knowledge acquisition (KA) could be reduced significantly while, ideally, also improving AP test performance. This work produced Vulcan's Aura system in 2007.

Experiments conducted during 2008 and 2009 showed that after two months of training and use of Aura, subject matter experts (SMEs) were able to encode knowledge sufficient to answer roughly 75% of AP questions in biology using Aura.

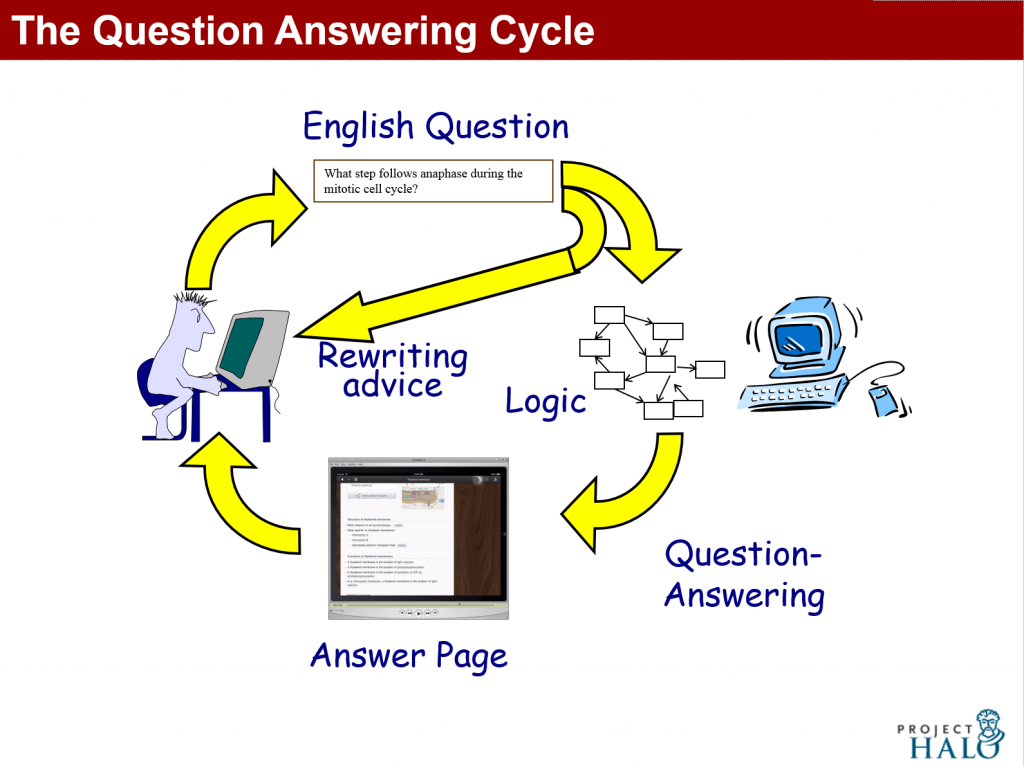

The knowledge acquisition effort required was roughly 15 hours per page on average, whether performed by knowledge engineers (KEs) or SMEs. This level of performance came with caveats, however. First, questions had to be reformulated from natural language into a controlled syntax using precise terminology, which often took users several attempts.

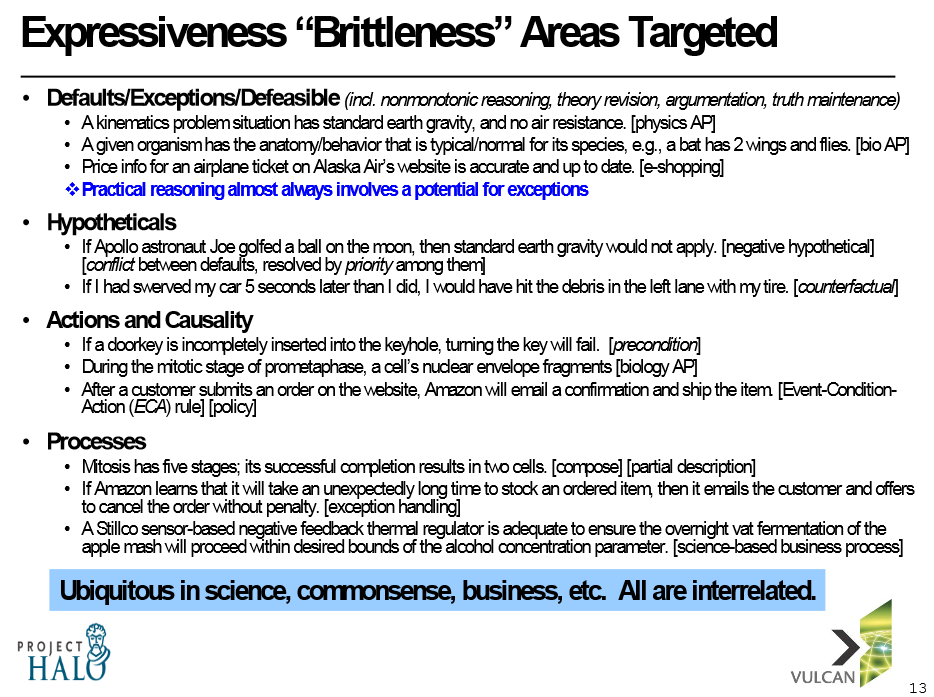

Second, certain limitations of Aura's knowledge representation and reasoning (KR&R) kept performance below 80% correctness. This second weakness motivated Vulcan to begin developing SILK by 2008. In particular, SILK targets the following areas:

While working towards more powerful KR&R, Vulcan continued the Aura experiment by going broader and deeper within biology, the domain that raised the fewest immediate KR&R challenges. Specifically, Vulcan scaled up the effort to cover all 59 chapters of Campbell's Biology from Pearson, roughly 30 times the 40 pages of knowledge acquired during the initial experiment.

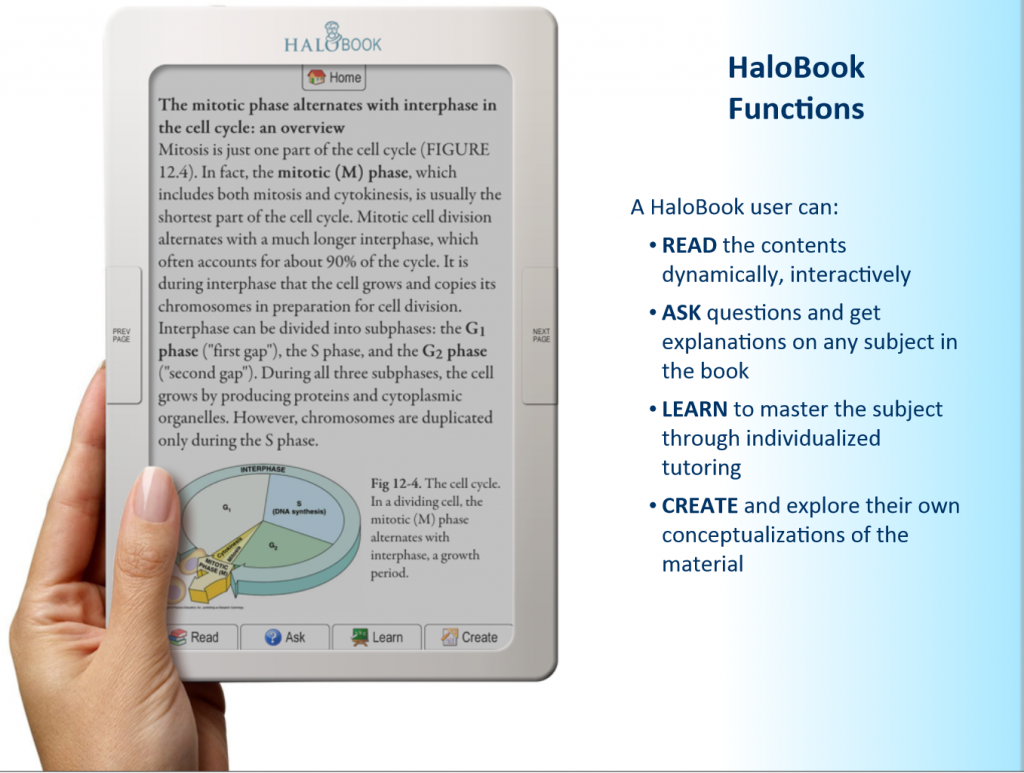

In parallel with scaling up coverage, Vulcan developed the concept of a HaloBook, as discussed in Paul Allen's memoir, Idea Man. In 2010, a prototype called Inquire was developed as an electronic textbook application for Apple's iPad. Inquire integrates questions and answers into the electronic textbook, enriching its content with conceptual structure and meaningful relationships drawn from knowledge captured with Aura, effectively transforming a textbook into a semantic platform for intelligent tutoring.

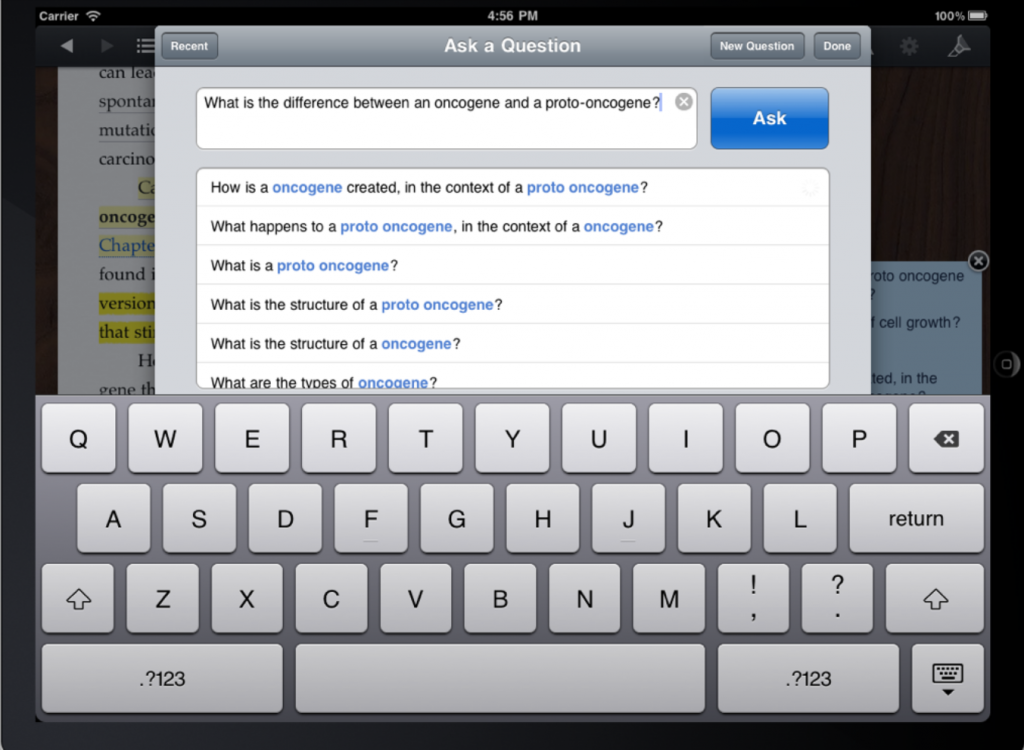

The Inquire prototype sidesteps the question (re)formulation problem discussed above by showing questions that appear to be related to the ones users type. This approach avoids having to understand arbitrary questions and gives users guidance about what they are asking.
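The text does not say how Inquire decides which stored questions are related to what a user types. As a hedged illustration only, the sketch below assumes a simple word-overlap (Jaccard) ranking over a small, hypothetical catalog of questions the knowledge base can already answer; the catalog, stopword list, and function names are all invented for this example, and a real system would likely use richer linguistic or semantic matching.

```python
import re

# Hypothetical catalog of questions the knowledge base can already answer.
# Inquire's actual matching method is not described; this sketch ASSUMES
# plain word-overlap (Jaccard) similarity purely for illustration.
ANSWERABLE = [
    "What is the function of a ribosome?",
    "What is the difference between a eukaryotic cell and a prokaryotic cell?",
    "What is the structure of a mitochondrion?",
    "Where does photosynthesis take place?",
]

STOPWORDS = {"what", "is", "the", "a", "an", "of", "does", "do",
             "where", "and", "between", "to", "in"}


def tokens(text: str) -> set[str]:
    """Lowercase the text, split on non-letters, and drop common function words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    """Intersection size over union size; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest(query: str, catalog: list[str], k: int = 3) -> list[str]:
    """Return up to k catalog questions sharing a content word with the query, best first."""
    q = tokens(query)
    scored = [(jaccard(q, tokens(c)), c) for c in catalog]
    scored = [sc for sc in scored if sc[0] > 0]
    scored.sort(key=lambda sc: sc[0], reverse=True)
    return [c for _, c in scored[:k]]


print(suggest("How does a ribosome function?", ANSWERABLE))
# ['What is the function of a ribosome?']
```

Because the user picks from questions the system already knows how to answer, the hard problem of fully understanding free-form input is avoided, which is the trade-off the paragraph above describes.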

In a 2012 study of 72 freshmen at De Anza College, students who used Inquire, unlike students in the control groups, received no grade lower than a C. One perceived benefit was that students could ask questions they might otherwise be embarrassed to ask a teacher. You can learn more about the application in the following video.

The knowledge acquisition bottleneck

During 2010 and 2011, evidence began to accumulate indicating that the cost of knowledge acquisition using Aura was not falling enough for commercial viability. Although Aura could capture most of the knowledge in the text and encode background knowledge sufficient to answer roughly two thirds of reformulated AP exam questions, the cost of this level of performance remained above several thousand dollars per page. For a textbook like Campbell's Biology, this would amount to millions of dollars. Although the resulting application could be profitable for such an expensive, high-volume textbook, the viability of acquiring the knowledge in hundreds of textbooks for deep question answering (QA) had not been established. Consequently, in 2012, Vulcan commissioned a KA experiment in which knowledge would be captured from English rather than through Aura's graphical approach.
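To make the scale concrete, the figures above can be combined into a rough estimate. The page count follows from the text (30 times the 40 pages of the initial experiment) and the 15 hours per page comes from the Aura experiments; applying that rate to the whole book, and using $3,000 per page as a stand-in for "several thousand dollars", are assumptions for illustration only.

```python
# Rough scale of full-textbook KA with Aura.
INITIAL_PAGES = 40             # pages encoded in the initial Aura experiment (from the text)
SCALE_FACTOR = 30              # full Campbell's Biology is "roughly 30 times" that (from the text)
HOURS_PER_PAGE = 15            # average KA effort per page (from the text)
ASSUMED_COST_PER_PAGE = 3_000  # USD; HYPOTHETICAL low reading of "several thousand dollars"


def ka_estimate(pages: int, hours_per_page: int, cost_per_page: int) -> tuple[int, int]:
    """Return (total effort in hours, total cost in USD) for encoding `pages` pages."""
    return pages * hours_per_page, pages * cost_per_page


pages = INITIAL_PAGES * SCALE_FACTOR
hours, cost = ka_estimate(pages, HOURS_PER_PAGE, ASSUMED_COST_PER_PAGE)
print(f"{pages:,} pages, {hours:,} hours, ${cost:,}")
# 1,200 pages, 18,000 hours, $3,600,000
```

Even at this low assumed rate, a single textbook lands in the millions of dollars, which is why scaling to hundreds of textbooks remained unproven.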

Project Sherlock

In the KA experiment, Automata's patent-pending Linguist™ software was used to translate English sentences into formal logic and then to translate the resulting logic into the maturing SILK system. The process had three steps: each sentence in a chapter of the textbook was interpreted using the Linguist, then formalized as a logical axiom, and finally translated into the syntax required by SILK.
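The three steps can be illustrated with a deliberately tiny sketch that handles only sentences of the form "Every X is a Y." It is not how the Linguist works, since its methods are not described here. The regular-expression "interpretation", the `Subsumption` axiom type, and the Flora-2-style rule syntax used as a stand-in for SILK's actual syntax are all assumptions.

```python
import re
from dataclasses import dataclass

# Toy three-stage pipeline: interpret -> formalize -> translate.
# Handles only "Every X is a/an Y." sentences. The pattern, the axiom type,
# and the Flora-2-style target syntax (a stand-in for SILK) are ASSUMPTIONS.

PATTERN = re.compile(r"every ([a-z ]+?) is an? ([a-z ]+?)\.?")


@dataclass(frozen=True)
class Subsumption:
    """Axiom of the form: for all x, sub(x) implies sup(x)."""
    sub: str
    sup: str

    def as_fol(self) -> str:
        return f"forall x. ({self.sub}(x) -> {self.sup}(x))"


def interpret(sentence: str) -> tuple[str, str]:
    """Step 1: recognize the sentence pattern and extract its two noun phrases."""
    m = PATTERN.fullmatch(sentence.strip().lower())
    if m is None:
        raise ValueError(f"unsupported sentence: {sentence!r}")
    return m.group(1), m.group(2)


def formalize(subject: str, category: str) -> Subsumption:
    """Step 2: turn noun phrases into predicate names and build the axiom."""
    return Subsumption(subject.replace(" ", "_"), category.replace(" ", "_"))


def to_rule(axiom: Subsumption) -> str:
    """Step 3: render the axiom as a Flora-2-style rule (assumed target syntax)."""
    return f"?X:{axiom.sup} :- ?X:{axiom.sub}."


axiom = formalize(*interpret("Every red blood cell is a cell."))
print(axiom.as_fol())  # forall x. (red_blood_cell(x) -> cell(x))
print(to_rule(axiom))  # ?X:cell :- ?X:red_blood_cell.
```

Keeping the logical axiom as an intermediate form, rather than going straight from English to rule syntax, lets the same formalization be retargeted if the reasoner's syntax changes.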

The Linguist™

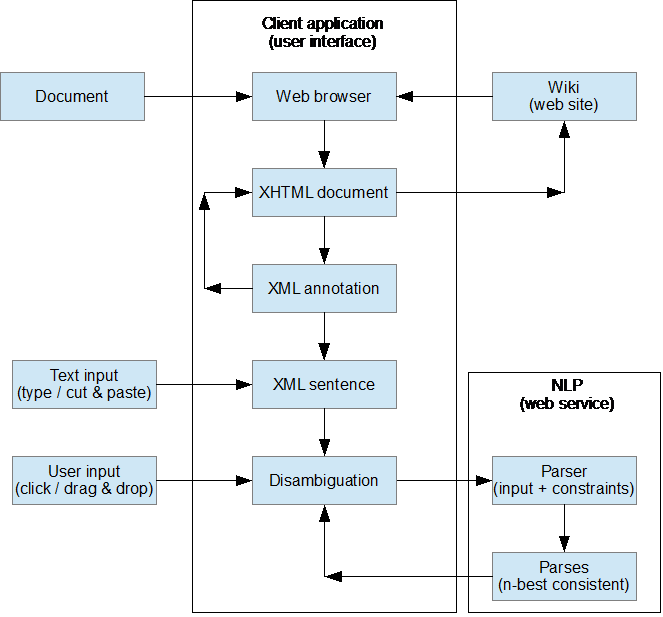

The Linguist uses patent-pending methods to curate text into more formal logical semantics. Essentially, the Linguist accumulates knowledge from the web (or from imported documents) into a wiki-like platform and annotates the content with precise lexical, syntactic, semantic, and logical information. The accumulated logical semantics are then available for processing with semantic web and logic-based technologies. Aside from annotation, the core function of the Linguist is to engage users in disambiguating the meaning of sentences, as shown below.

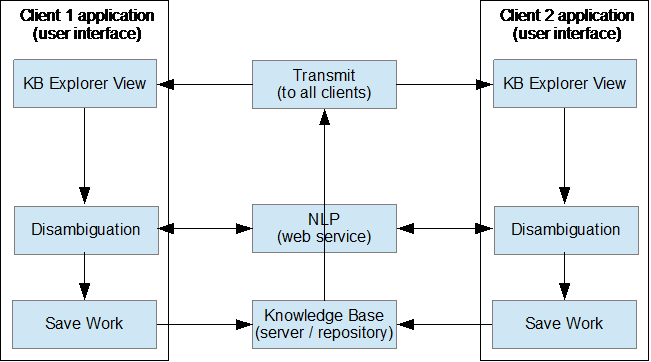
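The disambiguation step can be pictured as a small data structure: a sentence carries several candidate readings, each with a paraphrase a user can judge, and the user's choice determines which logical form is kept. This is a hypothetical sketch; the class names, fields, example sentence, and logical forms are illustrative assumptions, not the Linguist's internal representation.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical sketch: the parser proposes several readings of a sentence, each
# paired with a paraphrase a user can judge; the user's pick fixes its logic.
# Class names, fields, and the logical forms are illustrative ASSUMPTIONS.


@dataclass
class Reading:
    paraphrase: str  # plain-English rendering shown to the user
    logic: str       # candidate logical form for this reading


@dataclass
class AmbiguousSentence:
    text: str
    readings: list[Reading]
    chosen: Optional[int] = None  # index of the user's choice, if any

    def choose(self, index: int) -> None:
        """Record the user's choice, rejecting indices with no matching reading."""
        if not 0 <= index < len(self.readings):
            raise IndexError(f"no reading #{index} for {self.text!r}")
        self.chosen = index

    def logic(self) -> str:
        """Return the chosen logical form; refuse while still ambiguous."""
        if self.chosen is None:
            raise ValueError(f"{self.text!r} has not been disambiguated")
        return self.readings[self.chosen].logic


s = AmbiguousSentence(
    "A macrophage engulfs a bacterium with a pseudopod.",
    [
        Reading("The macrophage uses a pseudopod to engulf the bacterium.",
                "engulf(e, m, b) & instrument(e, p) & pseudopod(p)"),
        Reading("The macrophage engulfs a bacterium that has a pseudopod.",
                "engulf(e, m, b) & has_part(b, p) & pseudopod(p)"),
    ],
)
s.choose(0)
print(s.logic())
# engulf(e, m, b) & instrument(e, p) & pseudopod(p)
```

Refusing to emit logic until a reading is chosen keeps ambiguous sentences from silently entering the knowledge base.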

The client application used in the KA experiment is a Java-based web application called KnowBuddy™, which uses a natural language processing (NLP) web service and a knowledge base service implemented in Java. The knowledge base server maintains a repository of the acquired knowledge with change-management functionality, such as recording a history of user actions, including edits to vocabulary, ontology, and sentences. The clients and server cooperate to distribute information about ongoing activities across users.
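One way to picture this change management is an append-only log of user actions from which per-item histories are derived, with clients polling for changes newer than the last one they have seen. This is a hypothetical sketch: the change kinds, field names, and polling scheme are assumptions, and KnowBuddy's actual design is not described here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical sketch of KB-server change management: every user action is
# appended to an ordered log, per-item histories are derived from it, and
# clients poll for changes newer than the last sequence number they have seen.
# Change kinds, field names, and the polling scheme are ASSUMPTIONS.

KINDS = {"vocabulary", "ontology", "sentence"}


@dataclass(frozen=True)
class Change:
    seq: int      # position in the log, starting at 1
    user: str
    kind: str     # one of KINDS
    item: str     # identifier of the edited word, concept, or sentence
    action: str   # short description of the edit
    at: datetime  # UTC timestamp


@dataclass
class ChangeLog:
    entries: list[Change] = field(default_factory=list)

    def record(self, user: str, kind: str, item: str, action: str) -> Change:
        """Append one user action to the log and return it."""
        if kind not in KINDS:
            raise ValueError(f"unknown change kind: {kind!r}")
        change = Change(len(self.entries) + 1, user, kind, item, action,
                        datetime.now(timezone.utc))
        self.entries.append(change)
        return change

    def history(self, item: str) -> list[Change]:
        """All changes to one item, oldest first."""
        return [c for c in self.entries if c.item == item]

    def since(self, seq: int) -> list[Change]:
        """Changes a client has not yet seen, given the last seq it received."""
        return self.entries[seq:]


log = ChangeLog()
log.record("alice", "vocabulary", "ribosome", "added noun sense")
log.record("bob", "sentence", "ch6-s12", "chose reading 1")
log.record("alice", "ontology", "ribosome", "linked to ontology class Ribosome")
print([c.action for c in log.history("ribosome")])
# ['added noun sense', 'linked to ontology class Ribosome']
```

Because sequence numbers start at 1 and are never reused, `since(n)` can simply slice the log, and each client only needs to remember one integer to stay current with other users' activity.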

In the KA experiment, the Linguist used PET, an open-source NLP system implemented in C++ and running as a web service under Linux, together with the English Resource Grammar (ERG), a large-vocabulary, high-precision, unification-based constituency grammar. Other NLP systems, such as those that use statistical dependency grammars, can also be supported.
